The main types of sensors in Robotics & Self-Driving Cars (and how much you should know about each)

"I made it". These were my first thoughts back in 2017, after I graduated from my diploma in Internet of Things and got my first internship. After years learning about electrical signals, bluetooth, connectivity, networks, prototyping, and time spent building projects using multiple types of sensors... I was going to be an IoT Engineer — and make millions!

Or so I thought... Because my first project ended up being an epic failure. The market wasn't really interested in smart alarms like we all thought it would be; there was no interest in smart fridges or other smart objects either... IoT was flopping, and thus, I got sent to another consulting project on AI!

And AI gave me this pair of glasses and told me "See? You learned about all these sensors, but what really matters is the data they collect". That was really an eye-opener for me; to the point where I spent most of my energy learning about AI & Robots... and writing about it.

But sensors matter too — and without them — there wouldn't be any self-driving car in development. This is why I'd like this article to focus on sensors. Not on the AI processing, but really the sensors themselves — explain to you the main types, and how much you should know about them.

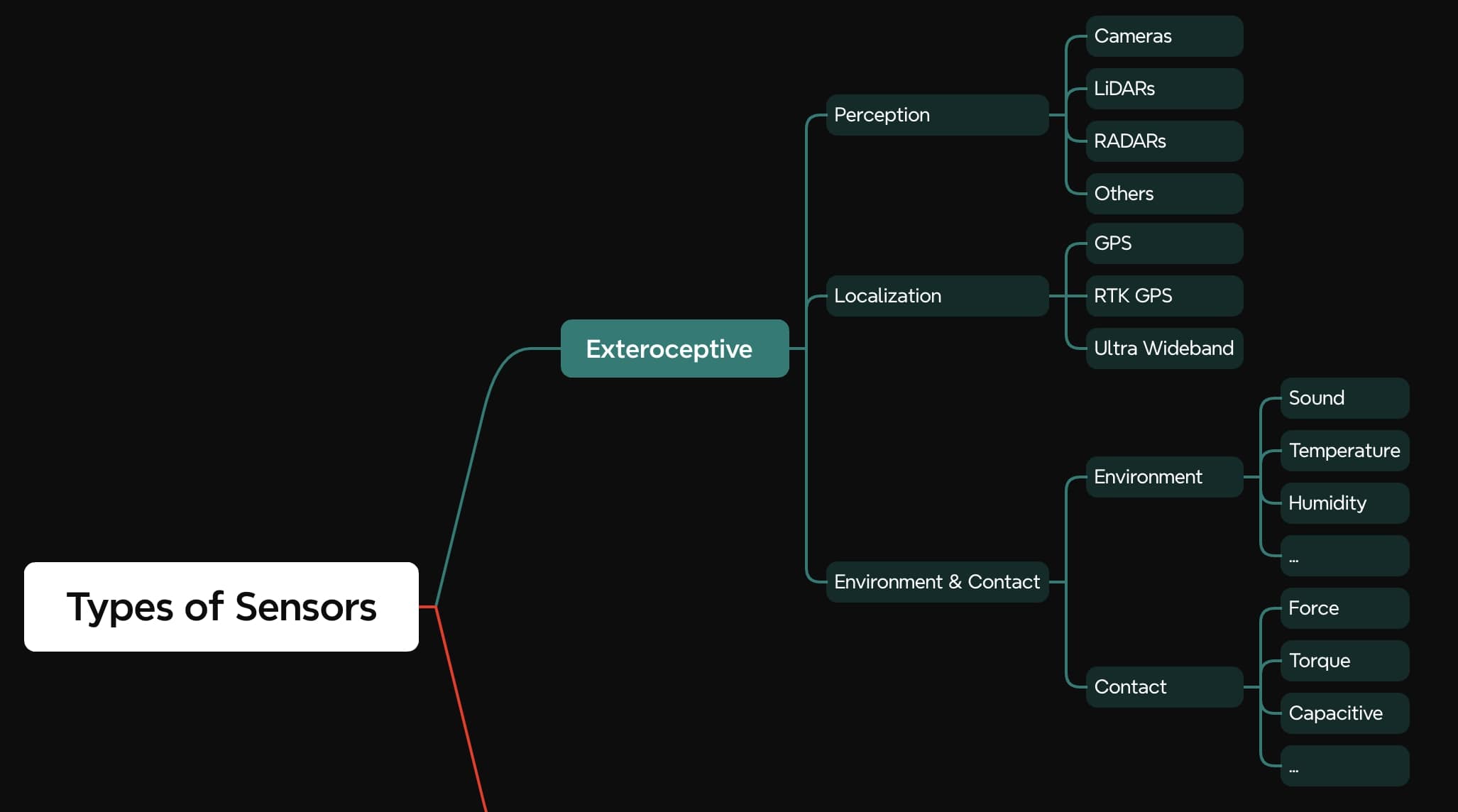

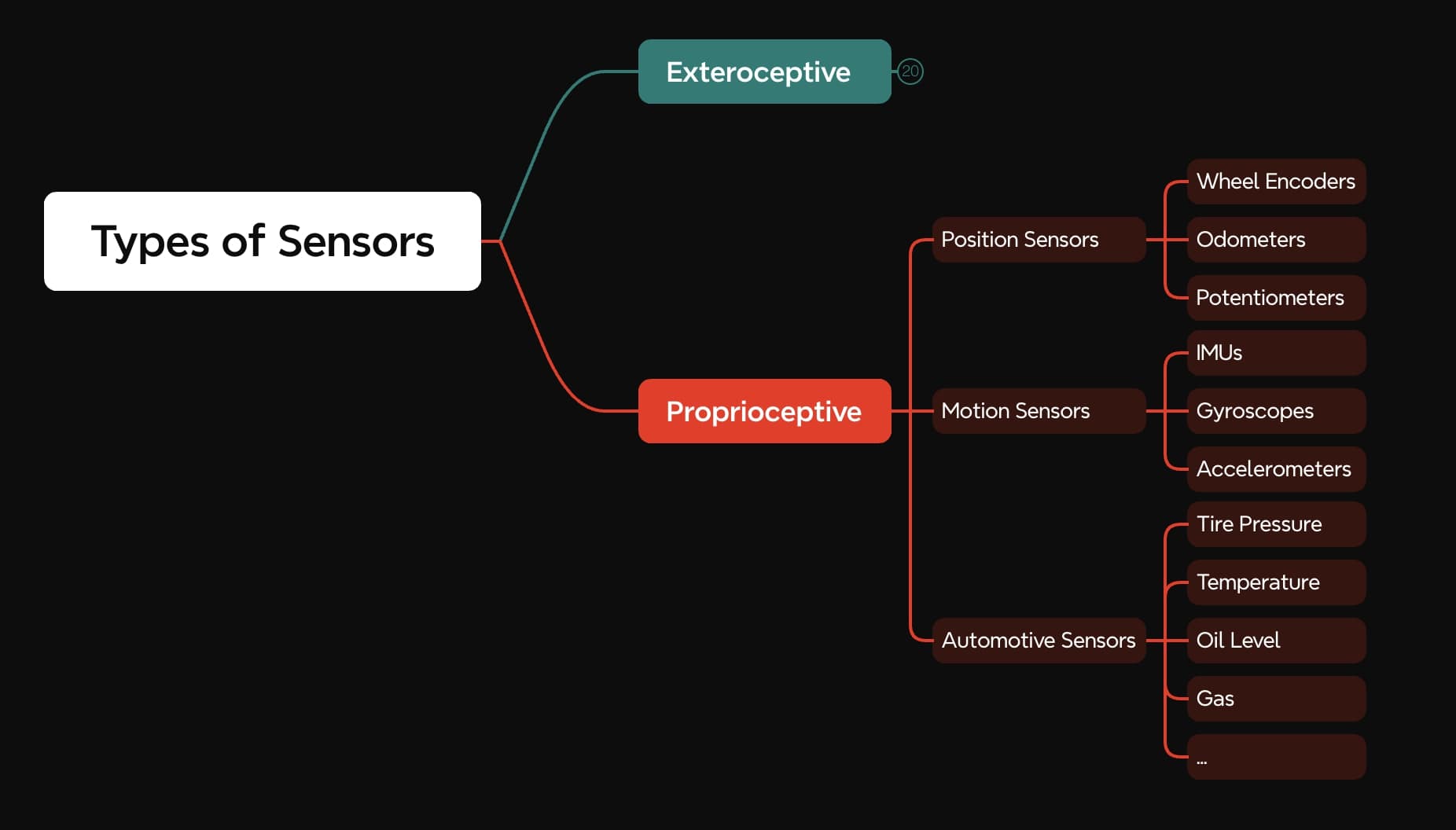

This article will split sensors into 2 categories:

- Exteroceptive sensors — the external sensors

- Proprioceptive sensors — the internal sensors

Yes, the wording is scary — but you'll see they don't bite. Let's begin.

Exteroceptive Sensors: Looking at the outside world

In robotics or self-driving cars, you need to see the world so you can navigate in it. Every sensor that is external will be part of the exteroceptive sensor category. For example cameras or stereo cameras, LiDARs (light detection and ranging), RADARs (radio detection and ranging), GPS, ultrasonic sensors & proximity sensors, thermal cameras, infrared sensors, and so on...

There are many ways we could categorize this first family, but I like to do it by task, for example:

- Perception Sensors

- Localization Sensors

- Environmental Condition Sensors

Perception Sensors

I assume you already have a good idea of what Perception sensors are. Yet you may not realize how much you need to know about each. Let's see, for a few of them, what you should know:

Cameras

So you know what a camera is, right? Well, do you know how to set parameters like ISO? Shutter? Gain? Whether to use grayscale or RGB? Yes? So, do you know how to find the intrinsic and extrinsic parameters of the camera? Or how to use ChArUco calibration? What about Stereo Calibration? Do you know how to calibrate using multiple checkerboards?
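To make "intrinsic" and "extrinsic" a bit more concrete, here is a minimal sketch of the pinhole camera model, assuming no lens distortion. The function name and all numeric values (focal lengths, principal point) are made up for illustration; in practice they come out of a calibration step.

```python
def project_point(point_world, rotation, translation, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates with a pinhole model."""
    # Extrinsics: move the point from the world frame into the camera frame.
    xc, yc, zc = (
        sum(rotation[i][j] * point_world[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if zc <= 0:
        raise ValueError("point is behind the camera")
    # Intrinsics: perspective division, then scale by the focal length
    # (in pixels) and shift by the principal point.
    u = fx * xc / zc + cx
    v = fy * yc / zc + cy
    return u, v


# Hypothetical camera: aligned with the world frame, 800 px focal length,
# principal point at the center of a 1280x720 image.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pixel = project_point((1.0, 0.5, 10.0), IDENTITY, (0.0, 0.0, 0.0),
                      fx=800.0, fy=800.0, cx=640.0, cy=360.0)
print(pixel)
```

Calibration is essentially the reverse problem: you observe known patterns (checkerboards, ChArUco boards) and solve for the parameters that make this projection match the images.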

Knowing cameras isn't just knowing how to load images; it's mostly knowing the camera parameters very well. When you truly understand cameras, you can build really wonderful applications, like this 3D Reconstruction we do in my course MASTER STEREO VISION:

As you can see, we build a complete 3D environment from just cameras! Cameras may give flat 2D images, but when used in stereo mode, you can leverage their 3D properties.
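The core idea behind getting 3D from a stereo pair can be sketched in a few lines. This assumes a rectified stereo setup and uses the classic relationship depth = focal length x baseline / disparity; the function name and the numbers are illustrative only.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth (in meters) of a point seen by a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    # The larger the horizontal shift between left and right images,
    # the closer the point is to the cameras.
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, cameras 12 cm apart.
near = depth_from_disparity(disparity_px=42.0, focal_length_px=700.0, baseline_m=0.12)
far = depth_from_disparity(disparity_px=4.2, focal_length_px=700.0, baseline_m=0.12)
print(near, far)
```

Notice how a small disparity maps to a large depth: this is why stereo depth gets noisier the farther away an object is.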

What's interesting:

LiDARs

Cameras are good with distances, but not as good as LiDARs. LiDARs/lasers are light sensors that send out a light pulse and measure how long it takes to come back, which gives a very precise distance to an object. It means they work in the dark, and they can construct a very accurate point cloud. The thing is, there are many types of LiDARs (like 2D LiDARs, 3D, or even 4D LiDARs, but also Time of Flight vs others...), and the output may be intuitive, but the inner technology is quite complex.
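For the Time of Flight flavor, the principle fits in a short sketch: measure the round-trip time of a light pulse, convert it to a range, then turn the range and beam angles into a 3D point. The function names and the angle convention (azimuth around the vertical axis, elevation above the horizontal plane) are assumptions for illustration; real sensors each document their own conventions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def tof_to_range(round_trip_s):
    """Range in meters from the round-trip time of a light pulse."""
    # The pulse travels to the object and back, hence the division by 2.
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_cartesian(range_m, azimuth_rad, elevation_rad):
    """Convert one LiDAR return (range + beam angles) to an (x, y, z) point."""
    x = range_m * math.cos(elevation_rad) * math.cos(azimuth_rad)
    y = range_m * math.cos(elevation_rad) * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return x, y, z


# A pulse that comes back after 200 nanoseconds hit something ~30 m away.
distance = tof_to_range(200e-9)
point = to_cartesian(distance, math.radians(90.0), 0.0)
print(distance, point)
```

Repeat this for every beam and every rotation step, and you get the point cloud.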

Below is an example of LiDAR processing we do in my course POINT CLOUDS CONQUEROR:

LiDARs are excellent sensors, but extremely costly (this is why companies like Tesla don't use them), easily affected by weather like fog, rain, snow, or even dust, and most of them can't measure velocities directly (when you need to measure the velocity of an object, you have to compute how far it moved between two frames and divide by the time between them). This is why most automotive companies use... RADARs!
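Here is what that two-frame velocity estimate looks like as a minimal sketch. It assumes you already have the same object's position in two consecutive frames (which in practice requires detection and tracking); the function name and values are illustrative.

```python
import math


def velocity_between_frames(pos_prev, pos_curr, dt_s):
    """Velocity vector (m/s) of an object seen at two positions dt_s apart."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    return tuple((c - p) / dt_s for p, c in zip(pos_prev, pos_curr))


# Object centroid in two LiDAR frames captured 0.1 s apart (10 Hz sensor).
velocity = velocity_between_frames((10.0, 2.0, 0.0), (9.0, 2.0, 0.0), dt_s=0.1)
speed = math.sqrt(sum(component ** 2 for component in velocity))
print(velocity, speed)
```

Any noise in the two positions gets amplified by the division by a small time step, which is one reason a direct velocity measurement is so valuable.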

3D and 4D RADARs (Radio Detection And Ranging)

RADARs are very mature (100 years old or so), used in TONS of industries, but they're super un-intuitive. The first time I saw a RADAR output, it took me 30 minutes just to get what was on the screen, and I wasn't even sure. Most Perception Engineers in the AI/Self-Driving Car space avoid them like the plague because of that.

Because they use radio waves, they're far less affected by weather conditions, and because they use the Doppler Effect, they can measure the relative velocity of objects. In the self-driving car world, there are almost 0 courses on RADARs, but I did create a very interesting piece of content for my membership, in which we learn to visualize RADARs, create point clouds, and intuitively understand the output. Here is a look at it:

This output was made using an Imaging RADAR (4D RADAR) from a startup named bitsensing. I highly recommend you go check out their product, especially because it's 4D technology. Unlike most RADARs that find 2D + Speed, Imaging RADARs find 3D + Speed (they see the Z dimension). Similarly, FMCW LiDARs steal the Doppler effect from RADARs to measure speed directly, and become 4D LiDARs as well.
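The "Speed" part comes from the Doppler effect mentioned above, and the relationship is simple enough to sketch. This assumes a monostatic sensor (emitter and receiver at the same place) and a 77 GHz carrier, which is a common automotive RADAR band; the function names are illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def radial_velocity(doppler_shift_hz, carrier_hz):
    """Relative radial velocity (m/s) from a measured Doppler shift."""
    # The wave is shifted once on the way out and once on the way back,
    # hence the factor 2.
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


def doppler_shift(radial_velocity_mps, carrier_hz):
    """Inverse relationship: expected Doppler shift for a given velocity."""
    return 2.0 * radial_velocity_mps * carrier_hz / SPEED_OF_LIGHT


CARRIER_HZ = 77e9  # assumed automotive RADAR carrier frequency
shift = doppler_shift(30.0, CARRIER_HZ)  # a car closing in at 30 m/s
print(shift, radial_velocity(shift, CARRIER_HZ))
```

Note that this only gives the velocity component along the line of sight; an object moving sideways produces almost no Doppler shift.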

Other Sensors (Infrared, Ultrasonic, Thermal ...)

Let’s continue looking at the types of sensors in the Perception world. When you park your car and hear “BIP BIP”, you're working with an ultrasonic sensor. These are great sensors for short-range, static objects. On the other hand, RADARs are longer range, and work better with moving objects.

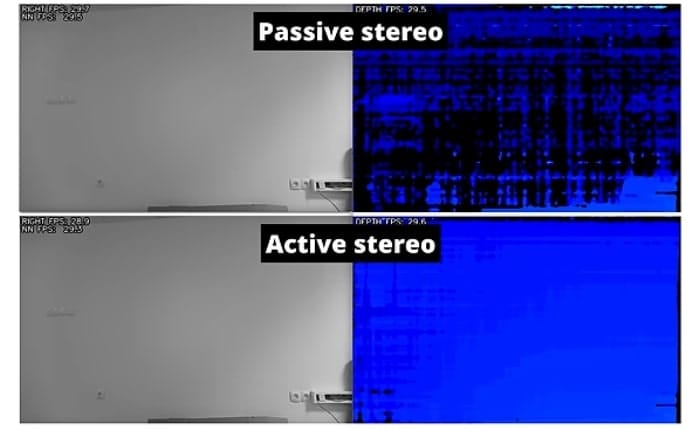

Infrared or Thermal can be a great thing to use with cameras. For example, Luxonis recently announced it was now selling thermal cameras and stereo cameras with Active Stereo. This means using IR sensors with dot projectors to help the camera find depth; which can be very useful for example when an object is in front of a wall (and thus, no detail is visible).

Now, let’s move on to category 2:

Localization Sensors

Even more misunderstood, GPS receivers rely on satellites in space to trilaterate your position. They're widely used in self-driving cars, especially when relying on classic localization and mapping techniques. Beyond plain GPS, RTK (Real-Time Kinematic) GPS is now the standard, because while regular GPS is accurate to within a meter or a few, RTK GPS gives centimeter-level accuracy.

How?

An RTK GPS communicates with a fixed antenna (with a known position) which can measure the error in localization. If the antenna is positioned at (0, 0, 0) and its GPS says it's at (0.5, 0.5, 0.5), you know you have a 0.5 meter error on each axis, and you can send this info back to the cars using RTK GPS so they can correct their own position.
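The intuition above can be written as a tiny sketch. To be clear, this is only the simplified "measure the error at a known point, subtract it elsewhere" idea from the example; real RTK works on the satellites' carrier-phase measurements rather than on position offsets. Function names and coordinates are illustrative.

```python
def base_station_error(known_position, measured_position):
    """Error observed at the fixed antenna: measured minus known position."""
    return tuple(m - k for k, m in zip(known_position, measured_position))


def apply_correction(rover_measured, error):
    """Subtract the base station's error from the vehicle's own GPS reading."""
    return tuple(r - e for r, e in zip(rover_measured, error))


# The fixed antenna knows it sits at (0, 0, 0) but its GPS reads (0.5, 0.5, 0.5).
error = base_station_error((0.0, 0.0, 0.0), (0.5, 0.5, 0.5))
# A nearby car reads (120.5, 45.5, 10.5) and applies the same correction.
corrected = apply_correction((120.5, 45.5, 10.5), error)
print(error, corrected)
```

This works because a car close to the base station sees roughly the same atmospheric and satellite errors as the antenna does.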

In my own personal experience, I worked on autonomous shuttles that drove through the Polytechnique Campus in France (the country's most prestigious engineering school), and I remember TONS of problems with GPS, such as:

- Clouds and weather affecting the signal strength

- Tunnels turning our GPS receivers off

- Trees confusing GPS positions

- Or students' weird experiments in some dorm rooms confusing our GPS signals

For some of these cases, we relied on ultra-wideband (UWB) technology, which consisted of setting up a network of fixed reference nodes throughout the environment. These nodes communicated with mobile tags on the robots, providing precise distance measurements through time-of-flight calculations.
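To give an idea of how distances to fixed nodes become a position, here is a minimal 2D trilateration sketch with three anchors. It assumes the one-way time of flight to each anchor is already known and noise-free; the function names and the anchor layout are made up for illustration, and this is not the setup we actually deployed.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def tof_to_distance(one_way_s):
    """Distance in meters from a one-way radio time of flight."""
    return SPEED_OF_LIGHT * one_way_s


def trilaterate_2d(anchors, distances):
    """Position (x, y) of a tag from its distances to 3 fixed anchors."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = distances
    # Subtracting the first circle equation from the other two removes the
    # squared unknowns and leaves a 2x2 linear system.
    a11, a12 = 2.0 * (x2 - x1), 2.0 * (y2 - y1)
    a21, a22 = 2.0 * (x3 - x1), 2.0 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors must not be collinear")
    # Solve the system with Cramer's rule.
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det


# Three hypothetical anchors and the distances measured to a tag at (3, 4).
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
distances = [5.0, math.sqrt(65.0), math.sqrt(45.0)]
print(trilaterate_2d(anchors, distances))
```

With noisy measurements or more than three anchors, you would typically replace the exact solve with a least-squares fit.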

Finally:

Environmental & Contact Sensors

In late 2024, Waymo announced their 6th gen vehicle was using an array of audio sensors to recognize honks, ambient noise, or sirens. When you think about it, it makes a lot of sense for a car to use sound. We rely on sound a lot when driving. But the idea gets even bigger outside of the car world. Using this logic, we could think of humidity sensors, gas sensors, radioactivity sensors, ...

Now something related:

Now, what if we change the focus from "cars" to things like wheeled robots, humanoid robots, or specialized robots like surgery robots? They often do something cars don't: make physical contact. And thus, part of their environment is the other objects they make contact with.

For example, capacitive sensors can detect touch, proximity, or even the type of material. You can see them as a "skin" for robots. Some can also sense humidity or a surface, find moisture, and more...

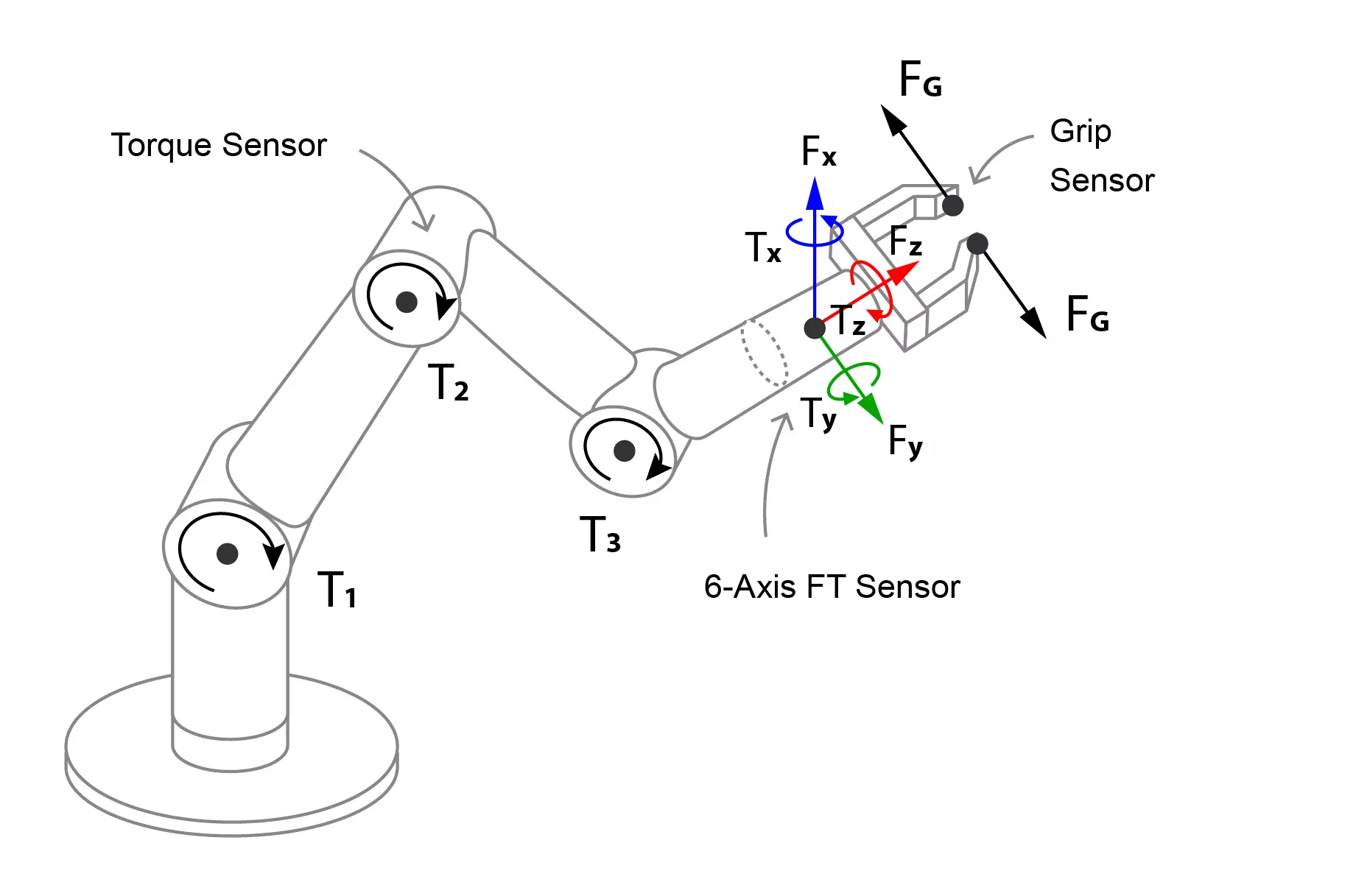

An example on a robot arm equipped with a torque sensor and a grip sensor:

Alright! We have covered the first category of sensors. If we take a look back, we have something like this:

There are a few we didn't cover, like flow sensors in water, or light sensors, photodiodes, etc... but they aren't used that much with robots.

What now? Let's look into the second category:

Proprioceptive Sensors

I was able to show off with my cool GIFs in the first part, wasn't I? Well, who's laughing now? Because I have to write about proprioceptive sensors, and truth is, I never worked that much with these types of sensors before.

Why?

Because a proprioceptive sensor is an internal sensor. It's something that measures the INSIDE of your robot or vehicle. For example, an odometer measures the rotations of your wheels to estimate how far you moved. An IMU measures your orientation and how YOU are moving. While exteroceptive sensors focus on the others, proprioceptive sensors focus on you (I mean, your robot).

I would list down 3:

- Position sensors

- Motion sensors

- Automotive sensors

Position sensors

Imagine you wake up in the middle of the night, heading for the toilet (oh this is going to be lame). You can't see, but you know you should walk 10 steps to get to the throne room. So you walk one step, two steps, three... until you reach 10 steps, and... no toilet? 🚽 You wave your arm, but nothing seems to be in the way. Where is the door? So you walk one more step, then two, then thr... BAM! Found the door. What happened here? You probably estimated your number of steps a bit inaccurately. Were you drinking last night?

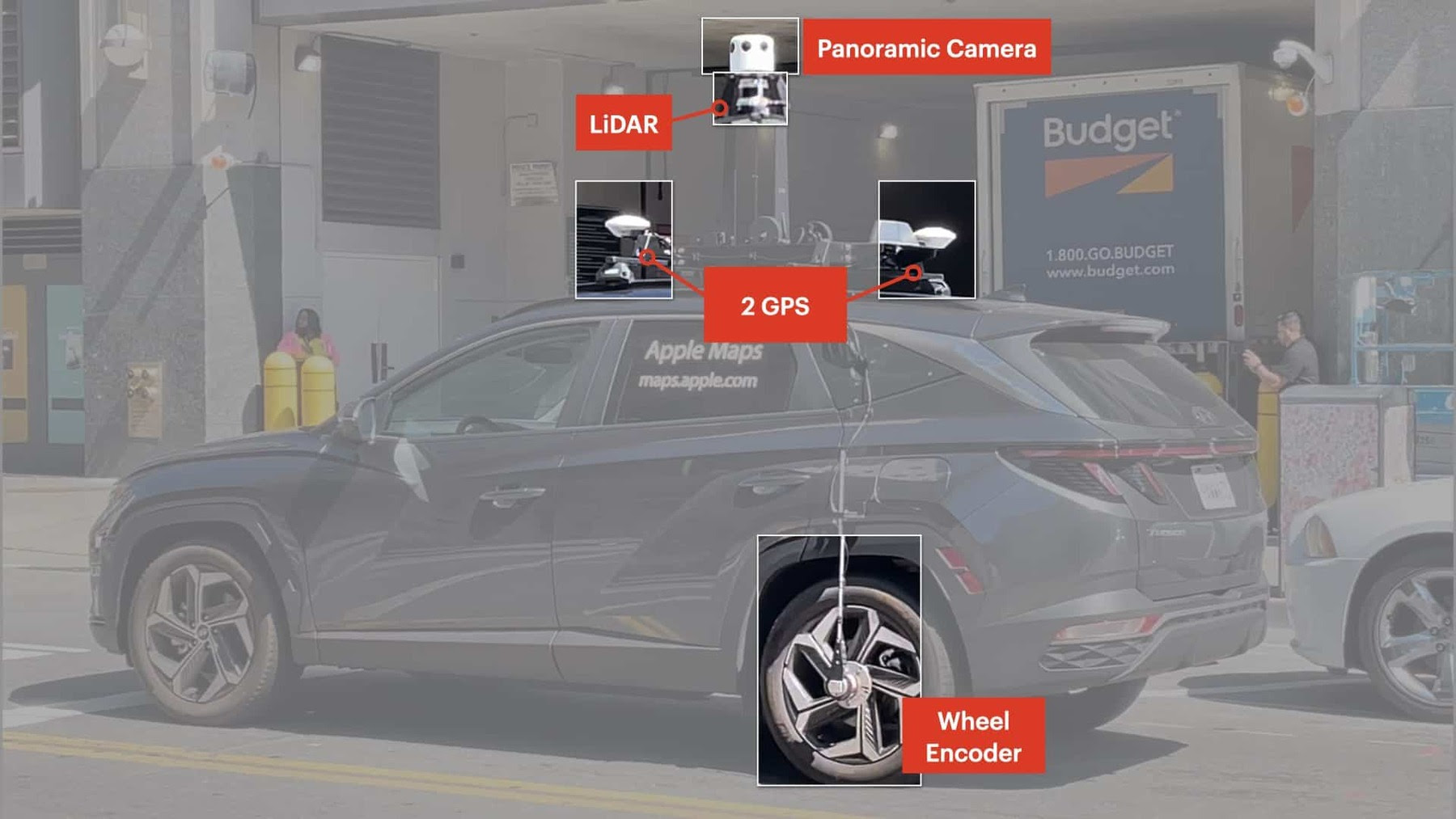

A wheel encoder is a bit similar: it measures how many rotations your wheels do, and thus can tell you how many meters your car or robot has travelled (with the same kind of small errors adding up over time). It's extremely useful in localization, SLAM, or similar tasks.
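As a minimal sketch, here is how encoder ticks turn into a distance, and how a small error in the assumed wheel size drifts over a long trip (just like miscounting your steps in the dark). The function name, the encoder resolution, and the wheel sizes are all illustrative assumptions.

```python
import math


def ticks_to_distance(ticks, ticks_per_revolution, wheel_diameter_m):
    """Distance travelled (meters) from a wheel encoder tick count."""
    revolutions = ticks / ticks_per_revolution
    # One revolution moves the vehicle forward by one wheel circumference.
    return revolutions * math.pi * wheel_diameter_m


# Hypothetical encoder with 1024 ticks per revolution on a 0.5 m wheel.
distance = ticks_to_distance(2048, ticks_per_revolution=1024, wheel_diameter_m=0.5)

# Same tick count, but the real wheel is 1% smaller than assumed
# (worn or under-inflated tire): the error grows with every rotation.
ticks_long_trip = 1024 * 1000
assumed = ticks_to_distance(ticks_long_trip, 1024, 0.5)
actual = ticks_to_distance(ticks_long_trip, 1024, 0.5 * 0.99)
print(distance, assumed - actual)
```

This accumulated drift is why wheel odometry is usually fused with other sensors rather than trusted on its own.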

If you look at an Apple Maps vehicle, you will see the wheel encoders plugged at the bottom:

Motion Sensors

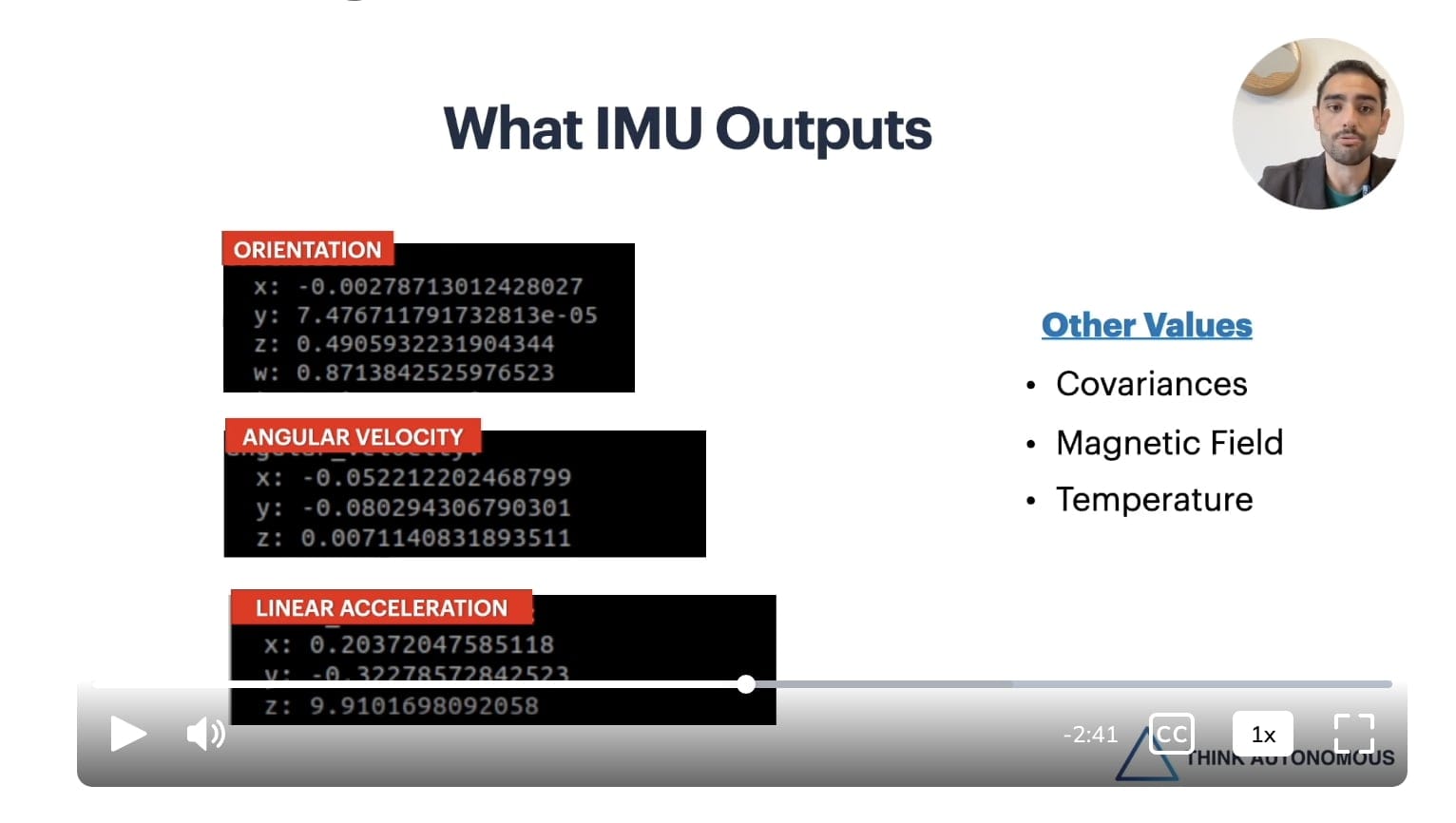

There's position, but there's also motion. I told you I wasn't super at ease with proprioceptive sensors, but I did work on odometry, Visual Inertial Odometry, LiDAR-Inertial SLAM, Localization, and all of these words. What do they have in common? They use sensors that calculate their motion. See in my course SLAM UNLEASHED how I present the IMU outputs:

An IMU is an Inertial Measurement Unit that outputs an orientation (as a quaternion), an angular velocity, and a linear acceleration. It does so by combining accelerometers and gyroscopes, which tell you how much you accelerated, how much you rotated to the left, etc... Many IMUs also report the temperature and even measure the Earth's magnetic field (with a magnetometer) to get an absolute heading reference, and thus know more exactly how you are moving.

Yes, if you used an IMU to record data in 2011, it's likely different than if you're doing it today; because the Earth's magnetic field has shifted since then, and thus, the magnetic heading you measure isn't the same. We're getting really precise here, and I do cover this in my robotic architect course already; but I wanted to point it out because it's an important part.

The other types of motion sensors can be standalone accelerometers, like the ones in your phone that count your 10,000 daily steps, or even gyroscopes that measure your angular velocity.
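To make the IMU outputs described above less abstract, here is a small sketch that reads a heading (yaw) out of an orientation quaternion and, separately, integrates a gyroscope's angular velocity over time. It assumes a (w, x, y, z) quaternion ordering and the common Z-Y-X (yaw-pitch-roll) convention; actual drivers may use a different ordering, so check your sensor's documentation. The function names are illustrative.

```python
import math


def quaternion_to_yaw(w, x, y, z):
    """Heading (rotation around the vertical axis, radians) of a unit quaternion."""
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def integrate_yaw_rate(yaw_rad, yaw_rate_rad_s, dt_s):
    """Dead-reckon the heading from a gyroscope reading over a time step."""
    return yaw_rad + yaw_rate_rad_s * dt_s


# A quaternion representing a 90 degree turn to the left around the Z axis.
half_angle = math.radians(90.0) / 2.0
yaw = quaternion_to_yaw(math.cos(half_angle), 0.0, 0.0, math.sin(half_angle))

# The same turn, rebuilt from 100 gyroscope samples at 100 Hz.
heading = 0.0
for _ in range(100):
    heading = integrate_yaw_rate(heading, math.radians(90.0), dt_s=0.01)
print(math.degrees(yaw), math.degrees(heading))
```

In real data the gyroscope has bias and noise, so the integrated heading drifts, which is where the magnetometer and sensor fusion come in.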

Finally:

Regular Automotive Sensors

I really didn’t get inspired for this one, but when you think about it, a self-driving car must use all the regular car sensors as well.

For instance, a pressure sensor in the tires monitors the air pressure and converts it into an electrical signal, ensuring optimal performance and safety while driving. I remember one day, I was driving on the highway, and I noticed that I had to apply some steering to the left to keep my car straight. Somehow, it drifted to the side… After a quick check, I realized I had a flat tire. Self-driving cars must do the same.

Along the same lines, temperature sensors keep tabs on critical components like the engine, brakes, and battery, preventing overheating or failure. Oil level sensors and coolant sensors ensure that the engine runs smoothly, while fuel level sensors provide crucial data for range estimation. They’re kind of like chemical sensors. Even the wheel speed sensors, which are integral to anti-lock braking systems (ABS) and traction control, contribute to the decision-making processes of a self-driving car by providing real-time feedback on vehicle dynamics.

Okay, so before seeing some examples, let’s do a brief recap of that part 2:

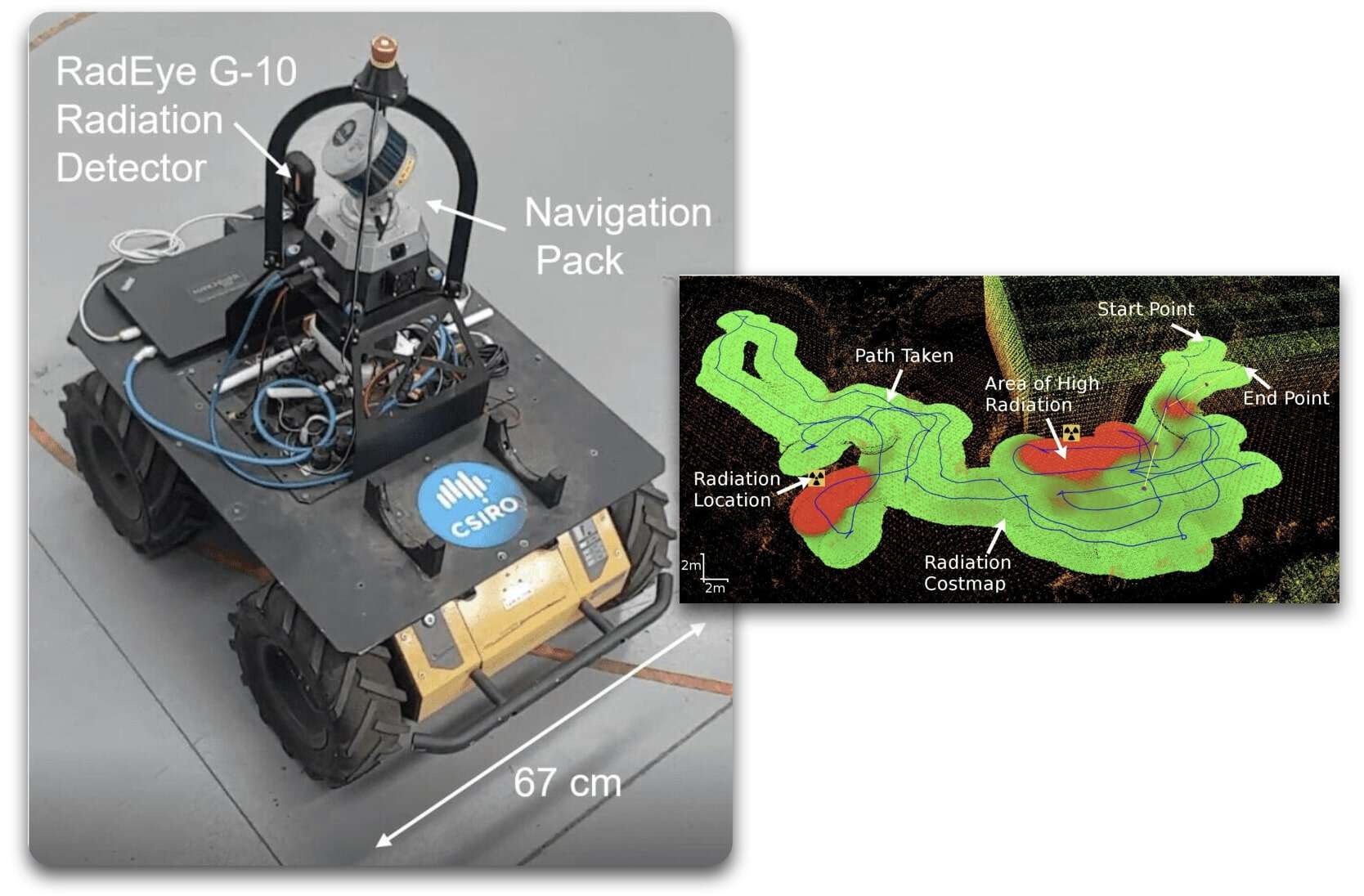

Example: How to SLAM a Nuclear Facility

A few months ago, I was chatting with a client from my 4D Perception course, who has his own 4D Perception startup — and he told me about one of his past experiences working with SLAM for a client from the nuclear field. "Wait... Nuclear ☢️?" I asked like a Looney Tunes. "Yes! Many SLAM applications are incredible when you have radioactive environments!" he answered before sharing a few details about it.

I got so intrigued by the idea that I started searching online, and of course... I found something! RNENuclear robots has written a paper and made this video about it (if there is no image — the video still works):

When you think about it, there are tons of places we humans can't go, and where robots equipped with the right types of sensors can work wonders. In this example, the robot works with gamma dosimeters, which are radioactivity measurement sensors. A common one is the ThermoFisher RadEye unit G10, which can cost thousands.

Example #2: Sensor Fusion of Multiple types of Sensors

I talked about SLAM here, but most robots don't localize using SLAM; they use localization algorithms. This is often a fusion of GPS and sensors like IMUs & odometers. It's all a fusion of sensors, often done using an Extended Kalman Filter. In my course ROBOTIC ARCHITECT, I have a complete lesson showing it, but for this example, let me just show you the gist:

The Extended Kalman Filter takes:

- GPS

- IMU

- Odometer

And outputs the localization on Google Maps.
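To show what such a fusion can look like, here is a minimal Extended Kalman Filter sketch in plain Python. It is not the implementation from the course: it assumes a 2D state (x, y, heading), uses the odometer's speed and the IMU's yaw rate in the prediction step, and corrects with GPS positions already converted to local meters. The class name, noise values `q` and `r`, and the simulated data are all made up for illustration.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


class SimpleEKF:
    def __init__(self, x=0.0, y=0.0, theta=0.0):
        self.state = [x, y, theta]
        self.P = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

    def predict(self, speed, yaw_rate, dt, q=0.01):
        """Motion model driven by odometer speed and IMU yaw rate."""
        x, y, theta = self.state
        self.state = [x + speed * math.cos(theta) * dt,
                      y + speed * math.sin(theta) * dt,
                      theta + yaw_rate * dt]
        # Jacobian of the (nonlinear) motion model: the "Extended" part.
        F = [[1.0, 0.0, -speed * math.sin(theta) * dt],
             [0.0, 1.0, speed * math.cos(theta) * dt],
             [0.0, 0.0, 1.0]]
        FPFt = matmul(matmul(F, self.P), transpose(F))
        self.P = [[FPFt[i][j] + (q if i == j else 0.0) for j in range(3)]
                  for i in range(3)]

    def update_gps(self, gps_x, gps_y, r=1.0):
        """Correct the prediction with a GPS position (x, y only)."""
        P = self.P
        # GPS observes x and y, so S = H P H^T + R is the top-left 2x2 of P plus R.
        s00, s01, s10, s11 = P[0][0] + r, P[0][1], P[1][0], P[1][1] + r
        det = s00 * s11 - s01 * s10
        S_inv = [[s11 / det, -s01 / det], [-s10 / det, s00 / det]]
        K = matmul([[P[i][0], P[i][1]] for i in range(3)], S_inv)  # Kalman gain
        innovation = [gps_x - self.state[0], gps_y - self.state[1]]
        self.state = [self.state[i] + K[i][0] * innovation[0] + K[i][1] * innovation[1]
                      for i in range(3)]
        I_KH = [[(1.0 if i == j else 0.0) - (K[i][j] if j < 2 else 0.0)
                 for j in range(3)] for i in range(3)]
        self.P = matmul(I_KH, P)


# Simulated drive: straight along x at 1 m/s for 5 s, one GPS fix per step.
ekf = SimpleEKF()
for step in range(50):
    ekf.predict(speed=1.0, yaw_rate=0.0, dt=0.1)
    ekf.update_gps(gps_x=(step + 1) * 0.1, gps_y=0.0)
print(ekf.state)
```

The split is the important design idea: fast internal sensors (odometer, IMU) push the estimate forward, and the slower, absolute GPS pulls it back whenever it drifts.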

Alright, we covered a lot. To finish this article, let me answer a critical question:

How much should you know about these sensors to become a robotics/self-driving car engineer?

At the bare minimum, you should have a good idea of what these sensors are, how much they're involved, and what they do (what we're seeing in this article). There are other ideas you could expand, such as active sensors — which emit their own waves (LiDARs, RADARs, ...) and passive sensors, which are not really sending anything to the environment.

For beginner positions, it's probably a good idea to learn ONE of these sensors well. For example, the camera, or the LiDAR, or the GPS. When you specialize in one of these, you are also learning the algorithms, and starting to tell the difference between each type, and thus you start building expertise.

For intermediate positions, I recommend understanding 2 or more sensors. If you understand the LiDAR AND the Camera, you can start doing some fusion of these for object detection projects. If you understand the GPS AND the IMU, same idea: you dive into Kalman Filters, and thus have some good value to add.

For advanced positions, the more the better. Experts have a good idea of the differences between sensor types. We discussed active and passive sensors, but there are also digital sensors vs analog sensors (one outputs discrete, digital data that computers can read directly, and the other outputs a continuous signal), or you could also dive into one sensor and master it really well.

Summary

- Sensors are categorized into two main types: exteroceptive sensors, which perceive the external environment, and proprioceptive sensors, which measure internal dynamics.

- Exteroceptive sensors are split into 3 categories: Perception, Localization, and Contact/Environmental sensors.

- Perception sensors are cameras, LiDARs, RADARs, thermal cameras, ultrasonics, infrareds, ... They work really well alone, but also fused together to provide an accurate understanding of the objects around.

- Localization sensors are sensors like GPS, RTK-GPS, or even workaround solutions like ultra-wideband (UWB).

- Environmental and contact sensors, such as audio sensors, humidity sensors, and gas sensors, help monitor the surroundings of autonomous vehicles and robots. In robotics, we also have all the contact sensors, which are used whenever the robot makes physical contact.

- Proprioceptive sensors, such as position sensors and motion sensors, monitor how the car moves over time. They can be used together with external sensors like GPS.

- We also use all the automotive sensors, like tire pressure, oil, etc...

Understanding and mastering different types of sensors is important; please refer to the part above to get the gist. There are a few types of sensors we didn't cover, because I wanted this article to focus on robotics & self-driving cars, but these are the main ones.

Next Steps

If you enjoyed this article on sensors, you might love this one on the different types of LiDARs. You may also be interested in this article on Sensor Fusion.
