Sensor Fusion Nanodegree Review

Back in 2020, I wanted to challenge myself and use Udacity's "1 MONTH FREE" offer to enroll in the Sensor Fusion Nanodegree. This course had been on my list for a few months.

I wasn't new to Udacity. Three years earlier, I was among the pioneers of the Self-Driving Car Nanodegree, and from then until 2019, I worked as a Self-Driving Car Engineer. I had experimented a lot with Computer Vision and Robotics algorithms, but I never really got to learn LiDAR & RADAR the "official way".

Here was my opportunity to improve my skills in this field. The offer ran for a few months, and I wanted to know:

Could I complete the Sensor Fusion Nanodegree in just one month?

In this article, you'll learn about my experience with the Nanodegree and how long it realistically takes. I'll tell you whether I think you should enroll, and give you alternatives if you don't want to.

The Sensor Fusion Nanodegree is Udacity's program to teach you about Self-Driving Cars & Sensor Fusion. In it, you'll learn how to work with sensors. Specifically, here is the structure:

- LiDARs (Light Detection And Ranging)

- LiDAR-Camera Fusion

- RADARs (Radio Detection And Ranging)

- RADAR-LiDAR Fusion

The program follows these parts in order, and I've also written articles on each of these topics.

Now, let's see what's in the course...

Part 1 - LiDAR

Content

The LiDAR part was the reason I enrolled. I wanted a proper, taught introduction to these sensors, since I had only encountered them on the job as a self-driving car engineer.

So here's what we learn:

- Introduction to LiDAR & Point Clouds

- Point Cloud Segmentation - How to tell the difference between the road and an obstacle

- Clustering Obstacles - How to separate obstacles and fit bounding boxes in 3D

- Working with real Point Cloud Data
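Here is a concrete version of the segmentation step. A classic way to separate the road from obstacles is RANSAC plane fitting. You repeatedly fit a plane through 3 random points and keep the plane with the most inliers as the road. Everything else counts as obstacles. Below is a minimal pure-Python sketch. It assumes the cloud is a list of (x, y, z) tuples. `ransac_plane`, `max_iterations`, and `distance_tol` are illustrative names of mine, not PCL's API. In PCL, `pcl::SACSegmentation` does this job for you.

```python
import math
import random


def plane_from_points(p1, p2, p3):
    """Plane (a, b, c, d) with a unit normal, ax + by + cz + d = 0; None if collinear."""
    v1 = [p2[i] - p1[i] for i in range(3)]
    v2 = [p3[i] - p1[i] for i in range(3)]
    # The normal is the cross product of two vectors lying in the plane.
    a = v1[1] * v2[2] - v1[2] * v2[1]
    b = v1[2] * v2[0] - v1[0] * v2[2]
    c = v1[0] * v2[1] - v1[1] * v2[0]
    norm = math.sqrt(a * a + b * b + c * c)
    if norm == 0.0:
        return None  # the three points are collinear: no unique plane
    d = -(a * p1[0] + b * p1[1] + c * p1[2])
    return a / norm, b / norm, c / norm, d / norm


def ransac_plane(points, max_iterations=100, distance_tol=0.2, seed=None):
    """Split points into (road, obstacles), keeping the plane with the most inliers."""
    rng = random.Random(seed)
    best_inliers = set()
    for _ in range(max_iterations):
        # Fit a candidate plane through 3 random points.
        i, j, k = rng.sample(range(len(points)), 3)
        plane = plane_from_points(points[i], points[j], points[k])
        if plane is None:
            continue
        a, b, c, d = plane
        # With a unit normal, |ax + by + cz + d| is the point-to-plane distance.
        inliers = {
            idx for idx, (x, y, z) in enumerate(points)
            if abs(a * x + b * y + c * z + d) <= distance_tol
        }
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    road = [p for idx, p in enumerate(points) if idx in best_inliers]
    obstacles = [p for idx, p in enumerate(points) if idx not in best_inliers]
    return road, obstacles
```

For example, a flat grid of ground points plus a few stacked points above it splits cleanly into a road set and an obstacle set. The iteration count trades runtime against the risk of never sampling 3 road points at once.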

You'll learn to use the Point Cloud Library (PCL), which is the OpenCV of LiDAR. For coding, you'll use Udacity's Virtual Machine, which includes over 150 hours of GPU time.

Teacher

The whole LiDAR part is taught by Aaron Brown, who now works on Self-Driving Cars at Mercedes Benz. He explains things very well and is easy to follow.

Project

The final project is about LiDAR obstacle detection in real-time.

We work with real point cloud data from the KITTI dataset, in C++.

The goal of the project is to process the point cloud and detect the obstacles in it.

In practice, LiDAR obstacle detection can take about 10 lines of code with PCL (Point Cloud Library).

The task in the project is to re-implement the segmentation and clustering algorithms yourself.

Reinventing the wheel won't help if you want to put this in a real self-driving car, but it's great for understanding.
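For the clustering half, re-implementing it roughly means Euclidean clustering. You grow a cluster by adding every point that lies within a distance tolerance of a point already in it, then fit a box around each cluster. This sketch makes simplifying assumptions. The neighbor search is brute force, where a real implementation would use a KD-tree. The boxes are axis-aligned. The names are mine. PCL's equivalent is `pcl::EuclideanClusterExtraction`, which has a cluster tolerance and min/max cluster sizes.

```python
import math
from collections import deque


def euclidean_clusters(points, distance_tol, min_size=1):
    """Group points connected by chains of neighbors closer than distance_tol."""
    visited = [False] * len(points)
    clusters = []
    for start in range(len(points)):
        if visited[start]:
            continue
        # Breadth-first flood fill from an unvisited seed point.
        cluster, queue = [], deque([start])
        visited[start] = True
        while queue:
            i = queue.popleft()
            cluster.append(i)
            for j in range(len(points)):
                # Brute-force radius search; a KD-tree would replace this O(n) scan.
                if not visited[j] and math.dist(points[i], points[j]) <= distance_tol:
                    visited[j] = True
                    queue.append(j)
        if len(cluster) >= min_size:  # tiny clusters are treated as noise
            clusters.append([points[k] for k in cluster])
    return clusters


def bounding_box(cluster):
    """Axis-aligned 3D box as (min_corner, max_corner)."""
    xs, ys, zs = zip(*cluster)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))
```

With `min_size=2`, an isolated point far from everything else is dropped as noise rather than reported as an obstacle.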

Here's the output I got:

Time & Difficulty

The estimated time is 22 days. It took me about 7-10 days to do it all, spending about 1-2 hours per day. I also pulled one big night of coding (which ended at 4 A.M.) to finish the project.

It's not very hard to code, but since it's the first project, getting used to the code and the library can take time. The review was fast (under one day), and I passed on my first submission.

I'd rate this part 5/5 since I truly loved working with LiDAR and Point Cloud Data.

The problem with the Sensor Fusion Nanodegree is that everything is only covered at a high level. That's why I built courses that explore several parts of LiDAR in more depth, such as 3D Object Detection & Segmentation, 3D Fusion, and also 3D Deep Learning.

Part 2 - Camera

Content

I didn't know what to expect from the camera course. Would it just tell me things I already knew?

This part of the Nanodegree is split into 4 lessons:

- Sensor Fusion & Autonomous Driving

- Camera Technology & Collision Detection

- Feature Tracking

- Camera and LiDAR fusion

The whole content is built around one problem: estimating the Time To Collision (TTC) using camera & LiDAR. I was pleasantly surprised, and loved that it was fresh content I hadn't seen explained anywhere else.
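Here is a sketch of what estimating TTC means, under a constant-velocity assumption that keeps both estimates to one line each. LiDAR measures the distance to the vehicle ahead directly. The camera doesn't measure distance. However, the vehicle's size in the image scales with 1/distance, so the ratio of keypoint distances between two frames plays the same role. The function names are my own.

```python
import math
import statistics


def ttc_lidar(d_prev, d_curr, dt):
    """TTC (s) from the distance to the preceding vehicle in two LiDAR frames.

    Constant velocity: v = (d_prev - d_curr) / dt, so TTC = d_curr / v.
    """
    closing = d_prev - d_curr
    if closing <= 0.0:
        return math.inf  # the gap is not shrinking: no collision predicted
    return d_curr * dt / closing


def ttc_camera(dist_ratios, dt):
    """TTC (s) from keypoint distance ratios between two camera frames.

    Each ratio is (distance between two keypoints on the vehicle in the current
    frame) / (the same distance in the previous frame). Image size scales as
    1/distance, so ratio = d_prev / d_curr and, with constant velocity,
    TTC = dt / (ratio - 1). The median limits the effect of bad keypoint matches.
    """
    ratio = statistics.median(dist_ratios)
    if ratio <= 1.0:
        return math.inf  # the vehicle is not getting bigger in the image
    return dt / (ratio - 1.0)
```

Both functions give the same answer for the same motion. A vehicle going from 10 m to 9.9 m in 0.1 s yields a TTC of 9.9 s either way. In practice, a single outlier LiDAR point can wreck `d_curr`, so a robust statistic of the closest points is safer than the raw minimum.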

Teacher

The teacher is Timo Rehfeld, a Sensor Fusion Engineer at Mercedes Benz. He comes across as very professional and explains the problems well.

Projects

The course has 2 projects:

- Feature Tracking (camera only)

- Time To Collision (camera + LiDAR)

The first project is about feature tracking. Here, you'll use OpenCV to detect keypoints like corners and edges and track them through a sequence of images. It's a bit similar to what I taught you in the MASTER OBSTACLE TRACKING course, but you track keypoints instead of Bounding Boxes.

To understand feature tracking, you first have to learn about feature detection: keypoint detectors and descriptors.
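Detectors find keypoints. Descriptors turn the patch around each keypoint into a vector you can compare between frames. For binary descriptors (BRIEF, ORB, BRISK...), matching comes down to Hamming distance. Here's a minimal sketch of brute-force matching with Lowe's ratio test. It assumes descriptors are stored as Python ints, and the function names are mine. For real images, OpenCV's `cv2.BFMatcher` with `cv2.NORM_HAMMING` and `knnMatch(..., k=2)` does the same job.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_ratio_test(desc_prev, desc_curr, ratio=0.8):
    """Return (prev_idx, curr_idx) pairs that pass Lowe's ratio test.

    For each previous descriptor, find its two nearest current descriptors and
    keep the match only if the best one is clearly better than the runner-up.
    """
    matches = []
    if len(desc_curr) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, d in enumerate(desc_prev):
        ranked = sorted(range(len(desc_curr)), key=lambda j: hamming(d, desc_curr[j]))
        best, second = ranked[0], ranked[1]
        if hamming(d, desc_curr[best]) < ratio * hamming(d, desc_curr[second]):
            matches.append((i, best))
    return matches
```

The ratio test drops ambiguous keypoints: when two candidates are almost equally close, no match is reported rather than a likely wrong one.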

I didn't like this part because it goes too deep into the very basics of Computer Vision. While it can be interesting to know, studying 10 different ways to detect keypoints felt really redundant (and long).

The second project is about LiDAR-camera fusion and Time To Collision estimation. Basically, we try to associate the LiDAR bounding box with the camera keypoints, and make sure that the points inside a bounding box aren't associated with another vehicle.

This part was better taught because it had a clear purpose. However, it wasn't always easy to follow, and again, the content was very long, so I sometimes had to fast-forward.

Time & Difficulty

The estimated time is 21 days. This part was interesting at first but then got really annoying. It was very (very) long. Some lessons took over an hour to complete and didn't feel that useful.

The first project required testing multiple keypoint detection and matching methods on 10 images.

At first, I skipped it and went with the default ones. I didn't pass.

Then I did it partially and still didn't pass. I had to try 10 detection methods with 10 matching methods on 5 series of images to pass the project, which is 10 x 10 x 5 = 500 tests.

On top of that, I found 2 cases where a combination didn't work because of the specifics of the algorithms, and I had to handle them with an if statement. I also had to record the computation time for every test.

👉 The testing part of this project took me hours, even after I wrote a script to automate it.
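If you take this project, automate the test grid from day one. Here's a minimal sketch of such a harness. It runs every detector/matcher/sequence combination, skips the incompatible ones, times each run, and dumps the results as CSV. Every method name and `run_pipeline` are hypothetical placeholders you'd replace with your own OpenCV calls.

```python
import csv
import io
import itertools
import time

# Hypothetical placeholders: swap in your real detector / matcher names.
DETECTORS = [f"detector_{i}" for i in range(10)]
MATCHERS = [f"matcher_{i}" for i in range(10)]
SEQUENCES = [f"sequence_{i}" for i in range(5)]


def run_pipeline(detector, matcher, sequence):
    """Hypothetical stand-in for detect + describe + match on one image sequence."""
    return 0  # e.g. the number of matched keypoints


def benchmark(detectors, matchers, sequences, compatible=lambda det, m: True):
    """Run and time every (detector, matcher, sequence) combination."""
    rows = []
    for det, m, seq in itertools.product(detectors, matchers, sequences):
        if not compatible(det, m):
            continue  # the "if" that skips combinations that can't run together
        start = time.perf_counter()
        n_matches = run_pipeline(det, m, seq)
        rows.append({
            "detector": det, "matcher": m, "sequence": seq,
            "matches": n_matches,
            "time_ms": (time.perf_counter() - start) * 1000.0,
        })
    return rows


def to_csv(rows):
    """Serialize results to CSV text, ready to paste into the write-up."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

The `compatible` hook keeps the special cases in one place instead of scattering them through the loop.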

I don't know how to feel about this part.

It felt very long, and by the end I was just waiting for it to be over.

However, the fusion part was interesting and easy to understand, and so was the Time To Collision estimation.

You need to learn this to work as a Sensor Fusion engineer. I'm not sure keypoint detection is a standard method everywhere, though. I never saw it used at my company.

I'd rate it 3/5.

Part 3 - RADAR

Content

RADAR is an essential component in self-driving vehicles because it can measure velocity directly. I always knew that, but never really got into the details of how the Doppler effect works.
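The Doppler relation itself is short. The wave travels to the target and back, so the frequency shift is doubled: f_D = 2v / λ, hence v = f_D λ / 2. Here's a sketch that assumes a 77 GHz carrier, a common automotive radar band. The function name and the sign convention (positive means approaching) are my own assumptions.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def radial_velocity(doppler_shift_hz, carrier_hz=77e9):
    """Relative radial velocity (m/s) from a measured Doppler shift.

    Round trip doubles the shift: f_D = 2 * v / wavelength, so
    v = f_D * wavelength / 2. Positive = approaching (assumed convention).
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return doppler_shift_hz * wavelength / 2.0
```

At 77 GHz the wavelength is about 3.9 mm, so a 20 m/s closing speed produces a shift of roughly 10 kHz.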

Here's the curriculum:

- Introduction to RADAR

- RADAR Calibration

- RADAR Detection

The content is unique. I haven't seen many RADAR courses out there.

If you're interested in RADAR, I'd take the course for this part alone.

Coding is done in MATLAB, which couldn't be easier. The focus is on the technique and how to make sense of a wave, not on the code. However, I have to say that a few years after taking this course, I couldn't build on it when working on RADARs again: the project is in MATLAB, and thus difficult to reuse. It's like a school project you can't turn into reality.

Teacher

The course is taught by Abdullah Zehdi, who I think also taught in the Self-Driving Car & Robotics Nanodegree programs.

Project

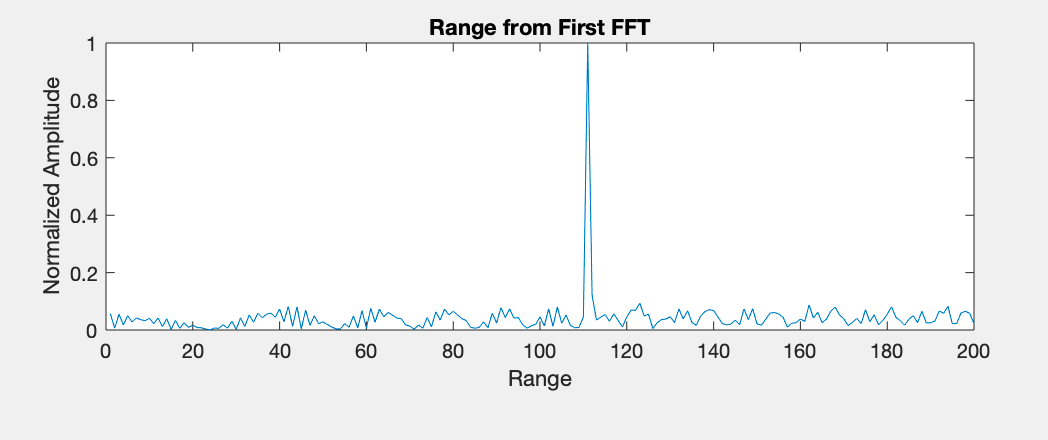

The project is about RADAR obstacle detection.

RADAR's output is different; it looks like this.

The concepts were clearly explained, but unfortunately, I didn't spend much time redoing all the math. The project took me a few hours, and the review was fast.
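A standard way to detect targets in a radar's range (or range-Doppler) map is CFAR (Constant False Alarm Rate) thresholding. This is my assumption about the kind of technique involved, not a description of the project spec. Here's a minimal 1D cell-averaging CFAR sketch. It estimates the local noise level from training cells on both sides of each cell under test, skips the guard cells next to it, and flags the cell if it beats that adaptive threshold plus an offset. The window sizes and offset are illustrative values, not the project's.

```python
import math


def ca_cfar(signal_db, num_train=8, num_guard=2, offset_db=6.0):
    """1D cell-averaging CFAR on a power signal in dB; returns a 0/1 detection list.

    Edge cells without a full training window are left as 0 (no detection).
    """
    n = len(signal_db)
    half = num_train + num_guard
    detections = [0] * n
    for cut in range(half, n - half):
        # Training cells on both sides, excluding the guard cells around the CUT.
        train = (list(signal_db[cut - half: cut - num_guard])
                 + list(signal_db[cut + num_guard + 1: cut + half + 1]))
        # Average noise in linear power, then convert back to dB.
        noise_lin = sum(10 ** (s / 10) for s in train) / len(train)
        threshold_db = 10 * math.log10(noise_lin) + offset_db
        if signal_db[cut] > threshold_db:
            detections[cut] = 1
    return detections
```

Because the threshold follows the local noise floor, a strong return stands out even when the overall noise level changes across the map.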

Time & Difficulty

This part is estimated at 14 days. Because of the camera part, I was 5 days away from the end of the free month and still had 2 projects to complete. However, to my surprise, it was one of the easiest parts to code.

The course and project together took me one day (the last Sunday). I liked this part. However, you'll need more to really know how to use RADAR in real life.

It was more of an introduction than a concrete experience. MATLAB made it easy, but less realistic.

I'd rate this 3/5 too.

Part 4 - Kalman Filters

One good thing: this section is identical to the one in the Self-Driving Car Nanodegree, which I had already completed, so I graduated from it on Day 1.

I'll still walk you through it...

Content

In this course, you'll learn about Kalman Filters, the algorithms used to fuse data from LiDAR and RADAR.

You'll learn:

- Kalman Filter

- LiDAR-RADAR Fusion with Kalman Filters

- Unscented Kalman Filters
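The predict/update cycle at the heart of all of this fits in a few lines. Below is a 1D sketch that assumes a constant-velocity model and a sensor that measures position only (roughly the LiDAR case). The class name and noise values are illustrative. A real LiDAR-RADAR tracker would differ in three ways. It would use a 2D state (px, py, vx, vy) and a matrix library. It would also run the update step with each sensor's own measurement model as its data arrives.

```python
from dataclasses import dataclass


@dataclass
class KalmanCV1D:
    """Constant-velocity Kalman filter with state [position, velocity]."""
    p: float = 0.0          # position estimate (m)
    v: float = 0.0          # velocity estimate (m/s)
    P: list = None          # 2x2 state covariance
    accel_var: float = 1.0  # process noise: variance of unmodelled acceleration
    meas_var: float = 0.01  # position measurement noise variance

    def __post_init__(self):
        if self.P is None:
            self.P = [[1000.0, 0.0], [0.0, 1000.0]]  # very uncertain start

    def predict(self, dt):
        # x' = F x with F = [[1, dt], [0, 1]]
        self.p += self.v * dt
        (a, b), (c, d) = self.P
        q = self.accel_var
        # P' = F P F^T + Q, with Q from a white-noise acceleration model
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt**4 / 4, b + dt * d + q * dt**3 / 2],
            [c + dt * d + q * dt**3 / 2, d + q * dt**2],
        ]

    def update(self, z):
        # H = [1, 0]: the sensor measures position only.
        (a, b), (c, d) = self.P
        y = z - self.p            # innovation
        s = a + self.meas_var     # innovation covariance S = H P H^T + R
        k0, k1 = a / s, c / s     # Kalman gain K = P H^T / S
        self.p += k0 * y
        self.v += k1 * y
        # P = (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]
```

Feeding it positions of a target moving at 2 m/s, the velocity estimate locks onto 2 m/s after a couple of updates, even though velocity is never measured directly.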

The official course description states that there is a week on Extended Kalman Filters, but that week wasn't in the course. There were 3 or 4 videos on the topic, not a full week.
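Extended (and Unscented) Kalman Filters matter here because of how the two sensors report data. In the usual LiDAR-RADAR setup, LiDAR gives a position in Cartesian coordinates, which is linear in the state. RADAR reports range, bearing, and range rate. The sketch below shows that radar measurement function for a state (px, py, vx, vy). The function name is mine. The square root, atan2, and division make it nonlinear, which a plain Kalman Filter can't handle. The EKF linearizes it with a Jacobian, and the UKF propagates sigma points through it.

```python
import math


def radar_measurement(px, py, vx, vy):
    """Map a Cartesian state to what a radar reports: (range, bearing, range rate)."""
    rho = math.hypot(px, py)            # range (m)
    phi = math.atan2(py, px)            # bearing (rad)
    if rho < 1e-6:
        raise ValueError("range rate is undefined at the sensor origin")
    rho_dot = (px * vx + py * vy) / rho  # radial velocity (m/s)
    return rho, phi, rho_dot
```

A target moving purely sideways (perpendicular to the line of sight) has zero range rate, which is also why RADAR alone can't give you the full velocity vector.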

Teacher

The course is taught by Sebastian Thrun and the Mercedes Benz team.

The Sebastian Thrun part is taken from the free course Artificial Intelligence for Robotics.

Project

The project is about predicting where vehicles will go in the future.
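Predicting where a vehicle will go requires a motion model. A common choice for UKF-based tracking is CTRV (Constant Turn Rate and Velocity). I'm assuming that model here rather than quoting the project spec. Here is its noise-free prediction step as a sketch. `predict_ctrv` is my own name, and a real UKF would push sigma points through this function and add process noise.

```python
import math


def predict_ctrv(px, py, v, yaw, yaw_rate, dt):
    """Predict (px, py, v, yaw, yaw_rate) dt seconds ahead with a CTRV model.

    Speed v and yaw_rate stay constant, so the vehicle follows a circular arc,
    or a straight line when yaw_rate is ~0. Process noise is ignored here.
    """
    if abs(yaw_rate) > 1e-6:
        px_new = px + v / yaw_rate * (math.sin(yaw + yaw_rate * dt) - math.sin(yaw))
        py_new = py + v / yaw_rate * (math.cos(yaw) - math.cos(yaw + yaw_rate * dt))
    else:
        # Straight-line limit, which avoids dividing by ~0.
        px_new = px + v * math.cos(yaw) * dt
        py_new = py + v * math.sin(yaw) * dt
    return px_new, py_new, v, yaw + yaw_rate * dt, yaw_rate
```

For example, a vehicle heading along x at 1 m/s while turning left at π/2 rad/s ends up, after one second, at (2/π, 2/π), facing along y.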

Here's the output.

I loved this part when I first learned about Kalman Filters. I've written 3 articles on the topic and still feel I have more to learn. Compared to the Self-Driving Car Nanodegree version, the project is much more visual, which makes it good to show to a recruiter.

The review took a long time (over 2 days), and I was afraid I'd have to pay $399 just to get the review and the diploma. But I didn't: I finally got it 2 days before the end.

Time & Difficulty

The whole part is estimated at 29 days, the longest in the program. However, it took me about an hour to complete, since I had already done the whole course and project in the Self-Driving Car Nanodegree.

If you've never done this, it will take you about 2 intense weeks. The course is very good. That said, I remember it wasn't easy to fully understand Kalman Filters from the course content alone, and I had to look for other sources.

I'd rate this 4/5.

Conclusion: Should you enroll?

If we add up the individual grades I gave, we get:

- LiDAR: 5/5

- Camera: 3/5

- RADAR: 3/5

- Kalman Filters: 4/5

That's a good 15/20, or 75/100 if you're used to a US-style grading scale!

What I liked about this Nanodegree

The good thing about this Nanodegree is that it gives strong fundamentals on Sensor Fusion. It's taught by field experts, and it's really a pleasant experience to follow. It's also in C++, and the projects are interesting.

I don't think you can complete it in a month, and in fact, I wouldn't recommend trying. Something I don't like about Udacity's model is that it pushes you to rush: if you take your time, it costs you more money. I could "trick" the system because I had already completed one module, but I wouldn't like being pressured to finish.

If you have never been introduced to autonomous driving, I don't recommend enrolling yet. Instead, I'd recommend taking my short (3h) and affordable ($19) introduction to self-driving cars, which also gives you the complete system I use with my engineers who want to join the field.

Rather than jumping in too fast, you'll know exactly what to learn and what to skip.

👉🏼Here is my introduction if you want to learn more.

Is the program up to date?

Another drawback of Udacity is that its programs aren't updated often. The Sensor Fusion Nanodegree was built in 2018. In robotics, some concepts are "foundations" and are therefore needed no matter which year you learn them.

This course is full of them, which means that although the content isn't updated, it's more "traditional" than outdated; it just isn't cutting-edge. And it likely won't be updated any time soon.

I would recommend this program if you're used to Udacity, feel good about them, and want to enjoy a "long" experience in Sensor Fusion. It's really a good program. Here is the link to enroll in the Sensor Fusion Nanodegree.

On the other hand, if you want a more "cutting-edge" experience that's closer to the field, I would recommend my courses. They're updated every year, go more in depth (because they're more specialized), and are much shorter (just a few hours per course). They're also in Python, which, depending on how you look at it, is either an advantage or a drawback. Here is the link to explore my collection of cutting-edge courses.

No matter what you end up doing, enrolling in a Sensor Fusion course is not a mistake. Sensor Fusion is one of the most important topics in Robotics and Computer Vision, and I can't recommend learning about it enough.

In fact, I recently wrote a complete post sharing a curriculum for aspiring sensor fusion engineers; you can read it here.