Point Cloud Registration: Beyond the Iterative Closest Point Algorithm

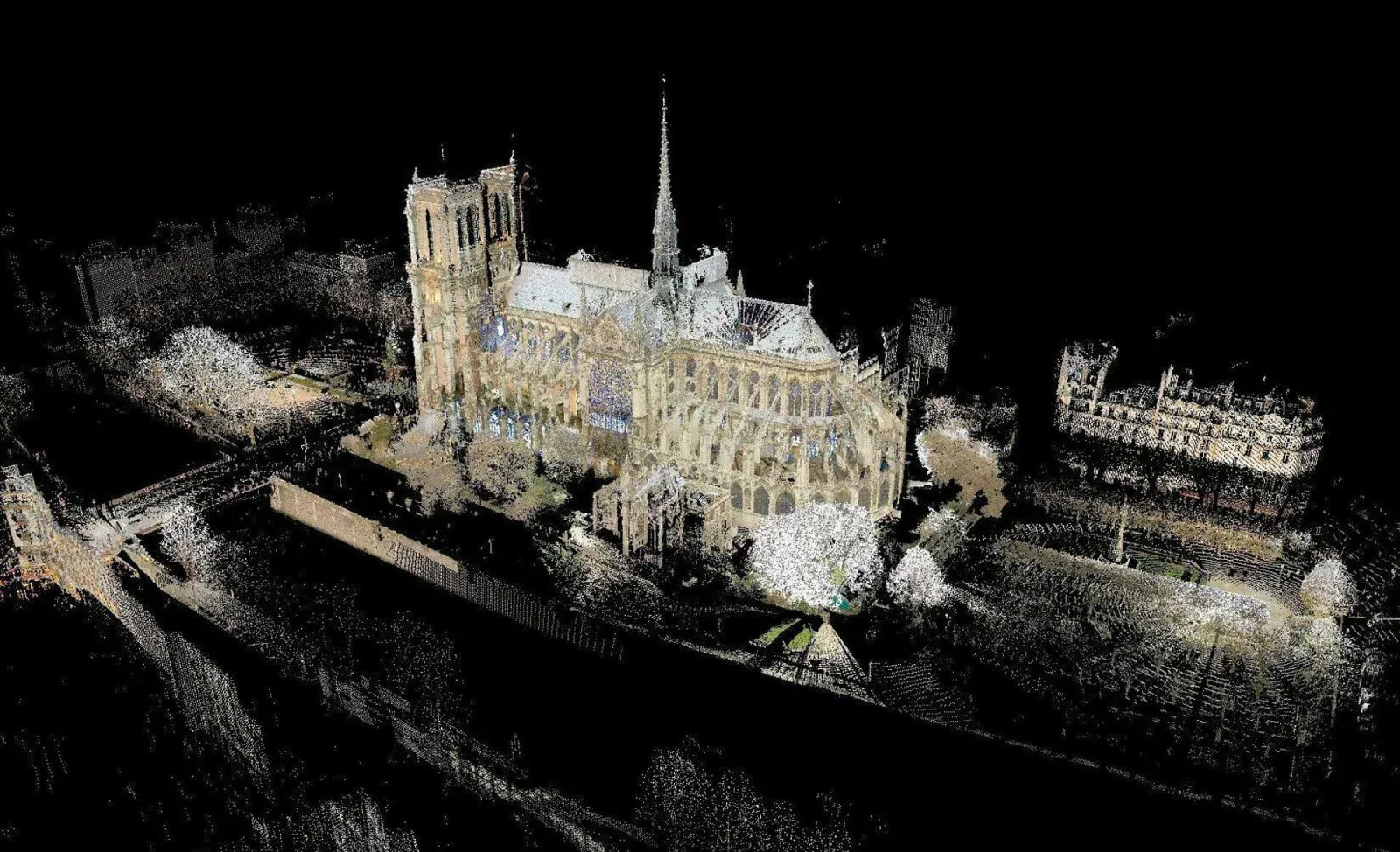

I was walking in Paris, doing shopping with my girlfriend, when suddenly, I saw it. A giant, dark, and somewhat orange smoke invading the sky. People started looking up. What was happening? This is when I looked at a notification on my phone, and saw the news: Notre Dame was burning.

I couldn't believe what I was seeing, the nearly thousand years old cathedral was on fire, and even after hours, the fireman weren't able to make the chaos stop. Days passed, and it was seen as a national tragedy everywhere in France. That is, until the President declared that over 850M$ were donated by the French citizen, and this to help rebuild it.

But how do you rebuild Notre Dame exactly as it was? It turns out that an art professor named Andrew Tallon considered the need to make a digital copy of the cathedral back in 2010, and using a 3D laser scanner, he spent 5 days capturing the monument from 50 different angles, building a set of over 50 point cloud files.

But how did they combine all these point clouds into a single file of a billion points? It turns out, the engineers working on it were able to "align" all 50 point clouds together, and create one piece of digital copy that was accurately representing the monument using a technique called Point Cloud Registration.

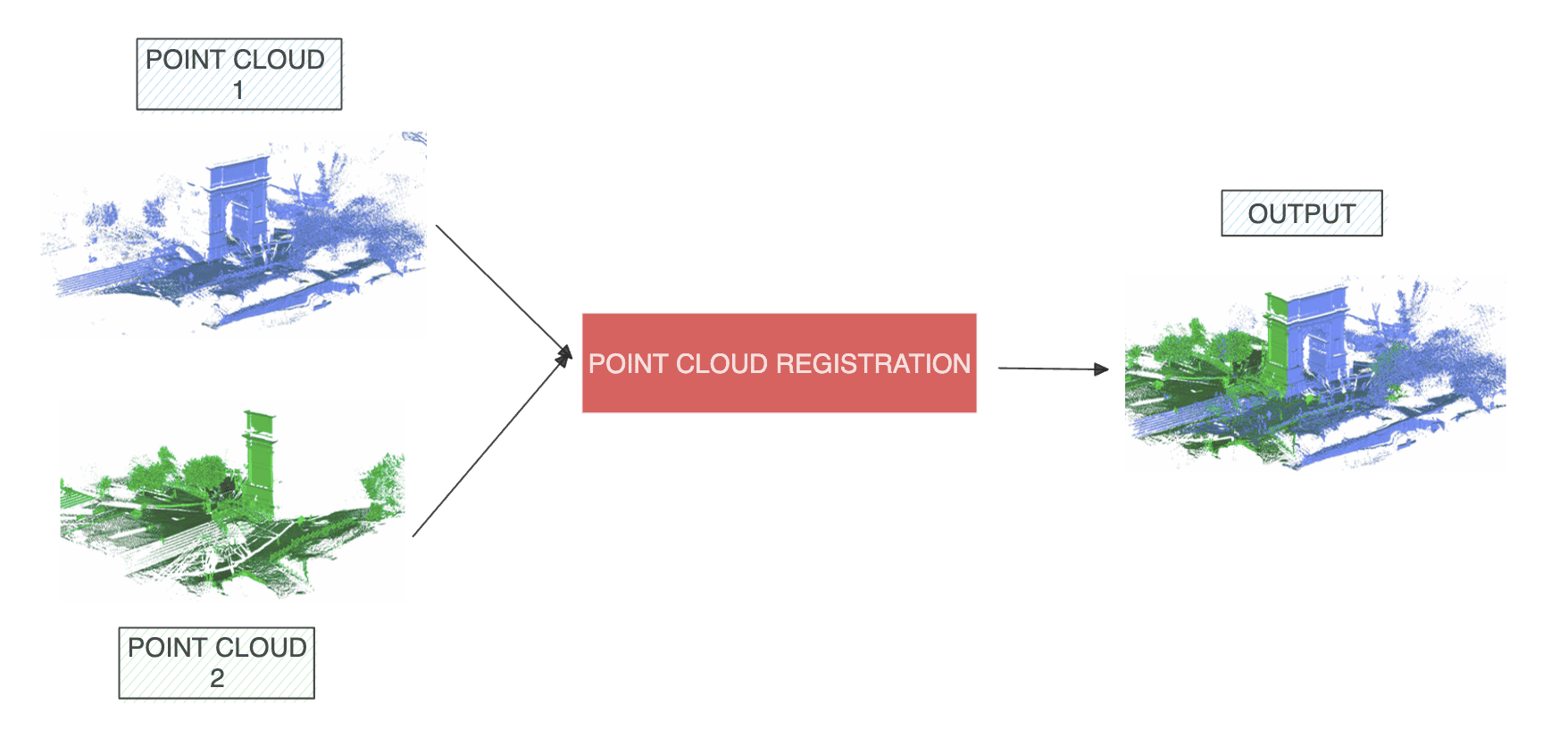

Point Cloud Registration is the task of aligning two sets of points together, and it can be used in cases like this one, but also in autonomous driving, robotics, cinema, and many more...

In this article, I'm going to give you an overview of how it works, what are the different techniques, and how to use it to solve problems related to autonomous driving (my thing).

What is Point Cloud Registration?

Every time you have a point cloud, whether made by a LiDAR, an RGB-D camera, the Face ID scanner of your iPhone, or anything else, you're going to want to process it. Through this process, you will want to find objects in 3D, build a map of the world, combine sensors, and do all sorts of things with the point cloud data.

But what happens when you have multiple point clouds? What if you have, for example, two LiDARs mounted on top of a self-driving car, looking at a street? or what happens when you're doing SLAM, and want to implement loop closure?

The problem is known as point cloud registration, or point set registration, and this is going to be our topic today.

How does Point Cloud Registration Work?

Imagine we have two point clouds we want to align. The real vocabulary we should use first is "registration". This means that we're going to take one point cloud, the master point cloud, and we will "register" the second point cloud to the first one.

First, I want to acknowledge that it's not "easy". It's much more than just combining two point clouds, because there is likely an overlap, some LiDARs may have more points than others, they're not even in the same coordinate system, and so this entire thing needs "techniques". If you want to have a robust registration process, you'll need more than just combining point clouds.

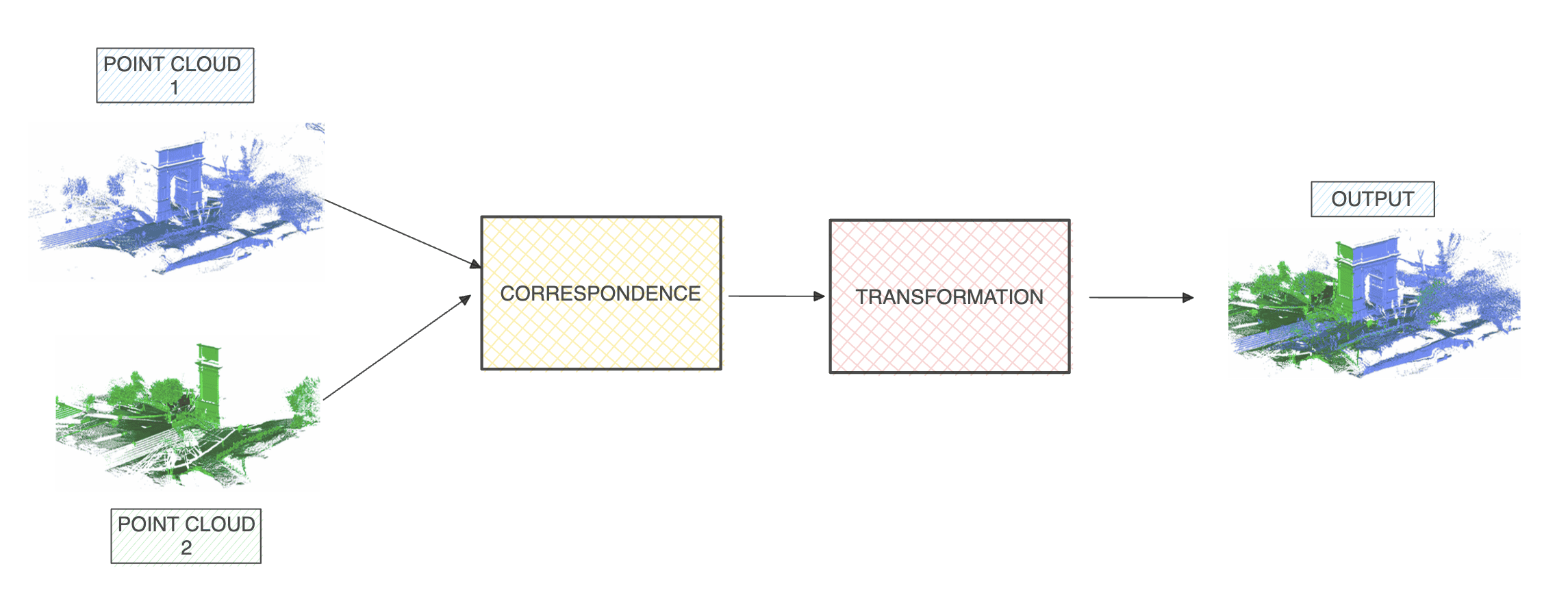

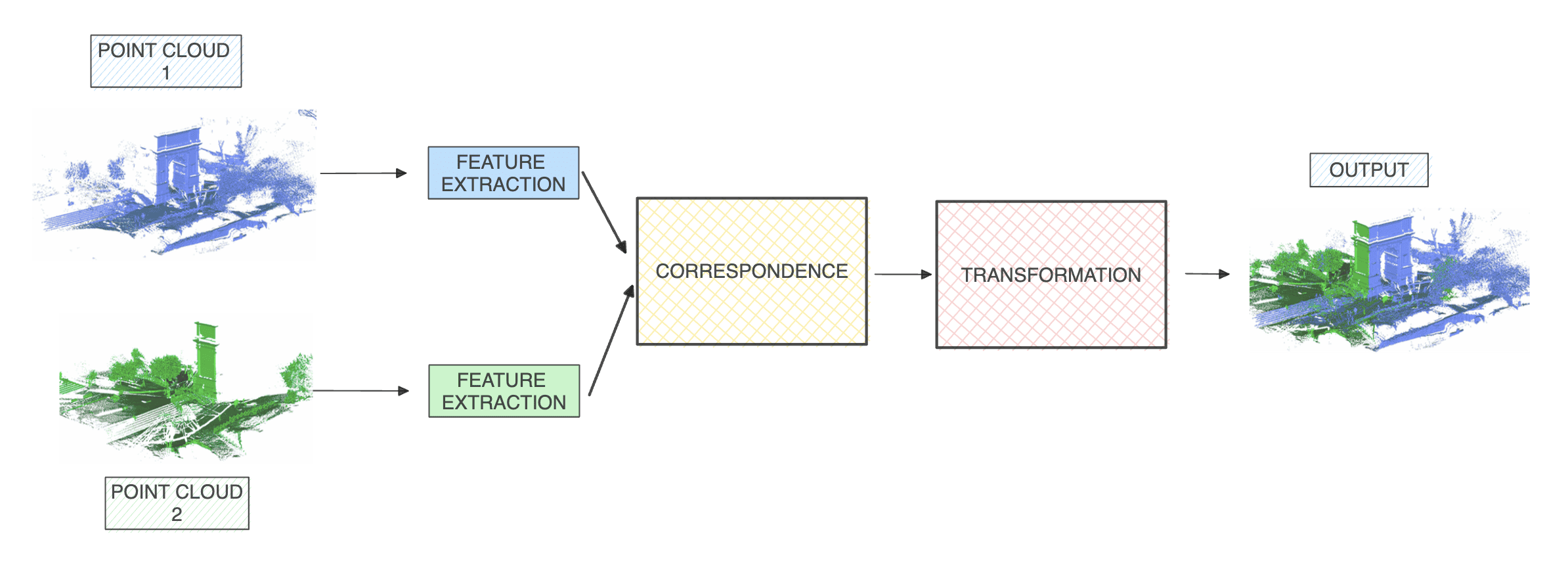

In reality, it's often the story of 2 processes: correspondence and transformation.

So given a point in a cloud, we want to find its corresponding point in the other cloud, and then estimate a transformation matrix to go from one point to another.

Correspondence

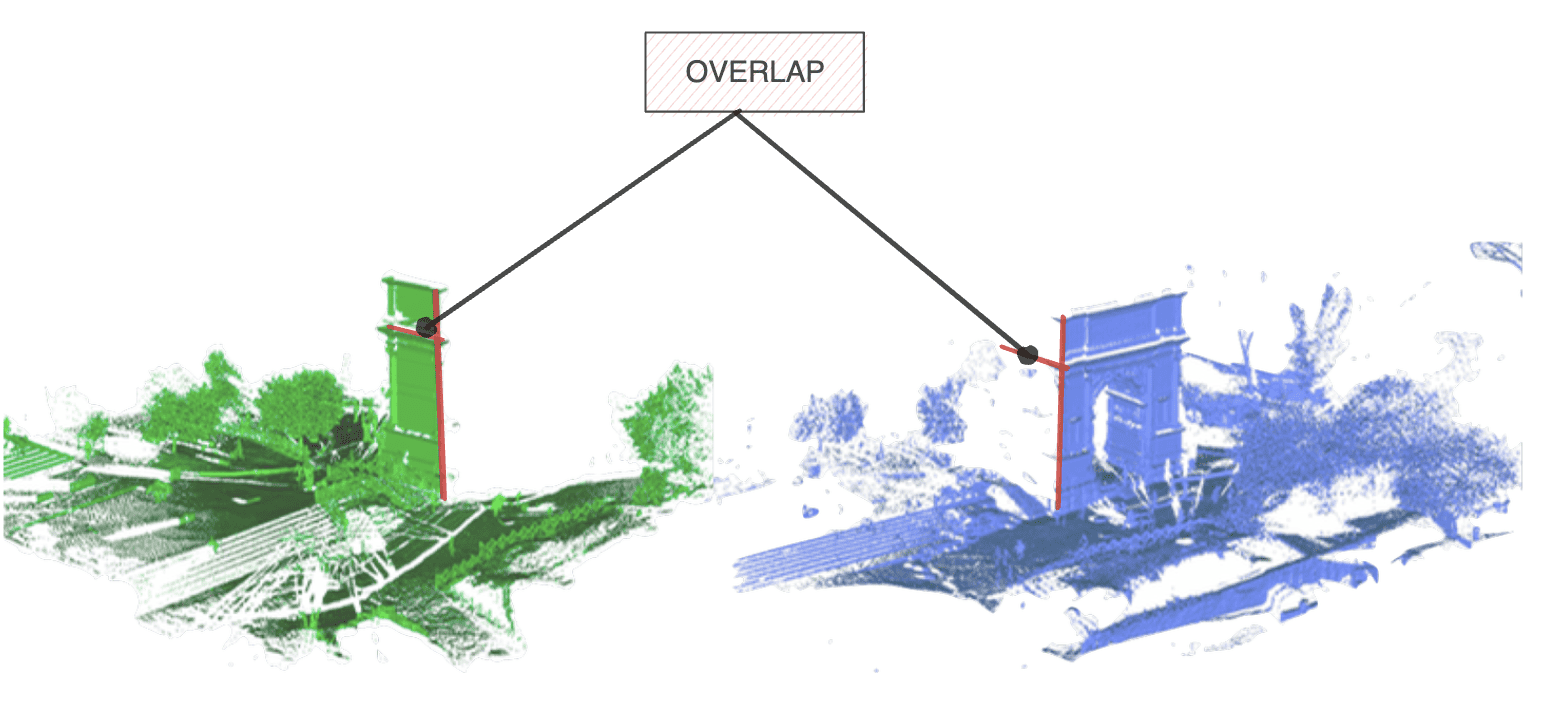

The idea of correspondence is, given a point in target point cloud A, what is the corresponding point in cloud B? You can spend a lifetime searching in a billion points, or you can go over various techniques that will calculate a distance between the point coordinates and pick the lowest cost. This can be an euclidean distance, a point-to-plane distance, or anything like that.

Notice, for example, how these two point clouds overlap:

This overlap is actually useful, because thanks to it, we will understand how our two point clouds work together. The first task is here, we want to estimate all the points that are redundant in both clouds.

Transformation

Once we have these points, we will want to do a transformation from one cloud to the other. A transformation is either:

- A rigid transformation (Translation & Rotation)

- A non-rigid transformation (Scaling, shear mapping, ...)

So how do you do these 2 steps? How do you make sure the points are indeed the right ones, and the alignment is correct?

This is where 3 techniques used and documented:

- Optimization Based Techniques

- Feature Based Techniques

- End-To-End (Deep Learning)

Ready?

Optimization Based Techniques for Point Cloud Alignment

The first category is based on optimization. Optimization means that we will do an iterative process, and that through this process, we will try several correspondences and transformations, and iteratively select the best one.

There are several algorithms to solve these two steps, from the super well known Iterative Closest Point (ICP), to Gaussian Mixture Models (GMM), to even Graph Based Approaches. But no matter the approach, we're always going in an iterative process:

Let's take, for example, the Iterative closest point (ICP).

Iterative Closest Point

The process is as follows:

- For each point in the source point cloud, match the closest point in the reference point cloud (by doing an euclidean distance for example).

- Estimate a transformation (rotation and translation) using a root mean square point to point distance metric minimization technique which will best align each source point to its match found in the previous step. This step may also involve weighting points and rejecting outliers prior to alignment.

- Iterate (continue with other points)

Notice how this iterative closest point is doing nothing but doing an euclidean distance calculation, and then a transformation.

So this process is simple, and efficient, especially if you have the exact same two LiDARs, with similar point clouds, some overlap, etc...

Feature Based Techniques for robust point matching

The main issue with optimization based approaches is that they're going to rely solely on distances or "basic" information. This is why we could also use features. Rather than matching "points" based on their coordinates, we match them based on their features.

Now, "feature" based involves that a point cloud has features. And yes they do! Consider them, we have:

- The XYZ Position of a point

But now think bigger, for a point, we can also have:

- The color information, if from an RGB-D camera

- The Intensity of the point, if provided by the LiDAR

- The Reflectance Information, if provided by the LiDAR

And in reality, we can have many more. A point cloud can have many types of featurs, such as surface normals, corners, key points, etc... In my Point Clouds Conqueror course, I teach 12 types of features you can use in a point cloud, and show you techniques, like the FPFH (Fast Point Feature Histograms) algorithm, to calculate them. It looks like this:

There's also the Deep Learning route. In my Deep Point Clouds course, I teach Deep Learning processes to find features using neural networks. This would imply algorithms such as the "Deep Closest Point" (paper), because you're in the feature space.

So imagine we have these features, what then? Then, we do:

- Correspondence: We calculate a distance between the features

- Transformation: We estimate a translation & rotation to go from cloud A to cloud B. Algorithms like RANSAC could also be used here, since their job is, besides finding outliers, to find planes and their coordinates.

Finally:

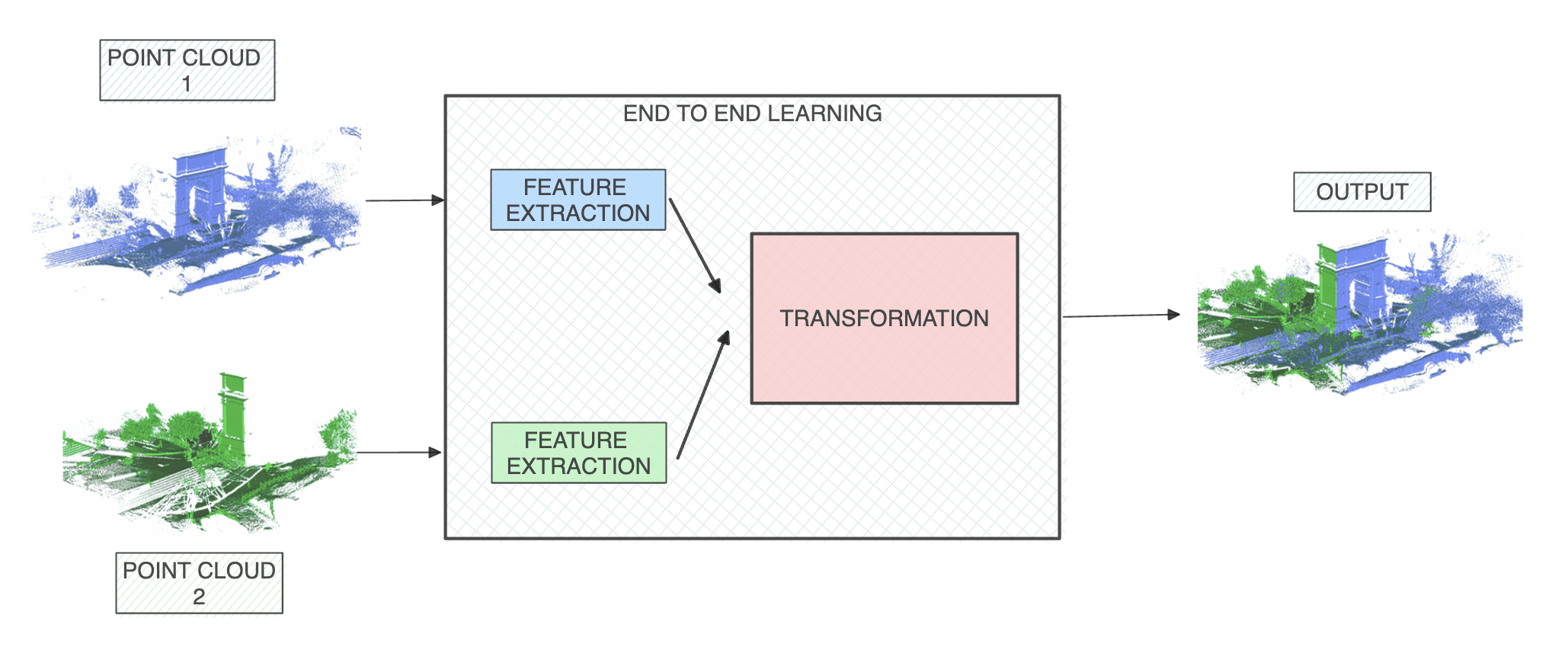

End-To-End Learning for efficient point cloud registration

The main limitation of the first two point cloud registration methods is that they always rely on us calculating a distance between either the points or the features to do the work. With that, the transformation is done via another "manual" algorithm based on Root Mean Squared Error (RMSE).

But if we use Deep Learning techniques, we could also build a framework that, given two point clouds, automatically outputs the transformation parameters.

Like this:

Here, it looks like we ditched the correspondence step, but in reality, we used Deep Learning everywhere, and performed a regression of the transformation parameters directly after a feature extraction. It's a bit more "black box", but it works, and many algorithms have been created for this, and can work with multiple point clouds.

So you know, at least remotely, understand 3 approaches for point cloud registration.

Example 1: Zoox's Solid-State Fusion & Point Cloud Stitching

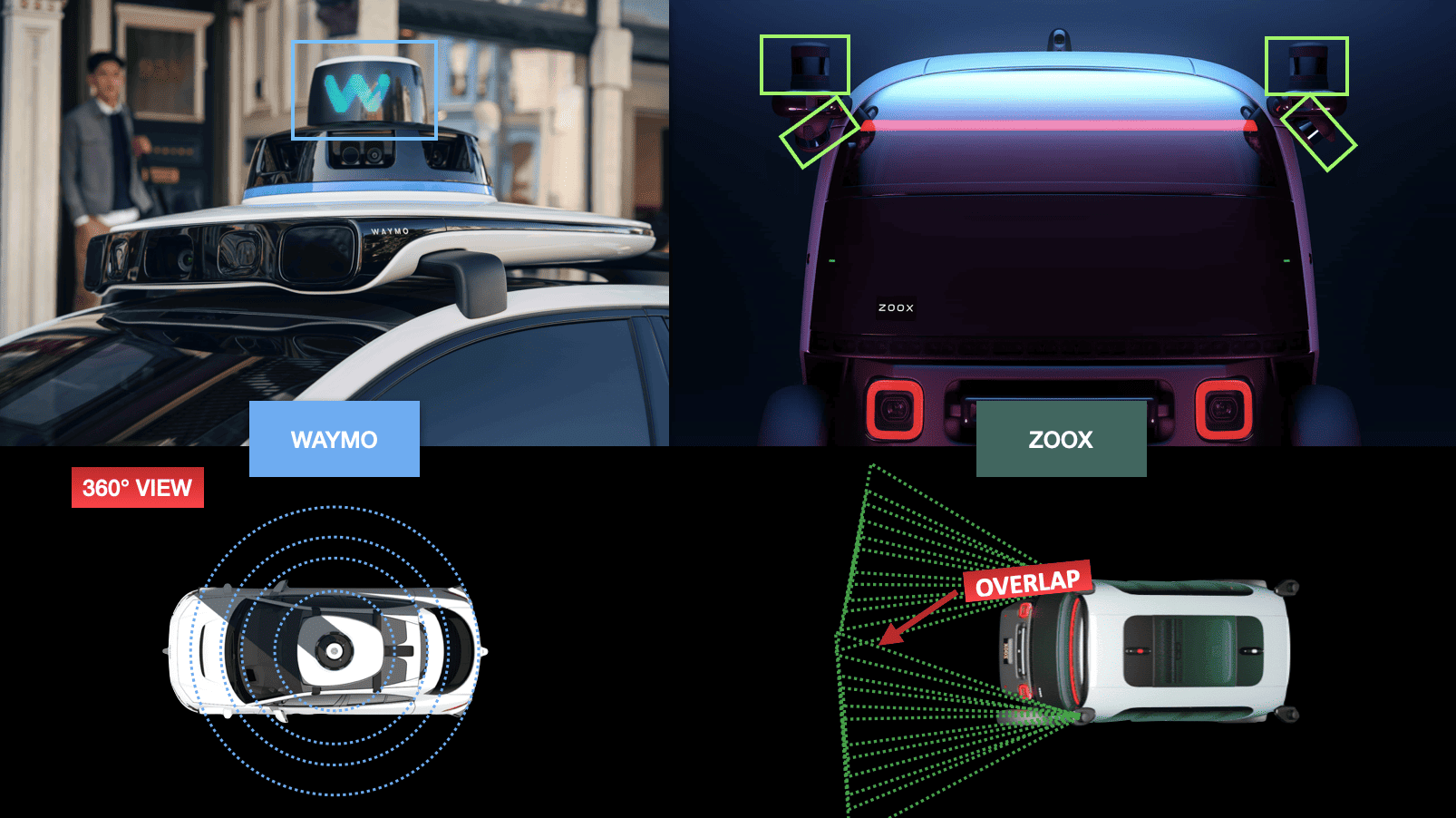

Did you ever see these mechanical LiDARs working on self-driving cars? At one point around 2017~2018, it seems like it was all I could see when viewing the CVPR conferences (conference on computer vision and pattern recognition), or when browsing self-driving car videos.

This LiDAR automatically provides a 360° view of the world. Yet, when looking at the self-driving car industry, you can see how it "shifted" to using solid-state LiDARs, that couldn't do a 360° view. So what did companies do? They started to use many of these LiDARs, and position them everywhere in cars.

So let's look at an example of two very popular self-driving car companies: Waymo & Zoox. The first one is using a 360° mechanical LiDAR, while the second one is using two solid-state LiDARs. Which of them do you think is going to need point cloud alignment?

Obviously, while Waymo only has one point cloud, Zoox has 4 of them. Now Zoox says: "These LiDARs are complementary." You can look at my article on the Sensor Fusion types, and you will see that what they're doing involves a process called complementary fusion, and that this process means that we're adding several sensors together into a single output.

Notice the visualization here:

This is what Tesla uses with their surrounding cameras, and this is what you can use to build panoramic photos from multiple images. Whatever the case, they're using Point Cloud Registration to do this.

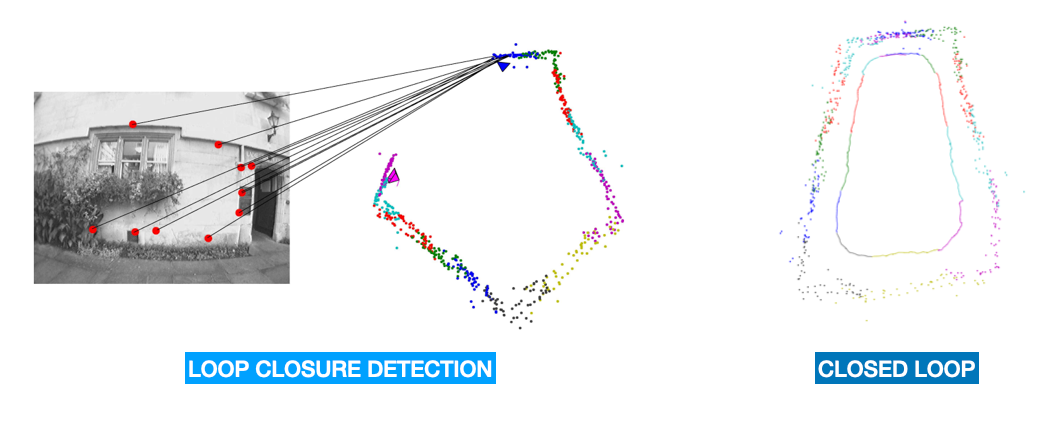

Example 2: Simultaneous Localization And Mapping with Loop Closure

Loop closure detection is another important type of applications. When doing SLAM, we sometimes visit places that we already saw. Loop closure helps "close the loop", and therefore not have duplicates in the map we're building.

The process is explained in the article, but it's widely used in mapping, and even 3D Reconstruction!

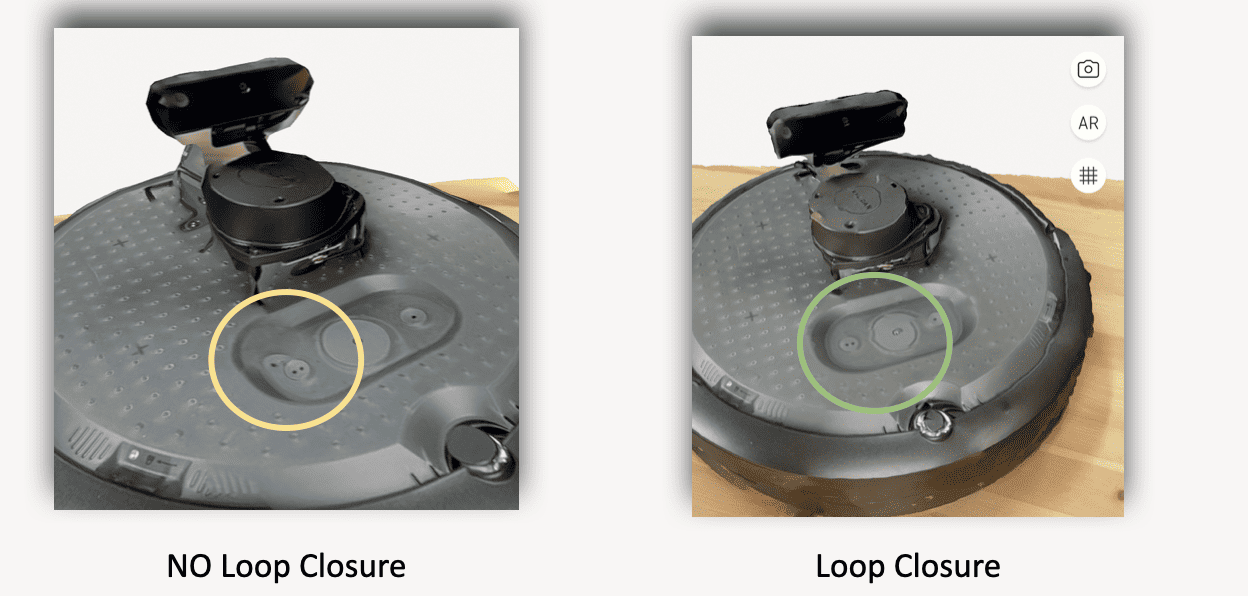

For example, I purchased a Turtlebot 4 the other day, and using the LiDAR on my iPhone, I was able to create a 3D model of the robot. Well, I tried with and without loop closure, and note what happened:

This is obviously done using Point Cloud Registration, and here I'm showing you a proof that it's needed, and that it works.

What if the Point Clouds are different?

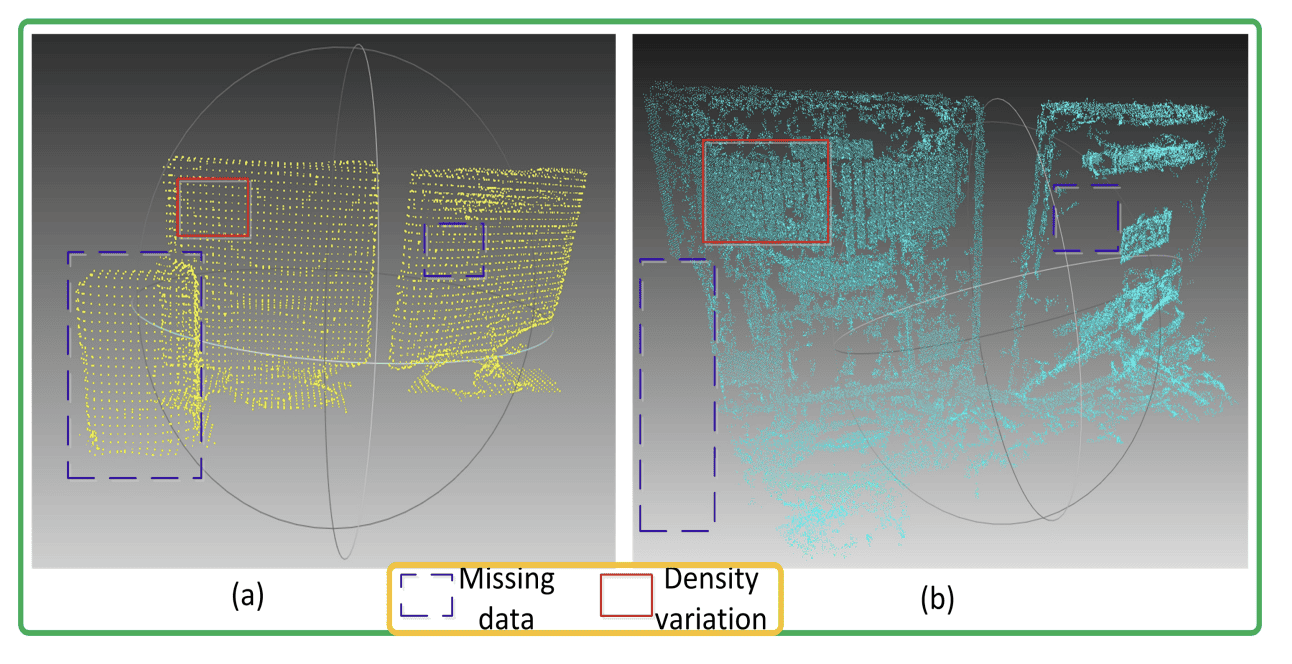

There is one caveat to all of these approaches. They all belong to a family we could call "same source" point cloud registration. This means that in every case, it's the same LiDAR operating, or a very similar one.

But imagine we want to reconstruct Notre Dame. How do we compare the current point cloud with the original one, knowing that it was from a 3D scanner from 2010 that may not even exist anymore? How would the matching process work if you have LiDAR A producing 20,000 points and LiDAR B producing 1M points? Or what if you have an RGB-D Camera, coupled with a 3D LiDAR?

The problem known as "Cross-Source" Point Cloud Registration becomes much more complicated, because you can't really match a source to its nearest point, because there may be hundreds of nearest points.

Fortunately, there are options around optimization and deep learning based methods, such as CSGM , that transforms the registration problem into a graph matching problem; or as FMR that does it with Deep Learning.

So, you know this problem exist, and it's more challenging, but it also brings benefits, because it opens new use cases (like construction, comparison, etc...), and can also leverage multiple sensors, such as color cameras and LiDARs.

I think we have accumulated quite a bit of knowledge, so let's do a summary:

The Summary

Here are the main elements you should remember about point cloud registration.

- Point Cloud Registration is the idea of aligning two or more point clouds together, to build one point cloud.

- This process involves two steps: correspondence finding and transformation estimation.

- There are 3 main families of algorithms existing: optimization based, feature based, and end-to-end based.

- Optimization based approaches include the Iterative Closest Point (ICP), or Gaussian Mixture Models (GMM), and involve finding the closest point in two clouds, and calculating a transformation between the original point cloud and the target point cloud.

- Feature Based approaches involve calculating point cloud features, such as keypoints, corners, colors, etc... and then do a distance calculation and a transformation based on these features.

- End-To-End is the process of regressing the transformation parameters directly using Deep learning.

- If the point clouds come from two different sensors, the problem is known as cross-source registration, and is usually solved by reframing the problem into something else (graph optimization for example).

See? We can reconstruct Notre Dame. And we can do many more things. Applications of this exist in autonomous driving, for example with Loop Closure in SLAM, in 3D Reconstruction, or even in Sensor Fusion.

So now, let's see the next steps:

Next Steps

- If you want to learn more about how to use Point Clouds Registration, I would recommend taking a look at my Loop Closure Article here.

- If you want to learn more about Sensor Fusion, I would take a look at my 9 Types of Sensor Fusion Algorithms here.

But the most recommended is below...