A complete overview of Object Tracking Algorithms in Computer Vision & Self-Driving Cars

Have you seen the movie 'Limitless'? I really loved this movie when growing up. It's the story of Eddie Morra, a broke writer who suddenly gets access to the NZT drug, unlocking immense cognitive capacities. Using it, he's able to learn quicker, benefit from enhanced memory, focus better, build charisma, and instantly have an amazing life.

I love this movie, and in particular the idea of being "limitless", which is similar to the movie Lucy where you start using your brain to 100% capacity, and thus fully exploit it. Tons of Computer Vision students today have the NZT pill in front of them, but refuse to take it. And by this, I mean they have great skills in image processing, and can detect objects and find 2D boxes, but are unable to fully exploit this skill to 100%.

In order to fully exploit your Computer Vision skills, you'd need object tracking, and this is exactly what we'll talk about in this article in 3 points:

- Object Detection vs Object Tracking: Why you shouldn't be an Object Detection Engineer, and why Object Tracking Engineers bring more benefits

- Object Tracking Elements: How to track objects from frame to frame

- Advanced Object Tracking: 3D Tracking, Deep Tracking, and more...

Object Detection vs Object Tracking: Why you shouldn't be an Object Detection Engineer, and why Object Tracking Engineers are better

Back when I started in Computer Vision, I often stumbled across courses where the curriculum went like this:

- CV Fundamentals: Image Processing, Filtering, Resizing, Colorization, OpenCV, computer vision algorithms, etc...

- Neural Network & CNNs: Neural Nets, MLP Classification, Backpropagation, CNNs Image Classification

- Advanced Computer Vision: 2D Object Detection, CV Projects (car counting, ...), ...

Somehow, no matter the course I picked — and even at university — object detection was presented as the utmost cutting-edge project you could work on. And somehow, almost 8 years later, it's still the case.

When I worked in the self-driving car field, I realized object detection was merely a handy tool, and NEVER the end goal. No, knowing that a detected object is in a bounding box centered at pixel (200,300) isn't directly useful, both because we're in the pixel space and because we have no information about these objects.

This is something I explain in the presentation page of my obstacle tracking course, but also in general every time I talk about object detection: a 2D bounding box is NOT the end goal. However, if you have information about that bounding box, it could be useful... For example:

- If you know how long each specific object has been in the frame

- Or how fast they're going

- Or where they're heading (even what action they'll take)

Then it becomes useful, and can be the origin of many real-world AI applications in retail, self-driving cars, robots, traffic monitoring, sports analytics, visual object tracking, and more...

Computer Vision might be represented by the YOLO algorithm and object detection, but the reality is, skills like Object Tracking are far more useful to companies, and often directly follow Object Detection.

To put things very clearly:

- The output of an object detector is a list of 2D or 3D Bounding Box coordinates

- The output of an object tracking algorithm is the bounding box PLUS the ID of each, and yes, we can add the speed, class, action, age in the frame, whether it's occluded or not, and so on...
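To make the difference concrete, here is a minimal sketch of the two outputs as Python data structures. The class and field names (`Detection`, `Track`, `start_track`, `age`, `occluded`...) are my own illustrative choices, not a standard API; a real tracker would store more (uncertainty, history, and so on).

```python
from dataclasses import dataclass
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass
class Detection:
    """What an object detector returns for one object in ONE frame."""
    box: Box
    score: float  # detector confidence
    label: str    # class name, e.g. "car"


@dataclass
class Track:
    """What a tracker maintains for one object ACROSS frames (hypothetical fields)."""
    track_id: int                               # stays the same from frame to frame
    box: Box
    label: str
    age: int = 1                                # number of frames the object has been followed
    velocity: Tuple[float, float] = (0.0, 0.0)  # pixels per frame
    occluded: bool = False


def start_track(detection: Detection, track_id: int) -> Track:
    """Promote a raw detection to a track by giving it an identity."""
    return Track(track_id=track_id, box=detection.box, label=detection.label)


det = Detection(box=(180.0, 260.0, 220.0, 340.0), score=0.91, label="car")
track = start_track(det, track_id=1)
print(track)
```

The detection only knows "there is a car here, now"; the track is where the ID, age, and motion live.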

But there are many types of object tracking algorithms to consider, so let's immediately jump to the second point:

The Main Elements of Object Tracking: How to track objects from frame to frame

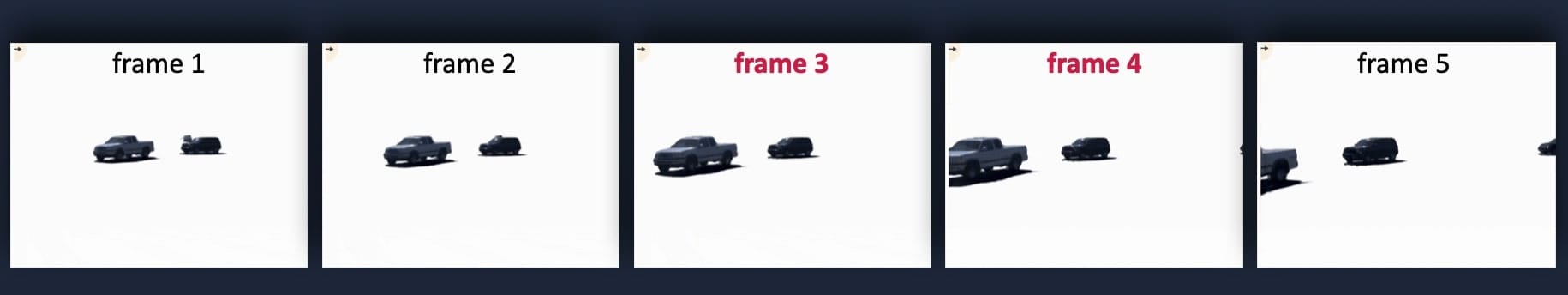

The initial goal of object tracking is, taking two frames, to associate their bounding boxes. To explain the idea very well, let me take the example of this scene:

Say this is the view from our self-driving car, and we want to do vehicle tracking. First, let's remove the background and focus only on the moving objects:

Ohhh this is so cool. What I just did is called Gaussian Decomposition and has been achieved solely to impress you with 3D Gaussian Splatting, but whatever, let's imagine we're the self-driving car stopped and looking at these 3 objects.

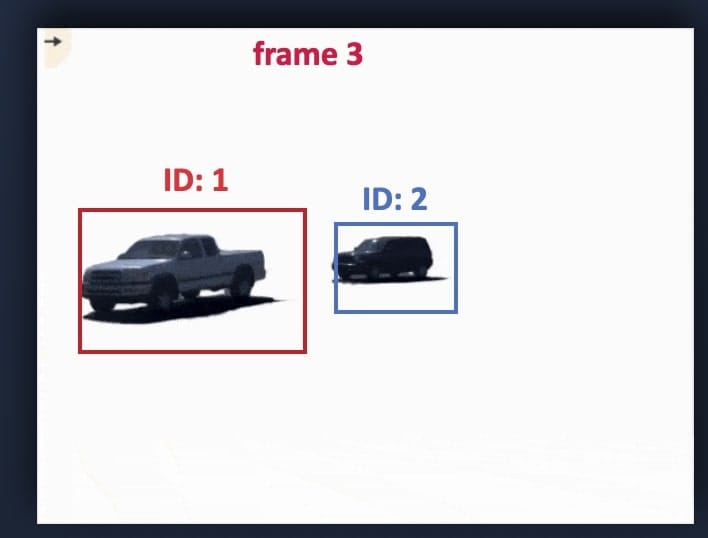

How would we assign the IDs? First, we would need to extract video frames from this scene, so let's put on our video object tracking lenses and look at it this way:

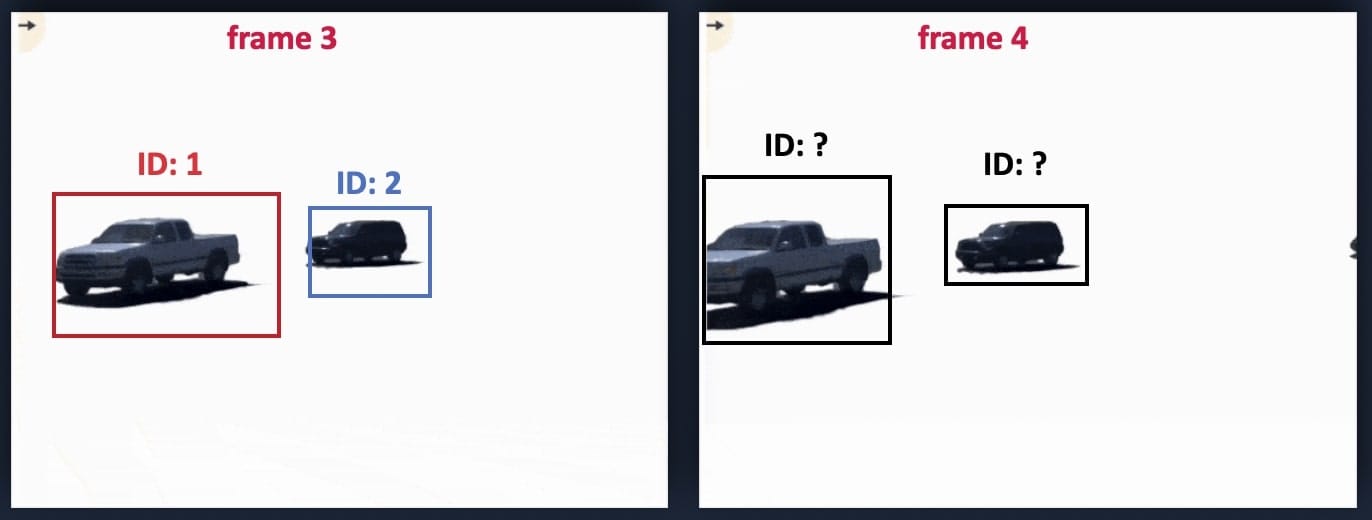

Now, let's try to do the tracking for Frame 3 and Frame 4.

There are 3 main steps you need to understand:

- Object Detection: YOLO — because you only live once

- Data Association: Bipartite Matching, the Hungarian Algorithm

- Object Tracking: Kalman Filters

1) Object Detection

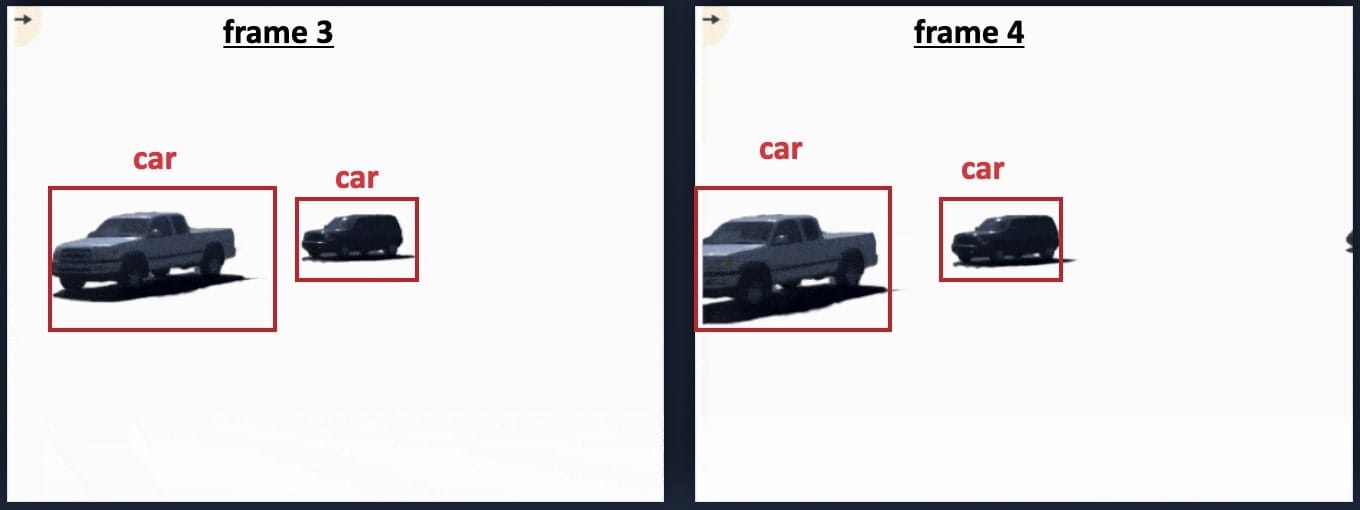

The first step is simple: we detect objects. This is where everybody rushes, and where everybody stops. Since we said we'd focus on frame 3 and 4 only, this would be the output of YOLO:

The problem here is, if every car is red, there is no tracking. We need IDs, and we need good tracking. Enter step 2.

2) Data Association: Bipartite Matching & The Hungarian Algorithm

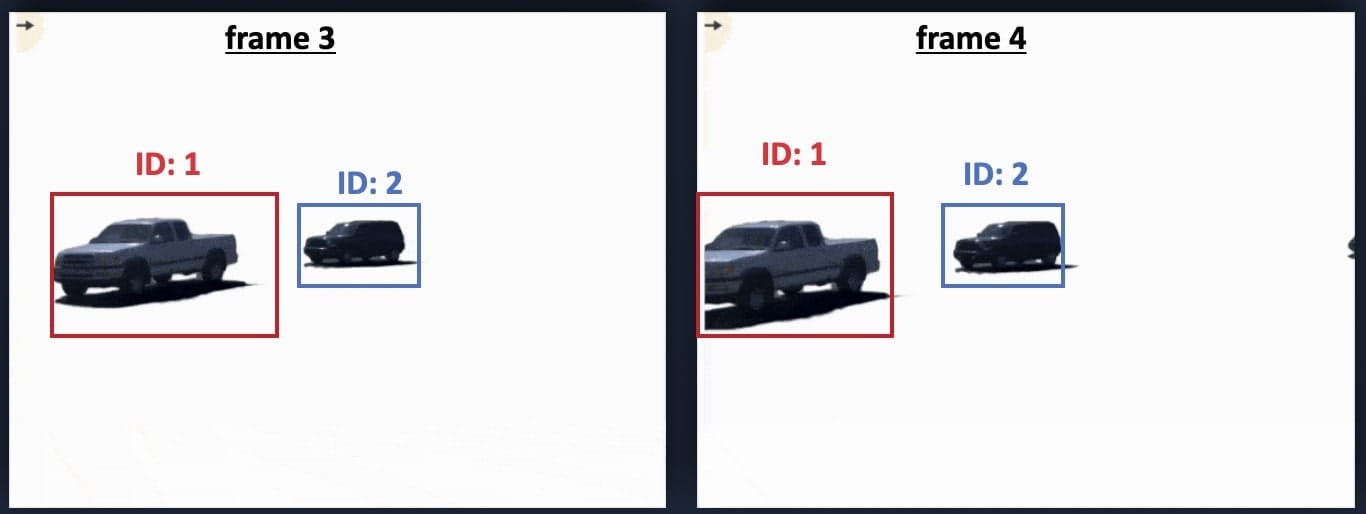

The second step is to attribute IDs to every box, and this for the entire scene. In this article, I'm going to consider that we're tracking multiple objects, and thus doing multi object tracking (MOT). If we keep our same example, it should look like this:

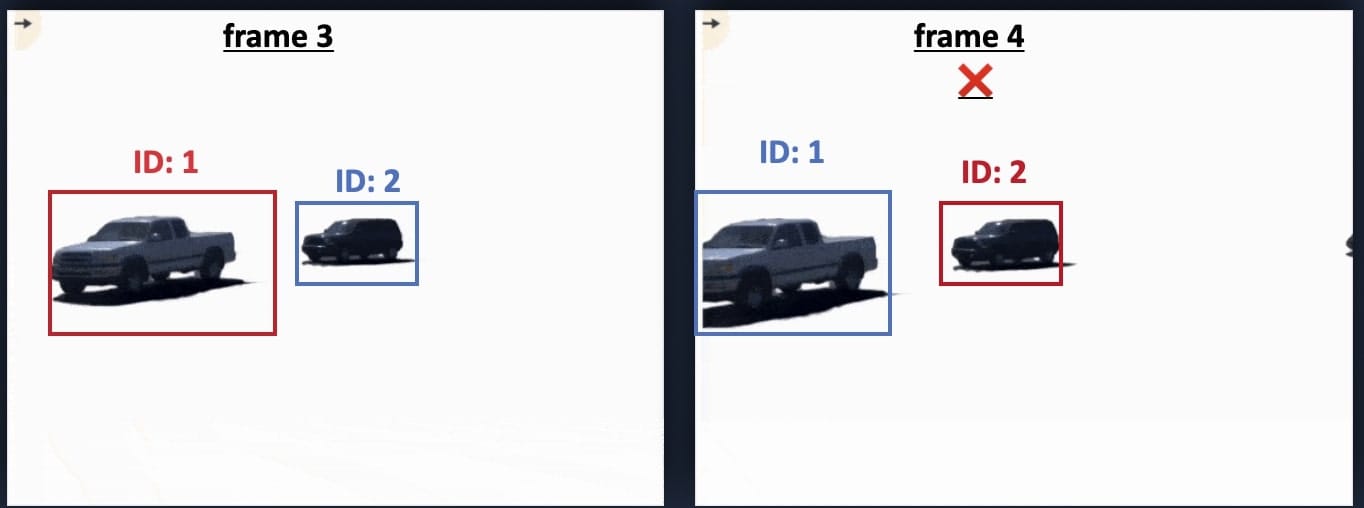

And to be clear, it should NOT look like this:

So how do we do that? You could assign an ID to every single object in frame 3, but then the objects in frame 4 have to receive the IDs of the same physical objects.

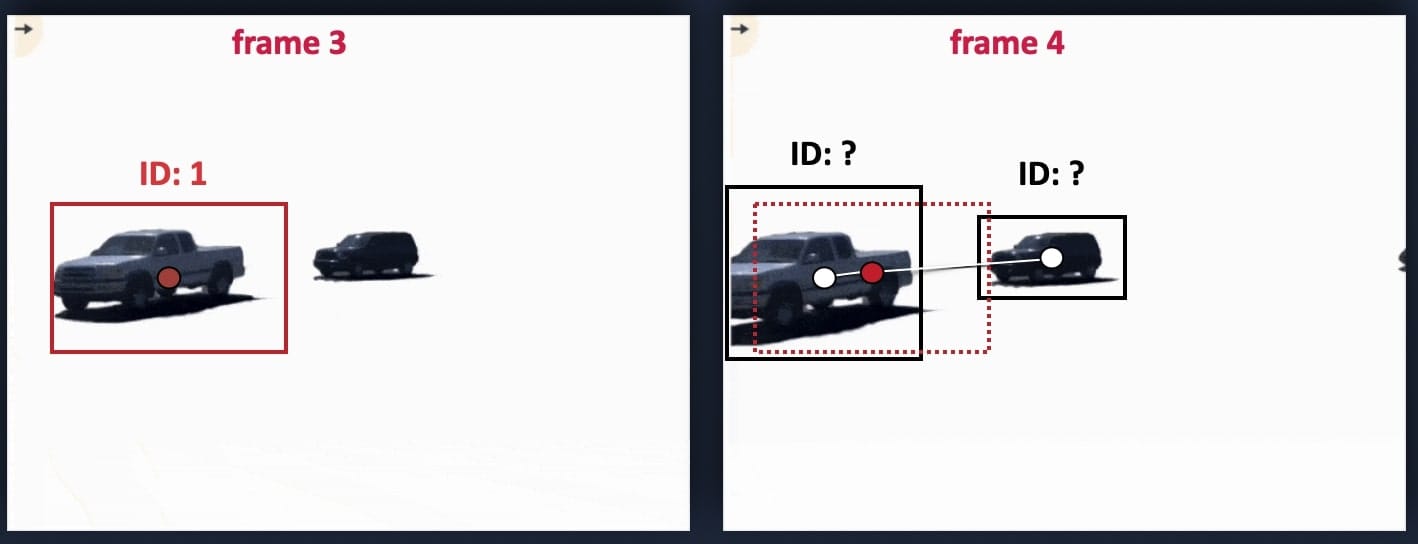

So here is how we are going to make a good matching algorithm:

- We attribute an ID to each box for the first frame (t-1).

- We match the box from (t-1) to (t)

- We assign the ID to each box for the second frame (t)

So:

- We attribute an ID to each box for the first frame (t-1).

Then:

- We match the box from (t-1) to (t)

But how?

This is where we run the data association. We are going to, for each box (and this is important), try to find the closest match with the other box. For this, we'll consider matching criteria, such as:

- The euclidean distance of the center of the boxes

- The size & shape change of the box

- The class (don't match a car with a cyclist)

- The IOU (Intersection Over Union) of how these 2 boxes overlap

- The deep association metric each box has with the other

- And more... (in my obstacle tracking course, we implement object tracking using a cost function of tons of these costs and more...)
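Among these criteria, the IOU is the one you will meet most often, so here is a minimal sketch of it. I am assuming boxes in the `(x1, y1, x2, y2)` corner format; the function name `iou` and the example boxes are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes, in [0, 1]."""
    # Corners of the overlapping rectangle (if any)
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])

    # Width/height are clamped at 0 when the boxes do not overlap
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


previous_box = (100, 100, 200, 200)  # box at (t-1)
shifted_box = (150, 100, 250, 200)   # same object, moved 50 px to the right
far_box = (400, 400, 500, 500)       # a different object

print(iou(previous_box, shifted_box))  # partial overlap
print(iou(previous_box, far_box))      # no overlap at all
```

A high IOU means "probably the same object"; to use it as a cost (lower is better), people typically take `1 - IOU`.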

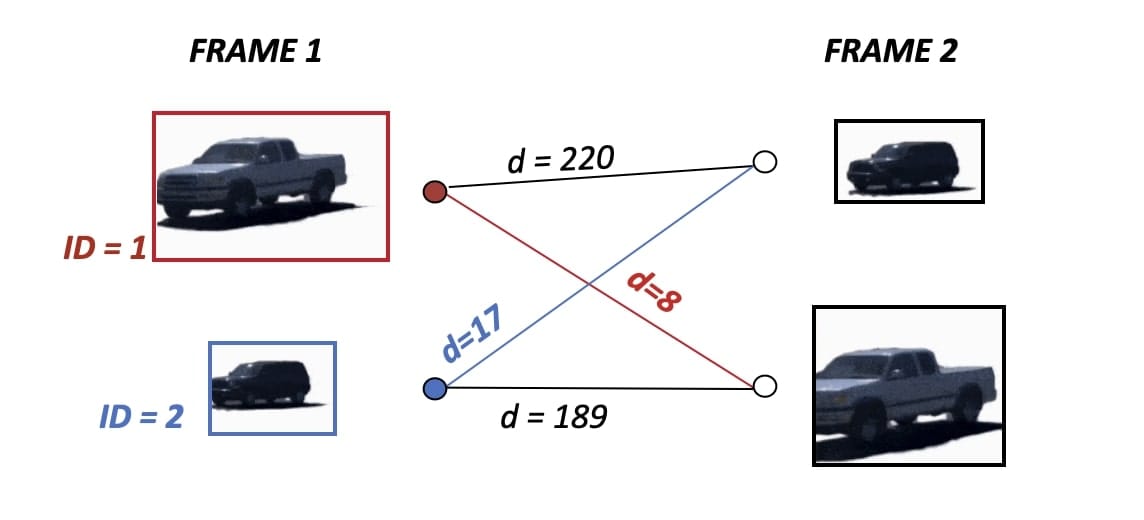

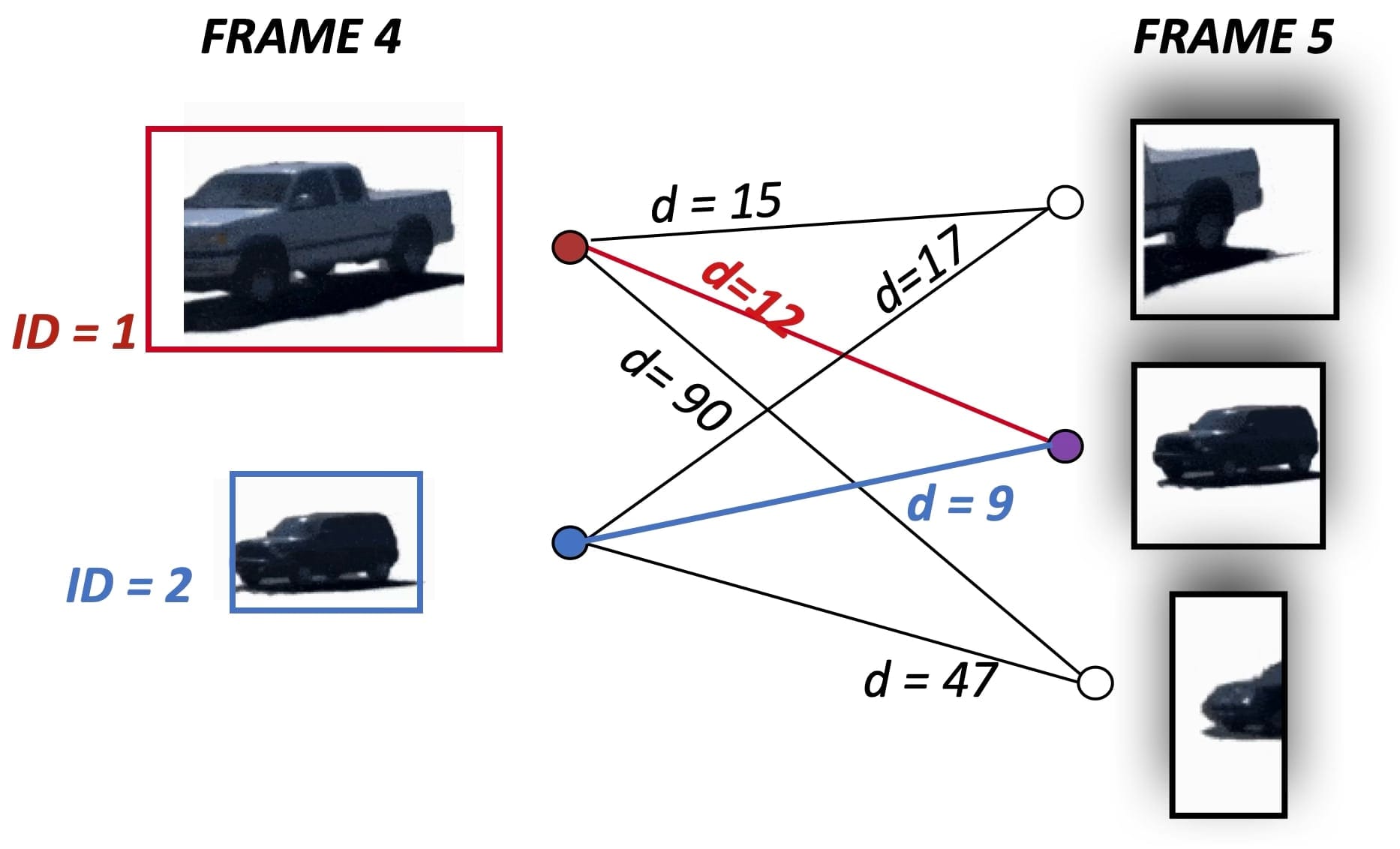

Let me show you an example for the first car, if we pick the euclidean distance as a metric:

As you can see, if we compute the euclidean distance between the red dot and the left car, we have a very small distance. On the other hand, we have a big distance with the right car. And this is how we can do the matching. You are then going to hold what we call a Bipartite Graph storing all the distances.
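In code, that bipartite graph is usually stored as a cost matrix: one row per box at (t-1), one column per box at (t). Here is a small sketch with the euclidean distance; the helper names and the box coordinates are made up for the example.

```python
import math


def center(box):
    """Center (cx, cy) of an (x1, y1, x2, y2) box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def distance_matrix(prev_boxes, curr_boxes):
    """costs[i][j] = distance between box i at (t-1) and box j at (t)."""
    matrix = []
    for prev in prev_boxes:
        px, py = center(prev)
        row = []
        for curr in curr_boxes:
            cx, cy = center(curr)
            row.append(math.hypot(cx - px, cy - py))
        matrix.append(row)
    return matrix


# Two cars at (t-1) and the same two cars, slightly moved, at (t)
prev_boxes = [(100, 200, 160, 260), (400, 200, 460, 260)]
curr_boxes = [(110, 200, 170, 260), (390, 205, 450, 265)]

costs = distance_matrix(prev_boxes, curr_boxes)
for row in costs:
    print([round(value, 1) for value in row])
```

Each row has one small value (the same car) and one large value (the other car), which is exactly what the matching step exploits.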

Finally:

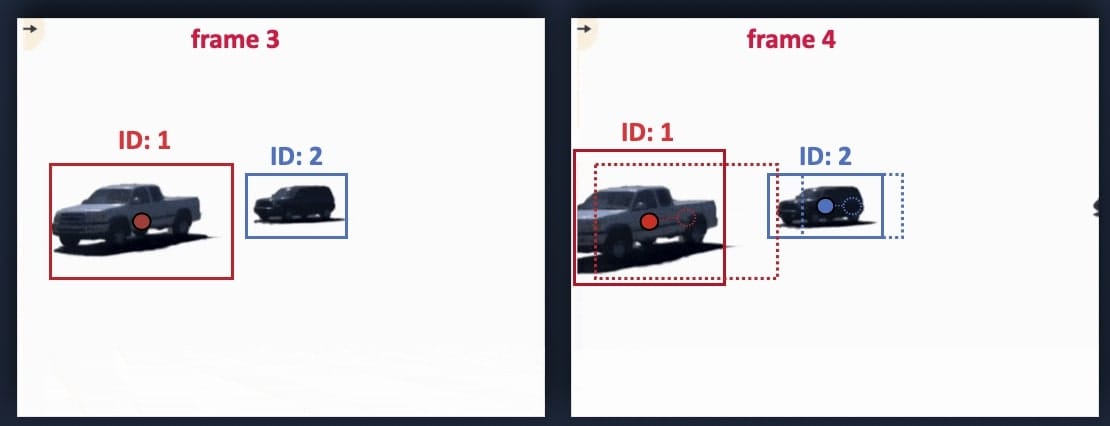

- We assign the ID to each box for the second frame (t)

The lowest distance is picked and every object is assigned.
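A very naive way to write "pick the lowest distance" is a greedy loop: sort all the pairs by distance, and accept a pair if neither box has been used yet. This is only a sketch under that greedy assumption (the function name `assign_ids` and the numbers are mine), and it is not an optimal matcher.

```python
def assign_ids(prev_ids, costs):
    """Greedy ID propagation.

    prev_ids[i] is the ID of box i at (t-1).
    costs[i][j] is the distance between box i at (t-1) and box j at (t).
    Returns {index of the box at (t): ID inherited from (t-1)}.
    """
    # All candidate pairs, cheapest first
    pairs = sorted(
        (costs[i][j], i, j)
        for i in range(len(costs))
        for j in range(len(costs[i]))
    )
    used_prev, used_curr, ids = set(), set(), {}
    for _, i, j in pairs:
        if i in used_prev or j in used_curr:
            continue  # one of the two boxes is already matched
        ids[j] = prev_ids[i]
        used_prev.add(i)
        used_curr.add(j)
    return ids


prev_ids = [1, 2, 3]
costs = [
    [12.0, 250.0, 480.0],
    [240.0, 9.0, 230.0],
    [470.0, 235.0, 15.0],
]
ids_at_t = assign_ids(prev_ids, costs)
print(ids_at_t)
```

On a clean scene like this one, greedy is enough; it starts to break when two boxes compete for the same match.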

And this is how we do it!

Now, I showed you a very simple version, and before I get loaded with "But what if...?" — there is one final idea to talk about:

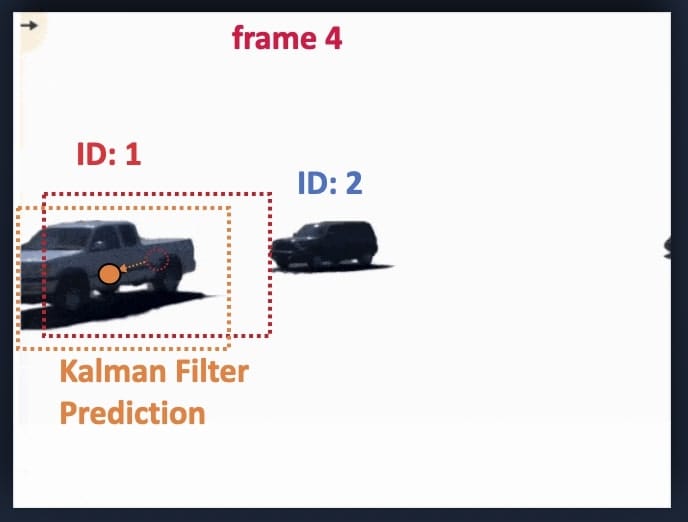

3) The Kalman Filter

When combined with a Kalman Filter, this object tracking method is called SORT (Simple Online and Realtime Tracking). What does a Kalman Filter do? Over time, objects follow a real motion, and thus, the Kalman Filter is going to predict the next position of the box, so that the distance to the new detection gets smaller.

If I were to illustrate it, it would be:

The orange box is the predicted box, and the red box is the (t-1) box. When using a Kalman Filter, we are going to use the orange box for matching instead. So rather than matching the box at time (t-1) with the box at time (t), we match it with its forward prediction at time (t). This has a big impact, because if a car suddenly accelerates, or is about to leave the frame, you can predict it and thus improve your tracking results.
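Here is a tiny numeric sketch of why matching against the prediction helps. I am assuming we already have a velocity estimate in pixels per frame, and that the motion is constant velocity; all the numbers are invented for the illustration.

```python
import math


def predict_center(center, velocity, dt=1.0):
    """Constant-velocity prediction of a box center, dt frames ahead."""
    return (center[0] + velocity[0] * dt, center[1] + velocity[1] * dt)


center_prev = (100.0, 200.0)  # "red box": where the car was at (t-1)
velocity = (30.0, 0.0)        # assumed estimate, pixels per frame
detection_t = (132.0, 201.0)  # what the detector reports at (t)

center_pred = predict_center(center_prev, velocity)  # "orange box"

dist_without_prediction = math.dist(center_prev, detection_t)
dist_with_prediction = math.dist(center_pred, detection_t)

print(round(dist_without_prediction, 2), round(dist_with_prediction, 2))
```

The faster the object moves, the bigger the gap between the two distances, and the more the prediction protects you from a wrong match.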

How a Kalman Filter works

To clarify a bit, we'll initialize our Kalman Filter with a motion model, say constant velocity, and predict the next position of the box. At first, with no velocity estimate yet, we may predict that the car stays at the same position as it is now. Then, we'll get the "real" data from YOLO at t+1, and thus we know we were a bit "off", so we use that error to correct the state and refine our next prediction... again and again until our predictions land very close to the next position.
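To see this predict / measure / correct loop in action, here is a minimal sketch of a constant-velocity Kalman Filter on a single coordinate (say, the x of the box center), written in plain Python. The class name `KalmanFilter1D`, the noise values, and the initial uncertainties are assumptions I picked for the demo, not values from a real tracker.

```python
class KalmanFilter1D:
    """Constant-velocity Kalman filter on one coordinate. State = [position, velocity]."""

    def __init__(self, position, process_var=1.0, measurement_var=4.0):
        self.p, self.v = position, 0.0        # no velocity yet: "same position as now"
        self.P = [[10.0, 0.0], [0.0, 100.0]]  # covariance: we are very unsure about velocity
        self.q = process_var                  # assumed process noise (added on the diagonal)
        self.r = measurement_var              # assumed detector noise

    def predict(self, dt=1.0):
        (a, b), (c, d) = self.P
        self.p += dt * self.v                 # x = F x, with F = [[1, dt], [0, 1]]
        self.P = [                            # P = F P F^T + Q
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]
        return self.p

    def update(self, measured_position):
        (a, b), (c, d) = self.P
        residual = measured_position - self.p  # how much we were "off"
        s = a + self.r                         # innovation covariance (H = [1, 0])
        k0, k1 = a / s, c / s                  # Kalman gain
        self.p += k0 * residual
        self.v += k1 * residual
        self.P = [                             # P = (I - K H) P
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]


kf = KalmanFilter1D(position=0.0)
errors = []
for frame in range(1, 11):
    measured = 5.0 * frame      # the car really moves 5 px per frame
    predicted = kf.predict()
    errors.append(abs(measured - predicted))
    kf.update(measured)

print([round(e, 2) for e in errors])
print(round(kf.v, 2))
```

The first prediction is off by the full 5 pixels, then the filter picks up the velocity and the prediction error shrinks frame after frame.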

I have a complete article doing a Live Example of Multi Object Tracking with the actual KF values here.

Awesome, so this is our second point, and before showing you some advanced algorithms in our third point, I'll just take a few questions...

Questions about the simple tracking methods

A few questions you may have about this object tracking approach:

"Is the euclidean distance standard? What do people use in the field?"

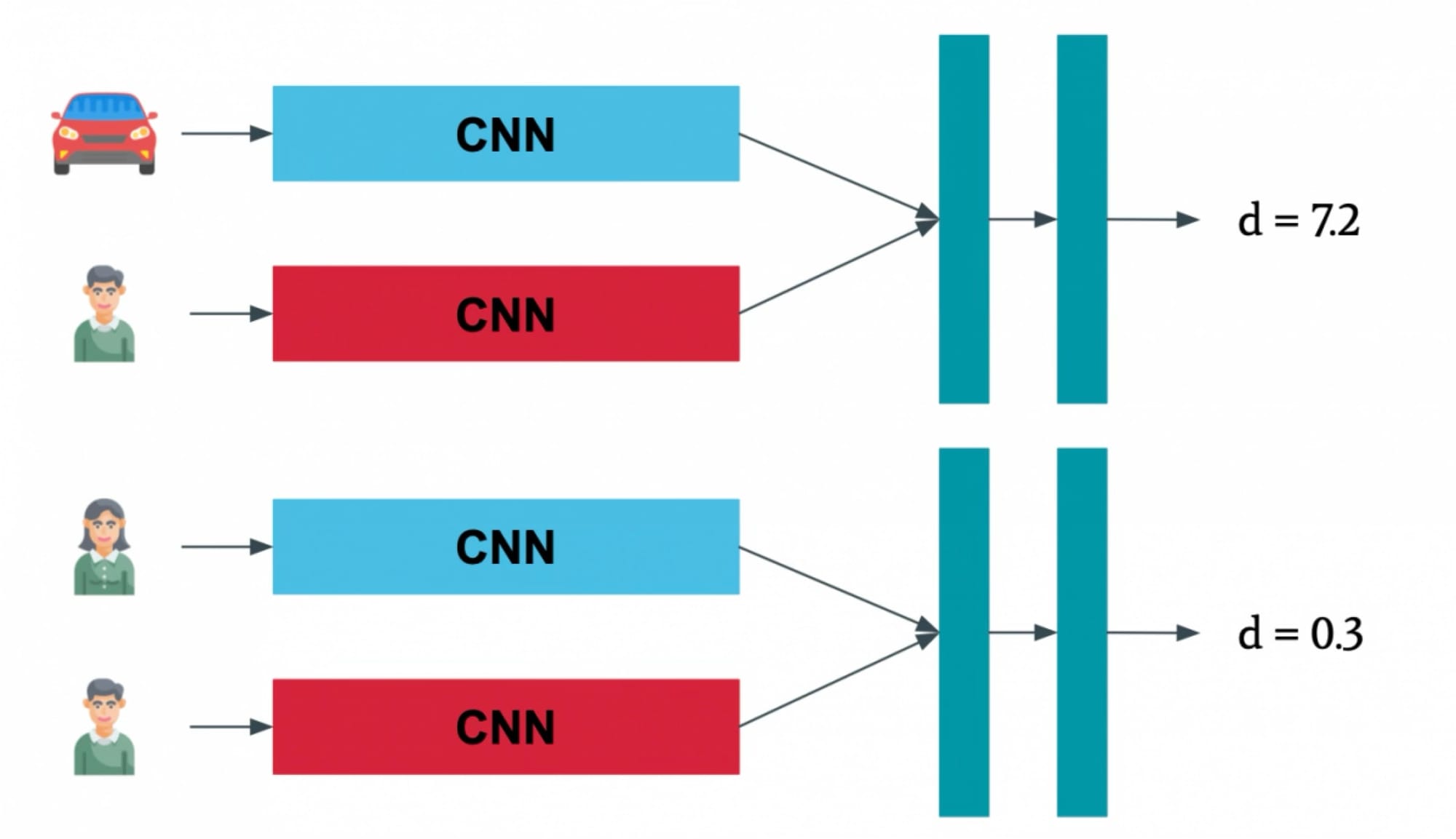

No, the euclidean distance is actually one of the worst metrics you can use. Why? Because imagine we have many objects, or an object that comes in front of another. Suddenly, the shortest distance could be attributed to another object, and thus, we could fail the tracking. People like to use the IOU (Intersection Over Union) instead, or the IOU + the distance between the deep convolutional features (we look at the CNN feature map inside the bounding box). Often, a combined cost is the best solution.

"What if 2 objects have a minimal distance to the same object?"
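As a rough idea of what a combined cost can look like, here is a sketch that blends an IOU term and an appearance term, and forbids matches across classes. The weights, the `forbidden` value, and the assumption that both inputs are already scaled to [0, 1] are mine; in practice you would tune all of this on your own data.

```python
def combined_cost(iou_value, feature_distance, same_class,
                  w_iou=0.5, w_feature=0.5, forbidden=1e6):
    """Blend several association cues into one cost (lower is better).

    iou_value:        overlap in [0, 1] between the two boxes
    feature_distance: appearance distance in [0, 1] (0 = identical look)
    same_class:       False forbids the match (a car is never a cyclist)
    """
    if not same_class:
        return forbidden
    return w_iou * (1.0 - iou_value) + w_feature * feature_distance


good_match = combined_cost(iou_value=0.8, feature_distance=0.1, same_class=True)
no_overlap = combined_cost(iou_value=0.0, feature_distance=0.1, same_class=True)
wrong_class = combined_cost(iou_value=0.9, feature_distance=0.05, same_class=False)

print(good_match, no_overlap, wrong_class)
```

The point of the blend is that no single cue can decide alone: a box that looks right but does not overlap at all still ends up with a clearly worse cost.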

Haha! That is interesting. Imagine this case:

As you can see, in this example, object 1 is moving, and a third object is entering the scene. It's possible that we don't know exactly how to do the match; maybe the first object moved too much, and thus we have a situation like this:

So what if everybody has to be assigned to the middle object? This can happen extremely often, especially when the two frames don't have the same number of vehicles. This question, and ALL the others like "What if we have occlusions?" or "What if 2 objects are in frame 3 but 5 objects are in frame 4" or "What if an object is mis-detected?"... can be answered with one word: The Hungarian Algorithm.

This is the algorithm behind all matching, even the advanced ones with Deep Learning, and if you'd like to understand how it works, I have a complete article about it here.
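To give you a feel for what "solving the matching globally" means, here is a sketch on a tiny example where two tracks both prefer the same detection. Careful: this is NOT the Hungarian algorithm itself, but a brute-force stand-in that tries every assignment and keeps the cheapest one, which gives the same optimal result on small inputs. The real algorithm runs in polynomial time, and in Python people commonly rely on SciPy's `scipy.optimize.linear_sum_assignment` for this. The `max_cost` threshold and the function name are assumptions for the example.

```python
from itertools import permutations


def optimal_assignment(costs, max_cost=100.0):
    """Exhaustive search for the assignment with the lowest total cost.

    costs[i][j] = cost of matching track i (t-1) with detection j (t).
    Returns (matches, unmatched_tracks, unmatched_detections).
    """
    n_tracks = len(costs)
    n_dets = len(costs[0]) if costs else 0
    size = max(n_tracks, n_dets)

    # Pad to a square matrix: a dummy row/column means "leave it unmatched"
    padded = [
        [costs[i][j] if i < n_tracks and j < n_dets else max_cost
         for j in range(size)]
        for i in range(size)
    ]

    # Try every one-to-one assignment (only viable for a handful of objects)
    best = min(
        permutations(range(size)),
        key=lambda perm: sum(padded[i][perm[i]] for i in range(size)),
    )

    matches = [
        (i, j) for i, j in enumerate(best)
        if i < n_tracks and j < n_dets and costs[i][j] < max_cost
    ]
    matched_tracks = {i for i, _ in matches}
    matched_dets = {j for _, j in matches}
    unmatched_tracks = [i for i in range(n_tracks) if i not in matched_tracks]
    unmatched_dets = [j for j in range(n_dets) if j not in matched_dets]
    return matches, unmatched_tracks, unmatched_dets


# 2 tracks, 3 detections: both tracks are closest to detection 1 (the middle one)
costs = [
    [30.0, 20.0, 200.0],
    [210.0, 25.0, 40.0],
]
matches, unmatched_tracks, unmatched_dets = optimal_assignment(costs)
print(matches, unmatched_tracks, unmatched_dets)
```

Track 0 gives up its favorite detection because the total cost is lower that way, and the leftover detection can then be used to start a new track.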

You now have a good idea of how to do multiple object tracking, let's move to the advanced algorithms.

Advanced Multi Object Tracking Models

In this section, I will still focus on the Multiple Object Tracking problem, not on single object tracking (image tracking), which is often a simpler Computer Vision problem answered with other techniques.

In the example I showed you, we were (1) detecting cars and (2) tracking them. We call this tracking-by-detection. In reality, we don't have to do this. Modern approaches today can detect and track objects in one go: we call this joint detection and tracking. And thus, we have two families:

- Tracking by Detection algorithms

- Joint Detection and Tracking algorithms

Let's briefly take a look at each:

Tracking By Detection

You already saw a simple object tracking approach called SORT, which belongs to the first family; and when you add deep CNN metrics, you have Deep SORT! The idea is, rather than simply looking at the euclidean distances, we also add the Deep CNN feature distances as a metric. For example:

The matching is here done using the IOU/Distance/Shapes, etc... but also adding this new cost, which is going to look "inside" the bounding box.
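To illustrate the idea of looking "inside" the box, here is a sketch of an appearance cost based on the cosine distance between two feature vectors. I am assuming each box has already been turned into an embedding by a CNN; the 3-value vectors below are invented (real embeddings have many more dimensions).

```python
import math


def cosine_distance(feat_a, feat_b):
    """1 - cosine similarity: 0 means same direction, larger means less similar."""
    dot = sum(a * b for a, b in zip(feat_a, feat_b))
    norm_a = math.sqrt(sum(a * a for a in feat_a))
    norm_b = math.sqrt(sum(b * b for b in feat_b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 1.0  # no usable appearance information
    return 1.0 - dot / (norm_a * norm_b)


track_feature = [0.9, 0.1, 0.3]     # stored appearance of track #1 (made-up values)
same_car = [0.85, 0.15, 0.35]       # new detection that looks alike
other_car = [0.1, 0.9, 0.2]         # new detection that looks different

print(round(cosine_distance(track_feature, same_car), 3))
print(round(cosine_distance(track_feature, other_car), 3))
```

This value is then added to the other costs, so that two boxes that overlap but look nothing alike are less likely to be matched.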

What are other approaches from this family?

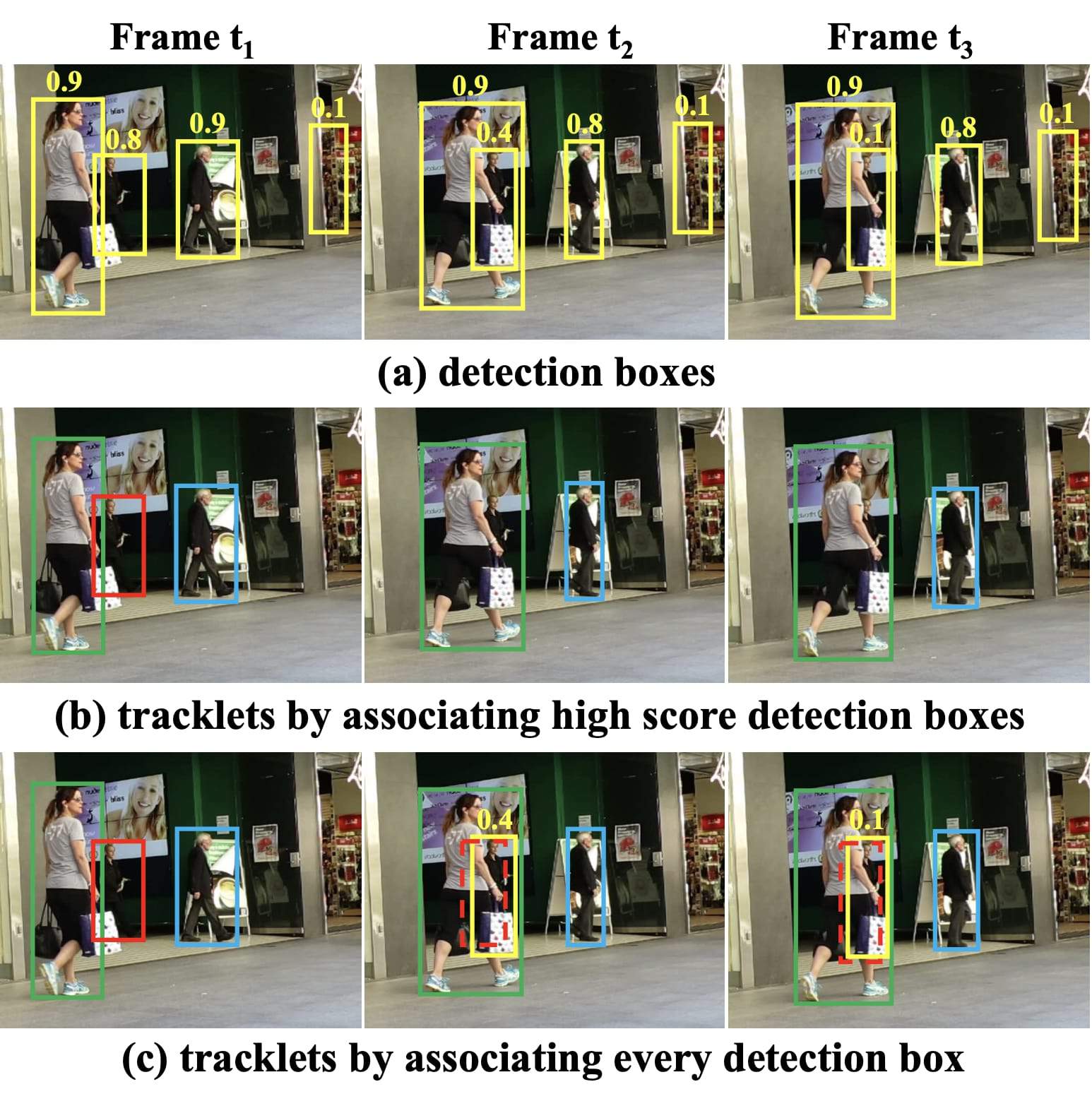

For example, ByteTrack is pretty well-known:

The goal of this algorithm is to use all the detection boxes, even the low confidence ones: the high-confidence detections are matched to the tracks first, and the low-confidence ones are then matched to the tracks that remained unmatched (when it makes sense). ByteTrack's "two stage association" approach (of associating both high-confidence and low-confidence detections) makes it highly beneficial in situations where confidence is inconsistent or where external factors make detection challenging.
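Here is a heavily simplified sketch of that two-stage idea. To keep it short, I am assuming a greedy IOU matcher instead of a proper assignment solver, no Kalman prediction, and made-up thresholds (`high_thresh`, `min_iou`); creating new tracks from unmatched high-confidence detections is also left out. So take it as an illustration of the principle, not as ByteTrack's actual implementation.

```python
def iou(a, b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(track_boxes, det_boxes, min_iou):
    """Greedy IOU matching. Returns (matches, indices of unmatched tracks)."""
    pairs = sorted(
        ((iou(t, d), i, j)
         for i, t in enumerate(track_boxes)
         for j, d in enumerate(det_boxes)),
        reverse=True,
    )
    used_t, used_d, matches = set(), set(), []
    for overlap, i, j in pairs:
        if overlap < min_iou:
            break  # remaining pairs overlap even less
        if i in used_t or j in used_d:
            continue
        matches.append((i, j))
        used_t.add(i)
        used_d.add(j)
    unmatched_t = [i for i in range(len(track_boxes)) if i not in used_t]
    return matches, unmatched_t


def two_stage_association(track_boxes, detections, high_thresh=0.5, min_iou=0.3):
    """detections: list of (box, score). Returns (matches, lost track indices)."""
    high = [k for k, (_, score) in enumerate(detections) if score >= high_thresh]
    low = [k for k, (_, score) in enumerate(detections) if score < high_thresh]

    # Stage 1: every track against the high-confidence detections
    first, leftover = greedy_match(
        track_boxes, [detections[k][0] for k in high], min_iou)
    matches = [(t, high[d]) for t, d in first]

    # Stage 2: tracks still unmatched against the low-confidence detections
    second, still_lost = greedy_match(
        [track_boxes[t] for t in leftover], [detections[k][0] for k in low], min_iou)
    matches += [(leftover[t], low[d]) for t, d in second]

    lost = [leftover[t] for t in still_lost]
    return sorted(matches), lost


track_boxes = [(100, 100, 200, 200), (300, 100, 400, 200)]
detections = [
    ((105, 100, 205, 200), 0.90),  # clear view of car 0
    ((305, 100, 405, 200), 0.20),  # car 1, partly hidden: low confidence
    ((600, 600, 650, 650), 0.10),  # background noise
]
matches, lost = two_stage_association(track_boxes, detections)
print(matches, lost)
```

A tracker that throws away everything under the confidence threshold would lose car 1 here; the second stage recovers it, while the noisy box is ignored because it overlaps no track.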

Now let's see the second family:

Joint Detection & Tracking

Here are a few state of the art algorithms to consider...

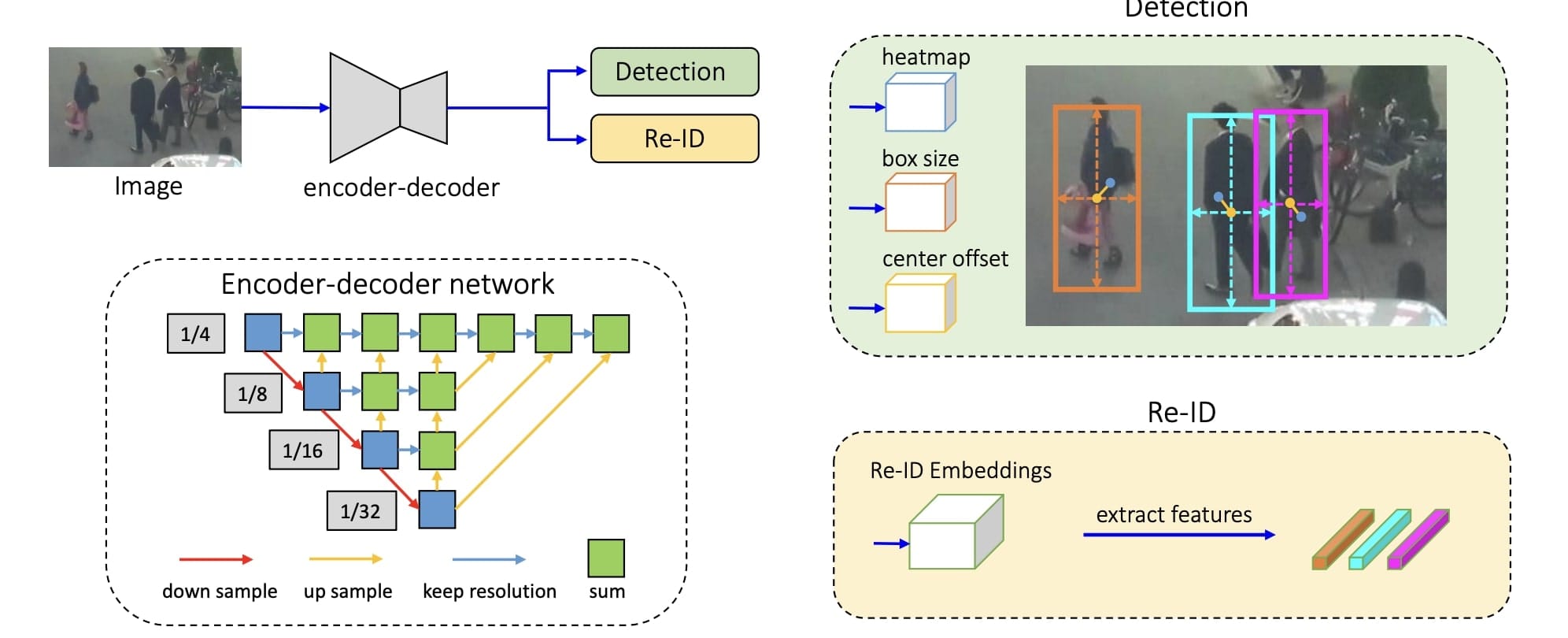

FairMOT

FairMOT is a multi-object tracking (MOT) framework that is focused on the re-identification problem. The training loss is optimized for both object detection and re-identification (ReID). It has two branches: one for anchor-free detection and another for ReID feature embedding. This is really effective when you have occlusions or dense crowds, and it offers real-time performance without compromising tracking accuracy.

Now is this better than the other one? Which could be better? As you saw, some algorithms are optimized for occlusions, others for using multiple sensors (or different camera angles), others for sudden velocity changes... etc...

Now let's see some examples:

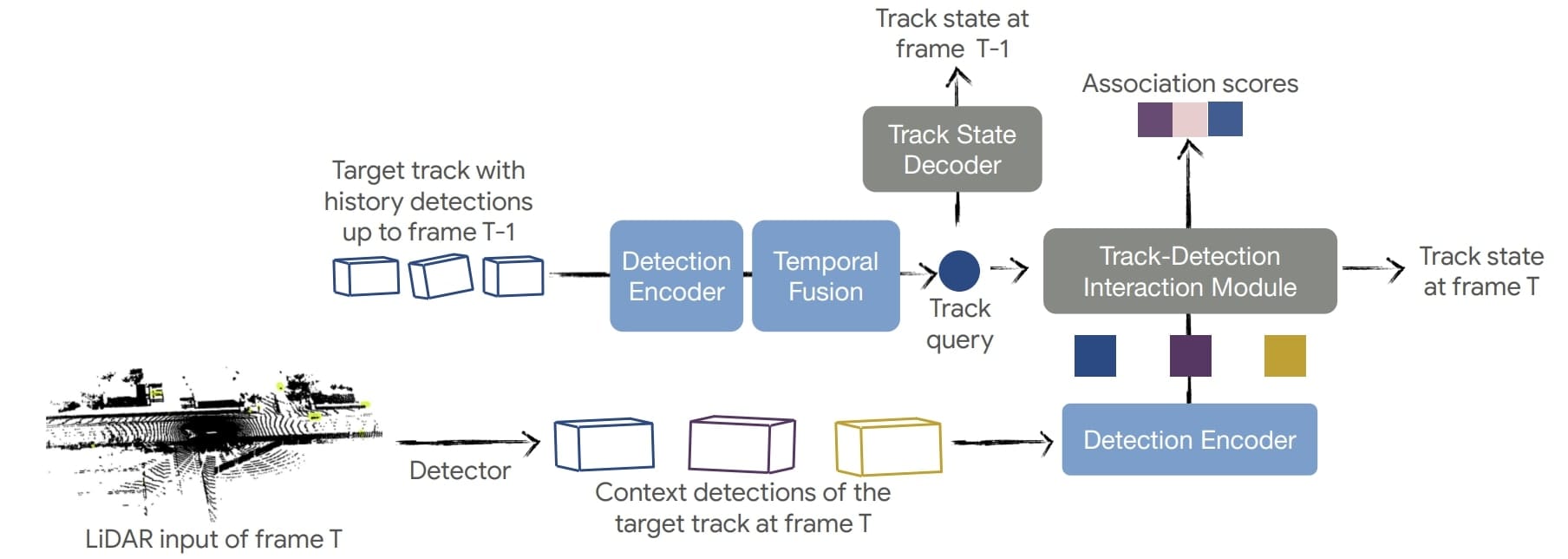

Example 1: Waymo's Stateful Track Transformers

In 2024, Waymo released an object tracking algorithm called STT, short for Stateful Tracking with Transformers. Here is the diagram:

As you can see, this algorithm is based on LiDAR (as for almost everything at Waymo), and it begins with the Detection Encoder that encodes all of the 3D detections and extracts temporal features for each track. The temporal features are fed into the Track-Detection Interaction module to aggregate information from surrounding detections and produce association scores and predicted states for each track. The Track State Decoder also takes the temporal features to produce track states in the previous frame t − 1.

I have a complete and full explanation of this advanced algorithm in my free app.

Next, let's see another example:

Example 2: 4D Perception with 3D Kalman Filters

In my article on 3D Object Tracking, I talk about object tracking systems that work with 3D Bounding Boxes. In this case, there are 2 different situations:

- We're still using a monocular camera, and this time tracking 3D Bounding Boxes

- We're using LiDARs, RADARs, or stereo cameras, and thus track 3D Bounding Boxes, but have access to a different type of data (point clouds, RADAR maps, ...)

In my course MASTER 4D PERCEPTION, I do this with LiDARs, and here is the output:

Now that is stunning!

The key elements of this project are:

- We're using a LiDAR detector like Point-RCNN, PV-RCNN, or Cas-A to find 3D Bounding box coordinates

- We then have several possible association criteria such as the 3D IOU, but also the point cloud shapes, the 3D euclidean distance, and more...

- We're using a second order 3D Kalman Filter tracking XYZ in a constant velocity motion model.
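To give an idea of the last element, here is a sketch of the prediction step of a constant-velocity motion model in 3D. I am assuming a state laid out as `[x, y, z, vx, vy, vz]` in meters and meters per second, and a LiDAR running at 10 Hz (`dt = 0.1`); this layout and these numbers are illustrative, not the ones from the course.

```python
def transition_matrix(dt):
    """6x6 constant-velocity matrix F for the state [x, y, z, vx, vy, vz]."""
    F = [[1.0 if row == col else 0.0 for col in range(6)] for row in range(6)]
    for axis in range(3):
        F[axis][axis + 3] = dt  # position += velocity * dt
    return F


def predict(state, dt):
    """Predicted state F @ state (mean only; the covariance step is omitted here)."""
    F = transition_matrix(dt)
    return [sum(F[row][col] * state[col] for col in range(6)) for row in range(6)]


# A car 10 m ahead, 2 m to the right, driving away from us at 8 m/s
state = [10.0, -2.0, 0.5, 8.0, 0.0, 0.0]
next_state = predict(state, dt=0.1)  # assumed 10 Hz LiDAR
print(next_state)
```

The predicted 3D box center is then what gets compared to the new LiDAR detections (3D IOU, 3D distance...), exactly like the 2D case.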

You can learn more about this in my course MASTER 4D PERCEPTION (but warning, it's advanced and I highly recommend you check my introduction course on tracking before going to this one).

You've now reached the end of this article!

Congratulations! Let's do a quick summary of everything you learned:

Summary

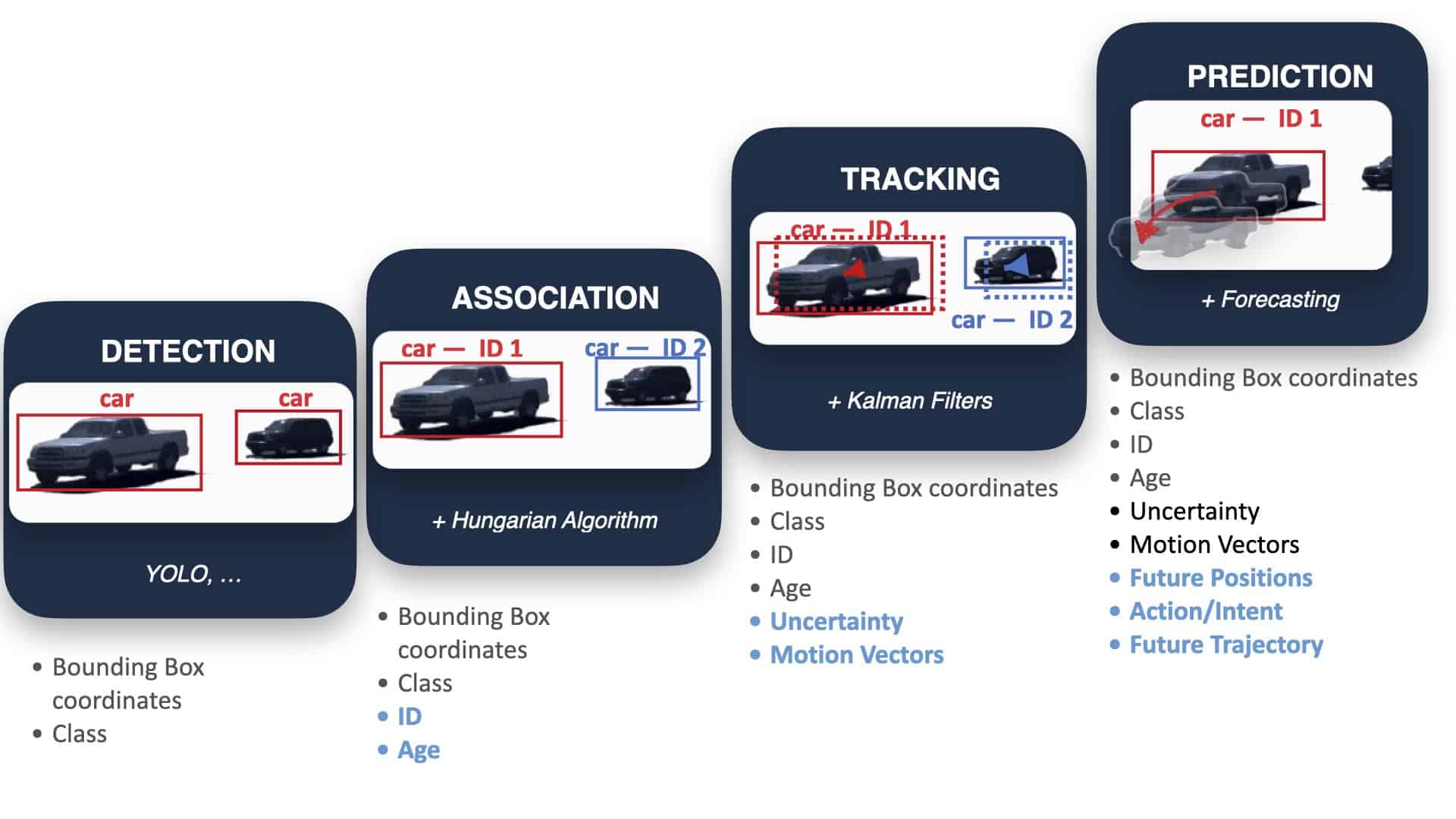

First, there is this image that I built, showing the different stages of tracking:

As you can see, most engineers who only learn to use object detectors miss the benefits of association & tracking: ID, Age, Uncertainty, Motion, and even (if you predict) future positions, actions, trajectory, etc...

In a self-driving car, using tracking instead of simple detection is much more useful, and there are many real time object tracking applications that are creating fantastic startups.

A few other summaries:

- The main elements of object tracking are (1) detection, (2) association, and (3) prediction via Kalman Filter.

- In association, we build a bipartite graph holding the objects of (t-1) and those of (t) and do a matching based on criteria such as euclidean distance, IOU, deep features, etc... While the majority of CV enthusiasts use IOU, I highly recommend a combined cost function instead.

- The Hungarian algorithm is the algorithm responsible for the association, and handles all the edge cases such as new objects, old objects, mis-detections, occlusions, etc...

- Kalman Filters are responsible for the prediction allowing for smoother tracking. In the case of 3D Tracking, we would use 3D Kalman Filters.

- There are two families of trackers: tracking-by-detection, and joint-detection-and-tracking. The second family promises to do it all in a single network, and can be seen as more advanced; yet, today, both are heavily used (and the first category probably more).

- Many companies from the field use tracking, such as Waymo with their Stateful Track Transformers — and you can learn it all on my free app.

Next Steps

If you enjoyed this article on tracking, there are chances you might enjoy these other ones:

- Computer Vision for Multi-Object Tracking: Live Example

- An Introduction to 3D Object Tracking (Advanced)

- Exactly how the Hungarian Algorithm works

You can also see relevant tracking courses here.