8 takeaways from Mobileye's End-To-End talk

Earlier this month, Mobileye held a talk sharing their thoughts on End-To-End (E2E) Learning (including Tesla's E2E system), and why they weren't going to use a fully E2E approach in their own self-driving cars.

Below are 8 ideas I got from the first part:

Idea 1: MTBF: Mean Time Between Failures

Basically, how many hours of driving pass between 2 interventions? Mobileye's claim is that E2E adds uncertainty to that number, while their Compound AI System (CAIS, made of AI modules and bricks) doesn't. The typical range is between 50k hours and 100M hours, so this is a lot of driving.

Mobileye believes Waymo is the only one that has passed the required level, and can therefore really be « eyes off - hands off ».

I'll get back to this point in Idea 7, using Tesla as an illustration.
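To make the metric concrete, here is a minimal sketch of the usual MTBF arithmetic: total driving hours divided by the number of failures (here, interventions). The function name and the fleet numbers are made up for illustration; they don't come from the talk.

```python
def mtbf_hours(total_driving_hours: float, failures: int) -> float:
    """Mean time between failures: driving hours divided by failure count."""
    if failures <= 0:
        raise ValueError("need at least one observed failure to estimate MTBF")
    return total_driving_hours / failures


# Hypothetical fleet log: 200,000 hours driven, 4 interventions.
fleet_mtbf = mtbf_hours(200_000, 4)
print(fleet_mtbf)  # 50000.0
```

With these made-up numbers, the fleet would sit right at the 50k hours lower end of the range mentioned above.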

Idea 2: There are 3 typical self-driving prototypes: Waymo, Tesla, and Mobileye.

- Unlike Tesla, Mobileye says it’s not camera only, but camera centric (they double down on camera, but use other sensors)

- Like Waymo, they use HD Maps, but they claim to have a truly scalable system to build them (called REM; I actually describe it in one of my daily emails here)

- Unlike Tesla, it’s not full E2E, but a CAIS (Compound AI System). AI is a part of the solution but not the full solution.

- Finally comes the MTBF, which is unknown for Tesla, and to be developed for Mobileye

Idea 3: E2E makes 2 main promises: no « glue » code and fully unsupervised learning.

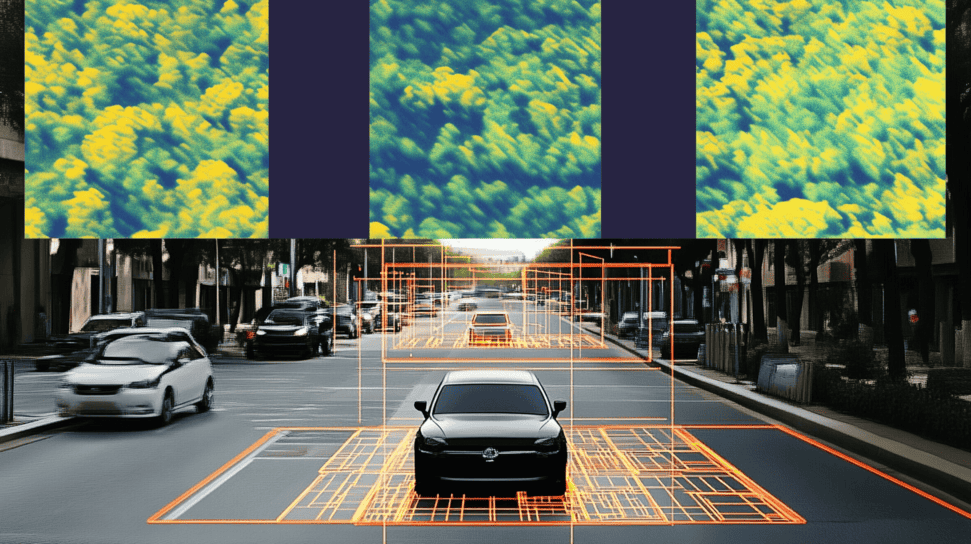

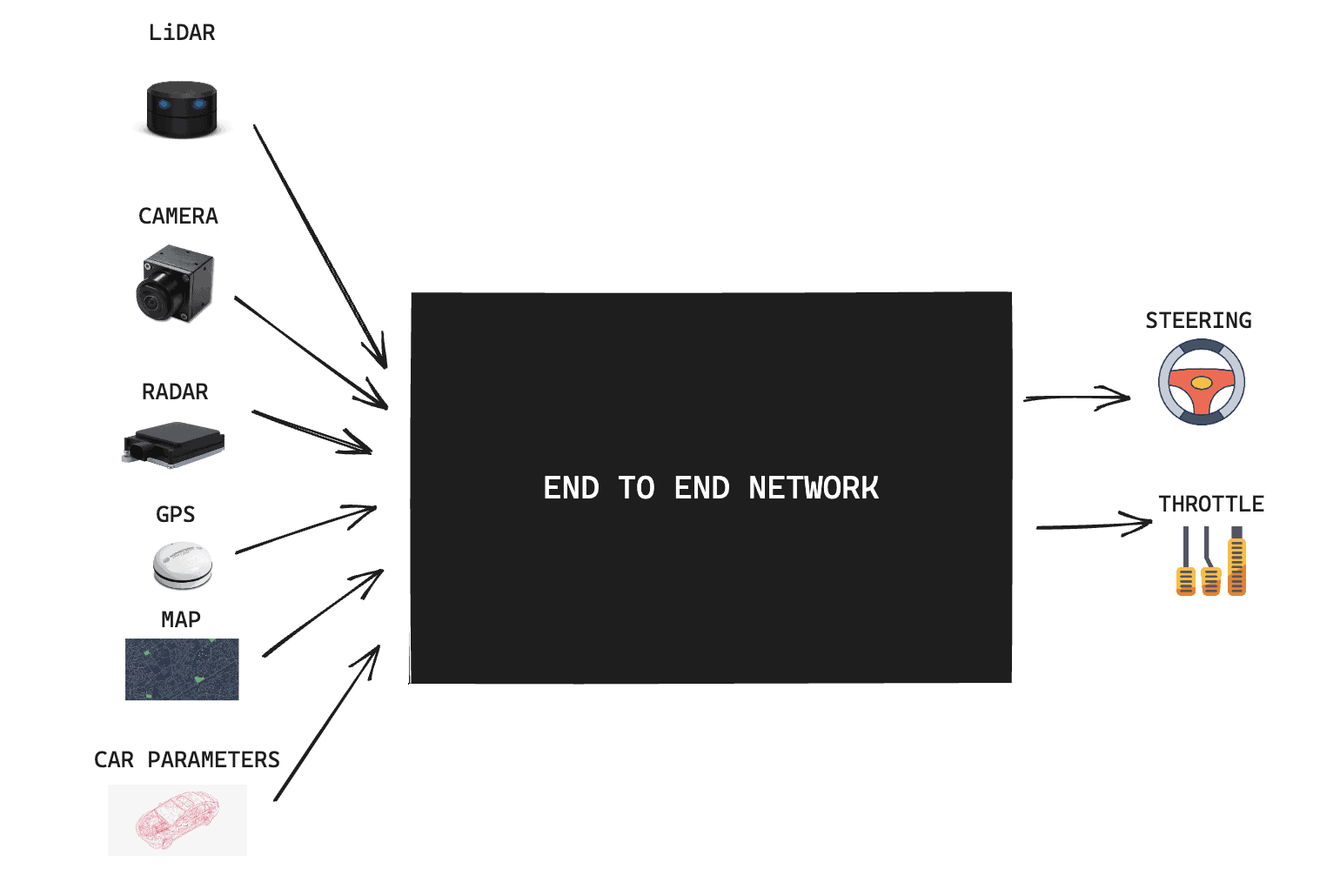

This is the idea behind End-To-End: input > model > output. I actually describe it in this post.

According to Mobileye, the reality is different: there is still glue code, and tons of problems.

Idea 4: The AV Alignment problem

There is still glue to add.

For example, people don't fully stop at STOP signs. So you can't use your data alone with no glue: you need to add some. In E2E, the glue is not "online" (in the algorithms running live), but "offline" (the data is altered, the penalties in the training loss are changed, etc...)
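Here is a minimal sketch of what "offline" glue could look like for the STOP sign example: instead of a runtime rule, each human driving sample gets a weight in the training loss, and rolling stops are weighted down. Everything here (the `Demo` class, the threshold, the weights) is a hypothetical illustration, not Mobileye's or Tesla's actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class Demo:
    """One hypothetical human-driving sample recorded at a STOP sign."""
    min_speed_mps: float  # lowest speed reached at the stop line


# Assumptions for illustration only.
FULL_STOP_THRESHOLD_MPS = 0.1  # below this speed, we count it as a full stop
FULL_STOP_WEIGHT = 1.0         # full stops are imitated normally
ROLLING_STOP_WEIGHT = 0.0      # rolling stops are dropped from the loss


def sample_weight(demo: Demo) -> float:
    """Weight applied to this sample's imitation loss during training."""
    if demo.min_speed_mps <= FULL_STOP_THRESHOLD_MPS:
        return FULL_STOP_WEIGHT
    return ROLLING_STOP_WEIGHT


demos = [Demo(0.0), Demo(1.5), Demo(0.05)]
weights = [sample_weight(d) for d in demos]
print(weights)  # [1.0, 0.0, 1.0]
```

The driving stack stays "input > model > output" at runtime, but a human still decided what the model is allowed to learn from. That decision is the glue.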

Idea 5: The Calculator problem

ChatGPT can calculate 2x2, but if you start asking for long multiplications with many numbers, it'll get confused. So much so that OpenAI shifted to a calculator solution, where they replace the LLM with Python code when they identify a query for complex calculations.

(Even in the picture below, taken today with a Python script, you can see errors!)

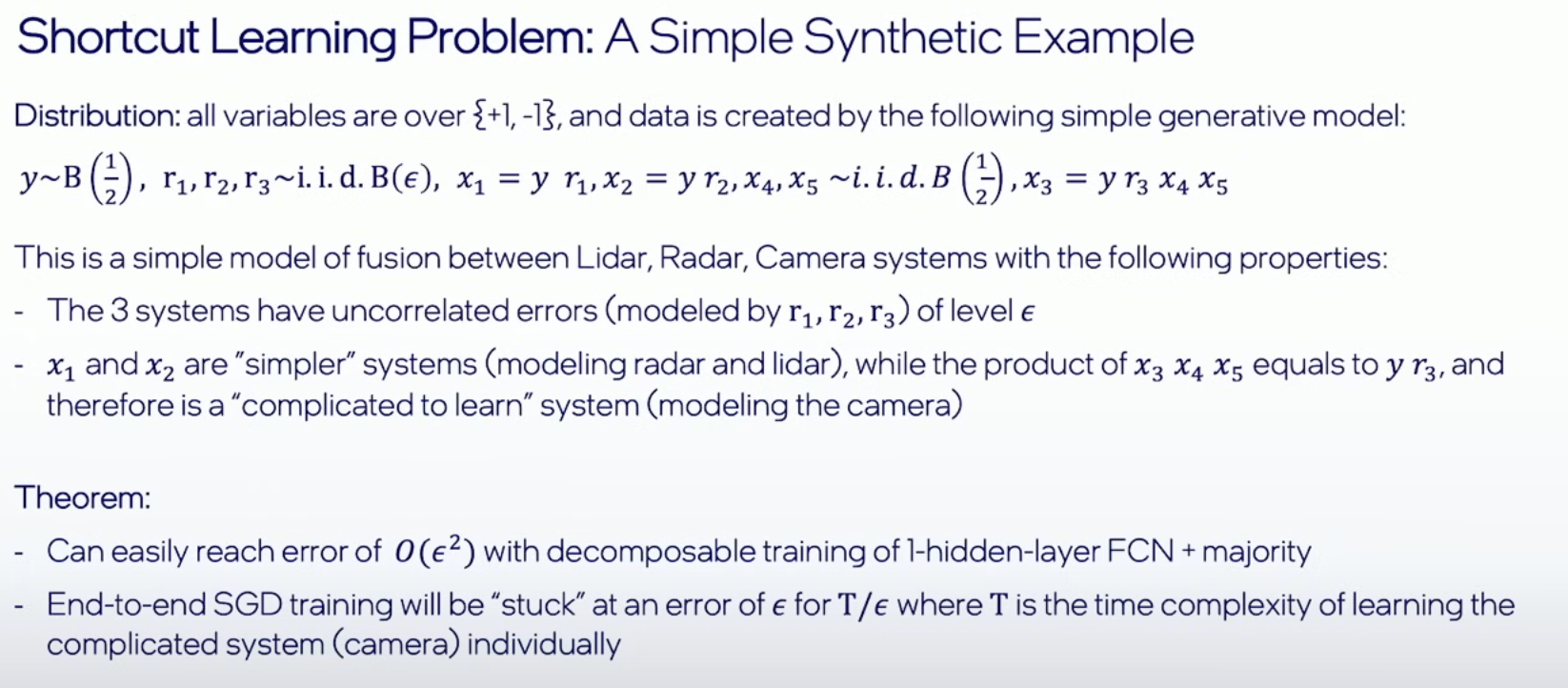
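The calculator idea can be sketched as a small router: if the query parses as plain arithmetic, compute it exactly with code; otherwise hand it to the model. This is only an illustration of the pattern, not OpenAI's implementation; the `answer` function and the placeholder string are assumptions, and no real model is called.

```python
import ast
import operator

# Binary operators supported by the exact-arithmetic path.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _eval(node: ast.AST):
    """Recursively evaluate a parsed arithmetic expression."""
    if isinstance(node, ast.Constant) and type(node.value) in (int, float):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
    raise ValueError("not a plain arithmetic expression")


def answer(query: str) -> str:
    """Route arithmetic to exact code; everything else goes to the model."""
    try:
        tree = ast.parse(query, mode="eval")
        return str(_eval(tree.body))
    except (SyntaxError, ValueError):
        return "<send to language model>"  # placeholder, no real model call


print(answer("2 * 2"))  # 4
print(answer("123456789 * 987654321"))
print(answer("Why is the sky blue?"))  # <send to language model>
```

The point for driving is the same: for the parts of the problem where an exact tool exists, a compound system can call the tool instead of hoping the network has learned it.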

Idea 6: The Shortcut Learning Problem

Camera, LiDAR, and RADAR are all different, so just fusing them all in one big network (the E2E approach) is stupid: it doesn't leverage their unique advantages (RADAR for speed, camera for context, etc...).

Idea 7: The Long Tail Problem

Solving one edge case doesn't guarantee your system will get better. It depends on how rare the edge case is, and how related it is to your driving behavior.

The (publicly available) Tesla FSD Tracker data shows that while there was a significant improvement from FSD 11 (Modular) to FSD 12 (E2E), performance then decreased from 12.3 to 12.5. So we're still looking at gradual improvements rather than a revolution.

Idea 8: Primary Guardian Fallback

In Sensor Fusion, what do you do if 2 sensors disagree?

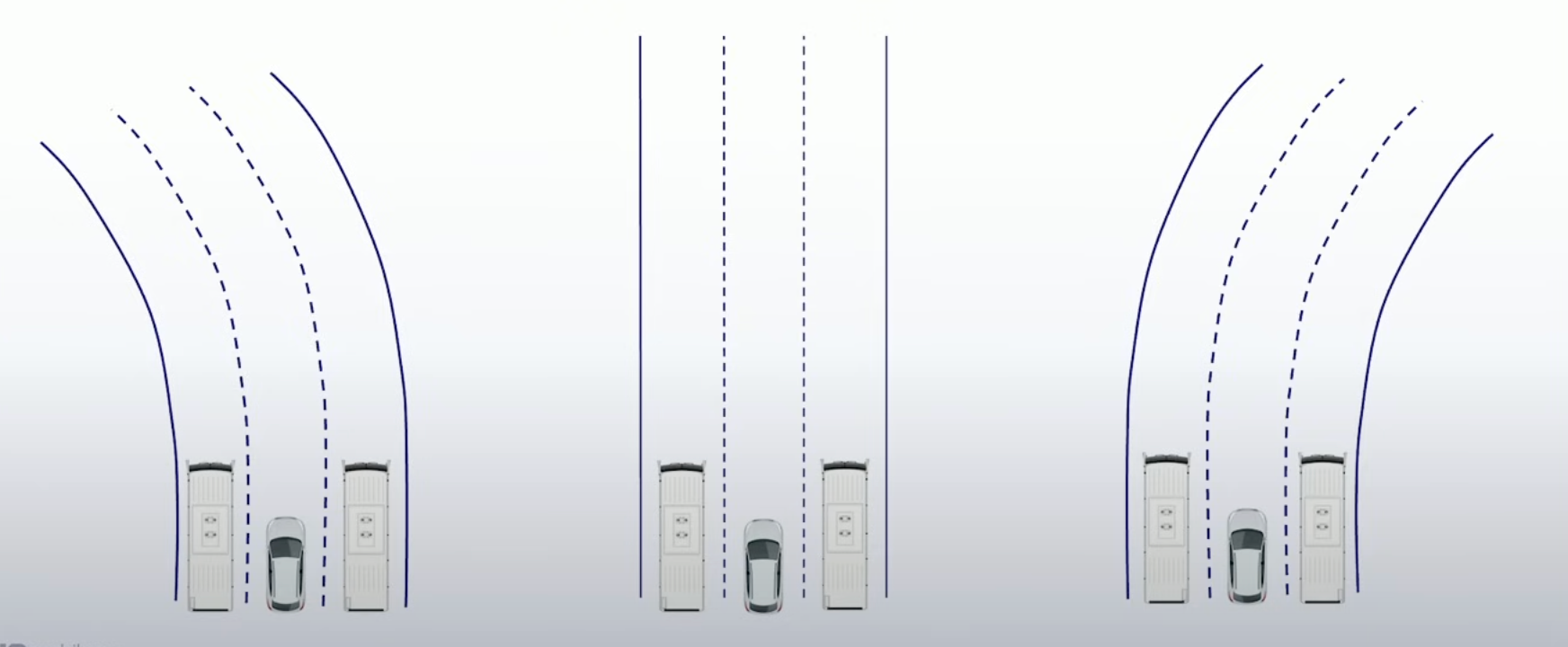

Take a lane detection approach, where we try to classify the type of lane we're in...

How do we get the lane type? You can run a lane detector, or you can project the lanes from your HD Map using accurate localization and mapping.

What if these 2 systems report different lane types? Mobileye has the PGF (Primary Guardian Fallback) system:

- Primary: Your lane detector output

- Fallback: Your HD Map output

- Guardian: A "guardian" E2E Network output

So basically, redundancy!

If the guardian agrees with the primary, take the primary. Otherwise, take the fallback. Mobileye showed that this significantly helps reduce the overall error.
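The decision rule above fits in a few lines. This is a minimal sketch of the rule as described in this post; the function name and the lane type labels are hypothetical.

```python
def pgf_select(primary: str, guardian: str, fallback: str) -> str:
    """Return primary if the guardian agrees with it, otherwise the fallback."""
    return primary if guardian == primary else fallback


# Lane detector and guardian agree: trust the lane detector.
print(pgf_select(primary="solid", guardian="solid", fallback="dashed"))   # solid
# Guardian disagrees with the lane detector: fall back to the HD Map.
print(pgf_select(primary="solid", guardian="dashed", fallback="dashed"))  # dashed
```

With this rule, the output is wrong only in 2 cases: the primary is wrong and the guardian makes the same mistake, or the guardian disagrees with the primary and the fallback is wrong. Both require 2 systems to fail together in a specific way, which is why the redundancy helps.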

And that is it!

Next Steps

I wrote 2 good articles about End-To-End Learning; you can learn more here: