Introduction to Meta Learning and Neural Architecture Search

In this article, you'll get introduced to an advanced Machine Learning concept called Meta Learning. The world of Machine Learning has evolved from the traditional 3 types of Machine Learning (Supervised, Unsupervised, Reinforcement) to an astonishing list of Machine Learning types.

Few-shot learning. Active learning. Federated Learning. Multi-task learning. In this giant pool of algorithms, we have one of the hardest problems to understand in Machine Learning: Meta Learning.

A Note — You can learn more about all the types of Machine Learning in this article.

Before I dive into the explanation of Meta Learning, let me briefly explain what this article is going to be about:

- What is Meta Learning in Machine Learning?

- A Meta Learning Example: Binary Classification

- The Meta Learning Algorithms & Mindmap

- An example of Meta Learning: Neural Architecture Search (Waymo example)

This should be fun, so let's begin right away:

What is Meta Learning in Machine Learning?

Two weeks ago, I was playing Itsy Bitsy Spider with my kid, and suddenly I heard something:

👶🏻: "Pa--pa--"

👀: What the f* did you just say? (I didn't actually curse)

👶🏻: "Papa!"

I was shocked, and super happy!

He said papa!

But then, for two weeks, nothing.

And I realized he hadn't really learned to say it; he had just said it at random...

When a Machine Learning Engineer like me has a kid, he can't help but notice the similarities between human learning and machine learning.

For example, in both cases:

- We know how to teach the human or machine.

- We don't really know how the learning part works. So, I'll know how to teach my son the colour "blue", but I won't know what's going on in his brain when he acquires this concept.

The idea is similar with Machine Learning. And the "learning" part is an entire field: Meta Learning. Meta Learning means learning to learn.

Essentially, we're going to learn from other machine learning algorithms. So we'll be one level of abstraction above — as "meta" often is. Data about data is called metadata. Learning about learning is called meta learning.

It's something we humans struggle with, but that we're trying to reproduce anyway in the machine learning world.

To explain the concept, let's look at a short classification example:

A Meta Learning Example: Binary Classification

In Binary Classification, the output is always 1 or 0. So, is this a picture of a hot-dog or not? Is signing up for the Think Autonomous daily emails a good idea or not? It's always 1 or 0. Yes or No.
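To make the "1 or 0" idea concrete, here is a minimal sketch of a binary classifier. The weights, bias, and feature values are made up for illustration; a real model would learn them from data.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the (0, 1) range."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(features, weights, bias, threshold=0.5):
    """Return 1 or 0 for a single example."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    probability = sigmoid(score)
    return 1 if probability >= threshold else 0


# Hypothetical hand-picked parameters (not learned from any dataset).
weights = [2.0, -1.0]
bias = -0.5

print(predict([1.0, 0.2], weights, bias))  # score = 1.3, so the answer is 1
print(predict([0.1, 1.5], weights, bias))  # score = -1.8, so the answer is 0
```

Whatever happens inside the model, the final answer is always squeezed down to a yes or a no.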

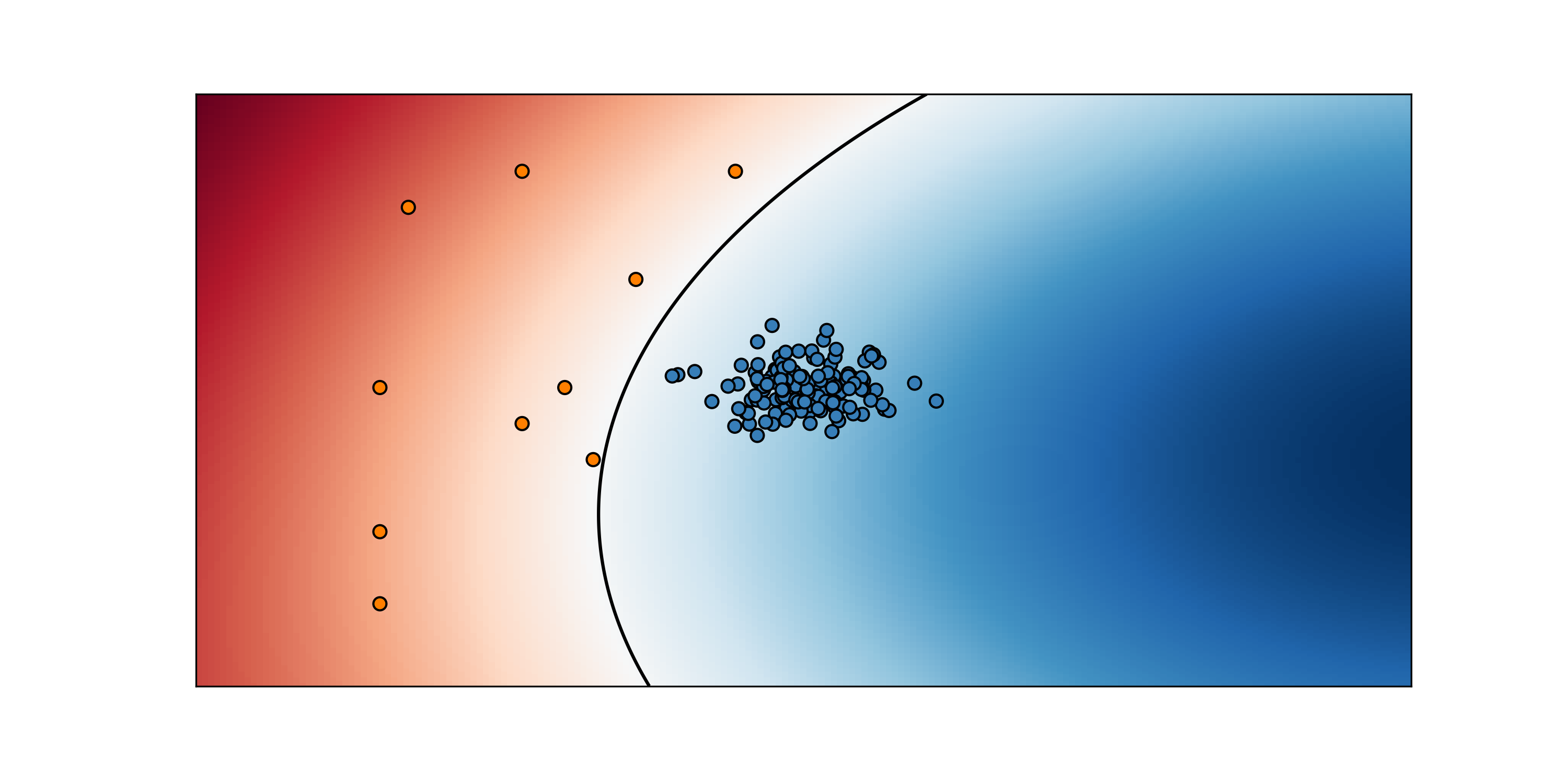

Now, imagine we applied this to image classification:

- Supervised Learning would be learning to classify a picture.

- Meta Learning would be learning image classification itself.

🤯

Notice how "meta" is about learning the concept of classification rather than a classification task.

We therefore could list these types of learning:

- Learning by Examples: Supervised/Unsupervised Learning

- Learning by Experience: Reinforcement Learning

- Learning by Learning: Meta Learning

Now... you may be wondering "How could Meta Learning learn Image Classification?" And "What are we learning exactly?".

Is there a meta-model? a meta-dataset? some meta-labels?

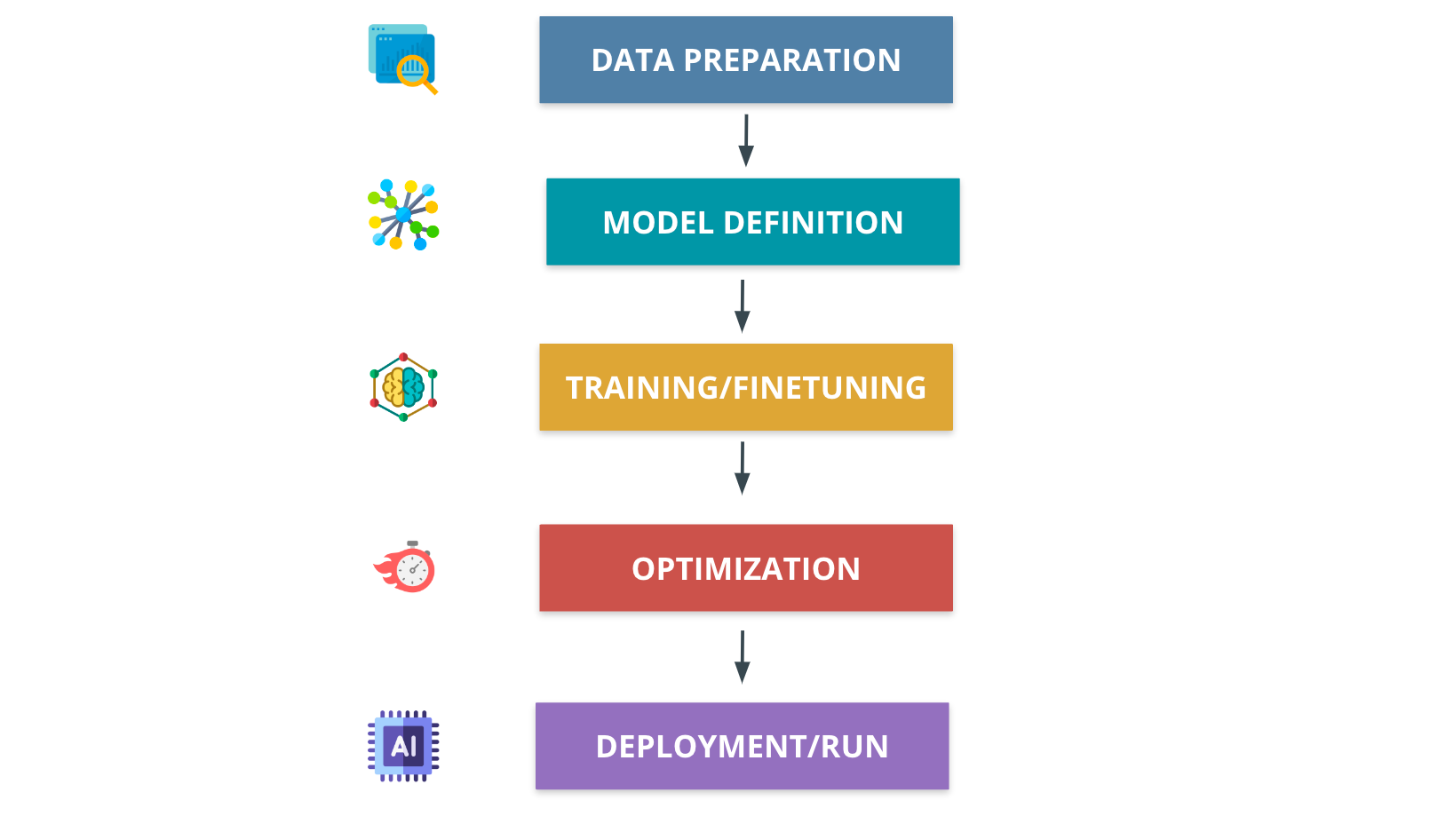

If we look at the usual Machine Learning process, we have these 5 steps:

Preparing the Data, Defining a Model, Training it, Optimizing it, and Deploying to a production environment!

Meta Learning is about automating that second step: model definition.

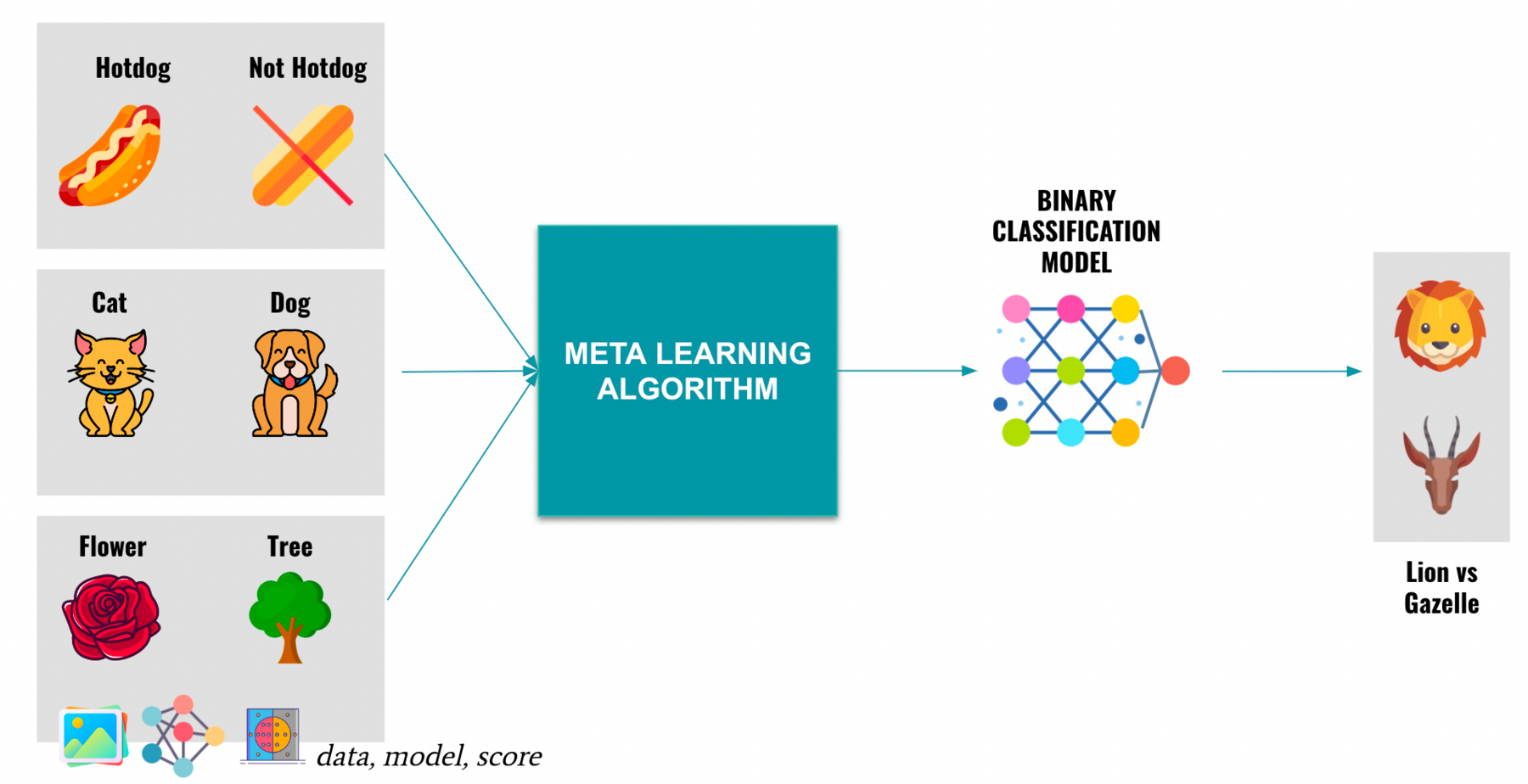

For example, with image classification, we would show different examples of models that learn image classification, and ask a Meta Learning algorithm to find the best binary classification model.

This image will speak for itself:

We are looking at several examples of binary classification, on different datasets, using different models, architectures, parameters, and getting different results.

(Honestly, if you google "meta learning", you won't find an image this cool.)

The entire point is to then find the best possible binary classification algorithm, one that can generalise to any binary classification task. This idea of generalising is interesting, and it even has a name: "Model Agnostic Meta Learning".
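Here is a toy sketch of that "many tasks, many models, keep what generalises" idea. Everything in it is an assumption made for illustration: the synthetic 1-D tasks, the three candidate models (`majority`, `nearest_centroid`, `stump`), and the choice of average validation accuracy as the score. It is plain model selection across tasks, a simplified stand-in for what a real Meta Learning algorithm would do.

```python
import random
from statistics import mean


def make_task(rng, n=40):
    """Synthetic 1-D binary task: label is 1 when x exceeds a task-specific cutoff."""
    cutoff = rng.uniform(-1.0, 1.0)
    data = [(x, int(x > cutoff)) for x in (rng.uniform(-3.0, 3.0) for _ in range(n))]
    return data[: n // 2], data[n // 2:]  # (train, validation)


def fit_majority(train):
    """Baseline: always predict the most frequent training label."""
    label = int(2 * sum(y for _, y in train) >= len(train))
    return lambda x: label


def fit_nearest_centroid(train):
    """Predict the class whose mean training value is closest to x."""
    groups = {0: [], 1: []}
    for x, y in train:
        groups[y].append(x)
    if not groups[0] or not groups[1]:
        return fit_majority(train)  # only one class seen: fall back to the baseline
    c0, c1 = mean(groups[0]), mean(groups[1])
    return lambda x: int(abs(x - c1) < abs(x - c0))


def fit_stump(train):
    """Pick the threshold t that maximises training accuracy of the rule 'x > t'."""
    xs = sorted(x for x, _ in train)
    thresholds = [(a + b) / 2 for a, b in zip(xs, xs[1:])] or xs
    best_t = max(thresholds, key=lambda t: mean([int((x > t) == bool(y)) for x, y in train]))
    return lambda x: int(x > best_t)


def accuracy(model, data):
    return mean([int(model(x) == y) for x, y in data])


def evaluate_across_tasks(candidates, tasks):
    """Average validation accuracy of each candidate over all tasks."""
    return {
        name: mean([accuracy(fit(train), val) for train, val in tasks])
        for name, fit in candidates.items()
    }


rng = random.Random(0)
tasks = [make_task(rng) for _ in range(8)]
candidates = {
    "majority": fit_majority,
    "nearest_centroid": fit_nearest_centroid,
    "stump": fit_stump,
}
scores = evaluate_across_tasks(candidates, tasks)
best_name = max(scores, key=scores.get)
print(scores, "-> best across tasks:", best_name)
```

The point to notice is that the score belongs to a model family across several datasets, not to one trained model on one dataset.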

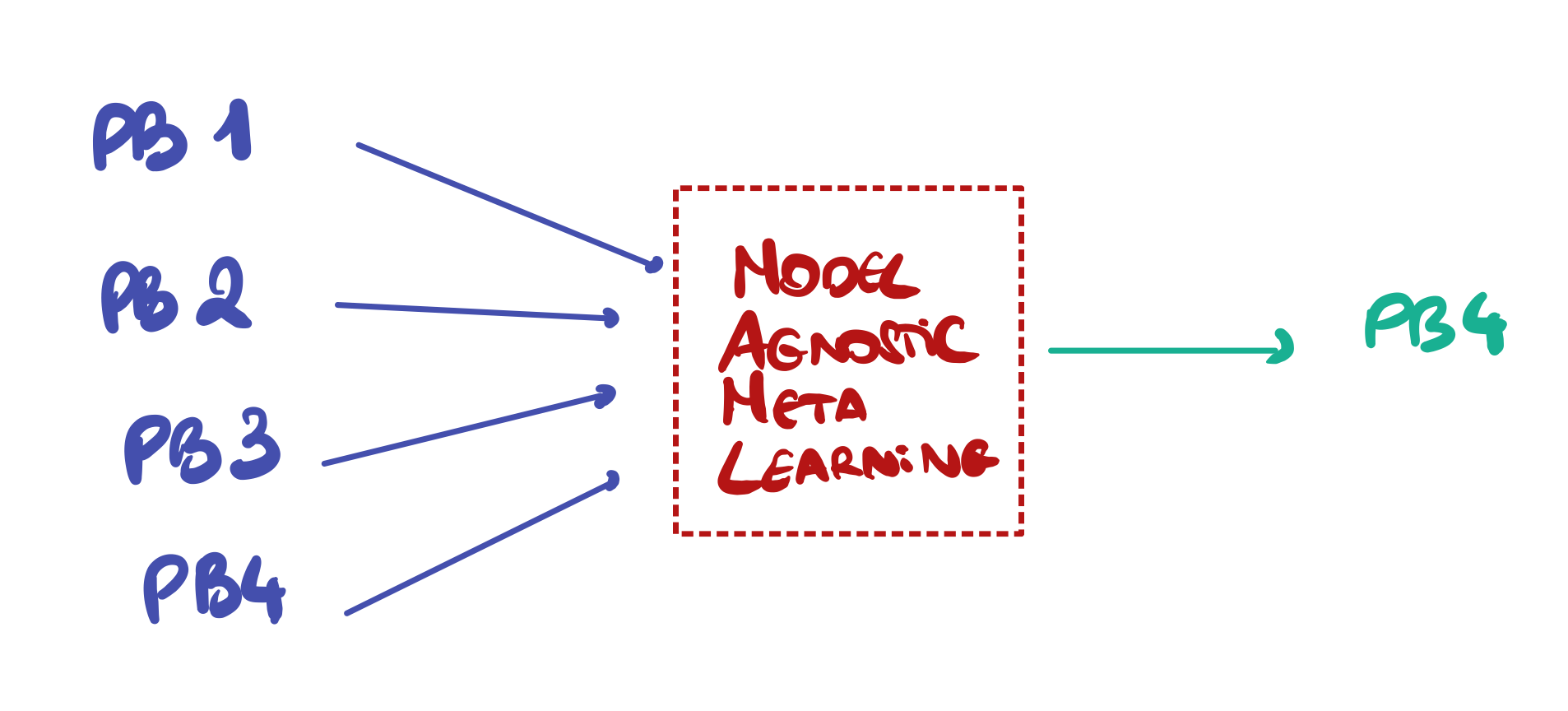

Model Agnostic Meta Learning

As you saw with the binary classification example, we have been able to work on several problems and datasets, and then generalize for the concept of binary classification, allowing us to solve any binary classification task.

And this is the idea of model agnostic meta learning. If your meta learning isn't model agnostic, you're basically stuck with a few similar tasks or datasets. Some papers, such as this one on Fast Adaptation of Deep Networks, explain this idea of using only a small number of examples and a small number of gradient steps to generalise to a totally new problem.
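To give a feel for "a few examples, a few gradient steps", here is a minimal sketch on a deliberately tiny problem. It is a first-order approximation of the MAML idea, not the full algorithm from the paper, and every name and number in it is an assumption: each task is a line `y = slope * x`, the model is a single weight `w`, and `inner_lr`, `meta_lr`, and the task distribution are arbitrary choices.

```python
import random


def loss_and_grad(w, xs, slope):
    """Mean squared error of y_hat = w * x against y = slope * x, and d(loss)/dw."""
    n = len(xs)
    loss = sum((w * x - slope * x) ** 2 for x in xs) / n
    grad = sum(2.0 * (w * x - slope * x) * x for x in xs) / n
    return loss, grad


def adapt(w, xs, slope, inner_lr=0.1, steps=1):
    """Inner loop: a few gradient steps on one task's handful of examples."""
    for _ in range(steps):
        _, grad = loss_and_grad(w, xs, slope)
        w = w - inner_lr * grad
    return w


def sample_points(rng, k=5):
    return [rng.uniform(-1.0, 1.0) for _ in range(k)]


def meta_train(iterations=2000, meta_lr=0.01, seed=0):
    """Outer loop: learn a starting weight that adapts quickly to new tasks."""
    rng = random.Random(seed)
    w = -5.0  # deliberately poor starting point
    for _ in range(iterations):
        slope = rng.uniform(1.0, 3.0)  # sample a task
        support, query = sample_points(rng), sample_points(rng)
        w_task = adapt(w, support, slope)  # adapt on the support examples
        _, query_grad = loss_and_grad(w_task, query, slope)
        # First-order shortcut: treat d(w_task)/dw as 1 instead of differentiating
        # through the inner step, which the full method would do.
        w = w - meta_lr * query_grad
    return w


meta_w = meta_train()

# A new task, 5 examples, 3 gradient steps: meta-learned start vs. the poor start.
rng = random.Random(123)
new_slope, shots, test_points = 2.5, sample_points(rng), sample_points(rng, k=50)
loss_meta, _ = loss_and_grad(adapt(meta_w, shots, new_slope, steps=3), test_points, new_slope)
loss_cold, _ = loss_and_grad(adapt(-5.0, shots, new_slope, steps=3), test_points, new_slope)
print(f"meta-learned start: {meta_w:.2f}, loss after adapting: {loss_meta:.4f} vs {loss_cold:.4f}")
```

What gets learned here is not the answer to any single task, but a starting point from which a few gradient steps are enough.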

So we have enough information to understand the basics:

- It's one abstraction level above supervised learning.

- We want to find Machine Learning models.

- We want to generalise easily.

Now, let's see a mindmap of Meta Learning to dive deeper:

The Meta Learning Algorithms and Mindmap

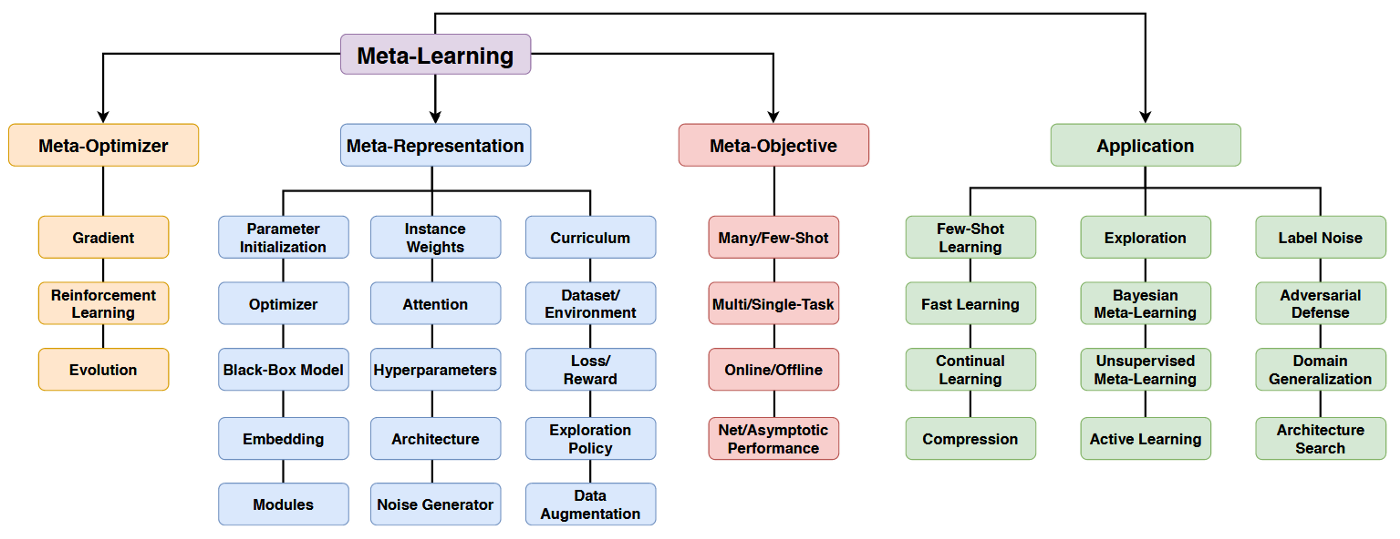

I usually make my own mindmaps, but this one I found here works really well:

- In yellow, the meta-optimizer represents "how" we are going to learn. It can be using gradient descent, reinforcement learning, or even evolutionary algorithms.

- In blue, the meta-representation describes "what" we are learning. What are the parameters of our model? What is the dataset? What are the optimal parameters and weights? What is the optimizer? What, what, what.

- In red, the meta-objective represents "why" we want to use meta-learning. So, is our problem single-task or multi-task? Will we use few-shot or many-shot learning? Online or Offline? etc...

- In green, the applications are the meta learning tasks, the outcome.
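One way to read the four colours is as four fields you fill in to describe any meta learning setup. The sketch below is only a note-taking device: the class name, the field names, and the two example entries are my own illustrative choices, not something taken from the mindmap or from any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetaLearningSetup:
    meta_optimizer: str       # yellow, "how": gradient descent, RL, evolution...
    meta_representation: str  # blue, "what": initial weights, optimizer, architecture...
    meta_objective: str       # red, "why": few-shot or many-shot, single- or multi-task...
    application: str          # green: the task we end up solving


# Illustrative entries only, not a description of any specific system.
few_shot_example = MetaLearningSetup(
    meta_optimizer="gradient descent",
    meta_representation="initial weights",
    meta_objective="few-shot, multi-task",
    application="few-shot learning",
)
architecture_example = MetaLearningSetup(
    meta_optimizer="reinforcement learning",
    meta_representation="architecture",
    meta_objective="many-shot, single-task",
    application="architecture search",
)

for setup in (few_shot_example, architecture_example):
    print(setup)
```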

Notice some of the elements listed:

- Few-Shot Learning

- Compression

- Active Learning

- Architecture Search

- ...

It means that we can use Meta Learning with other types of Machine Learning algorithms! For example, we can combine Meta Learning with Neural Architecture Search.

But what is Neural Architecture Search?

An example of Meta Learning: Neural Architecture Search

There are lots of problems to solve in the Deep Learning world, but NAS is probably one of the hardest of them all, if not the hardest. And as you'll see, it's closely tied to Meta Learning.

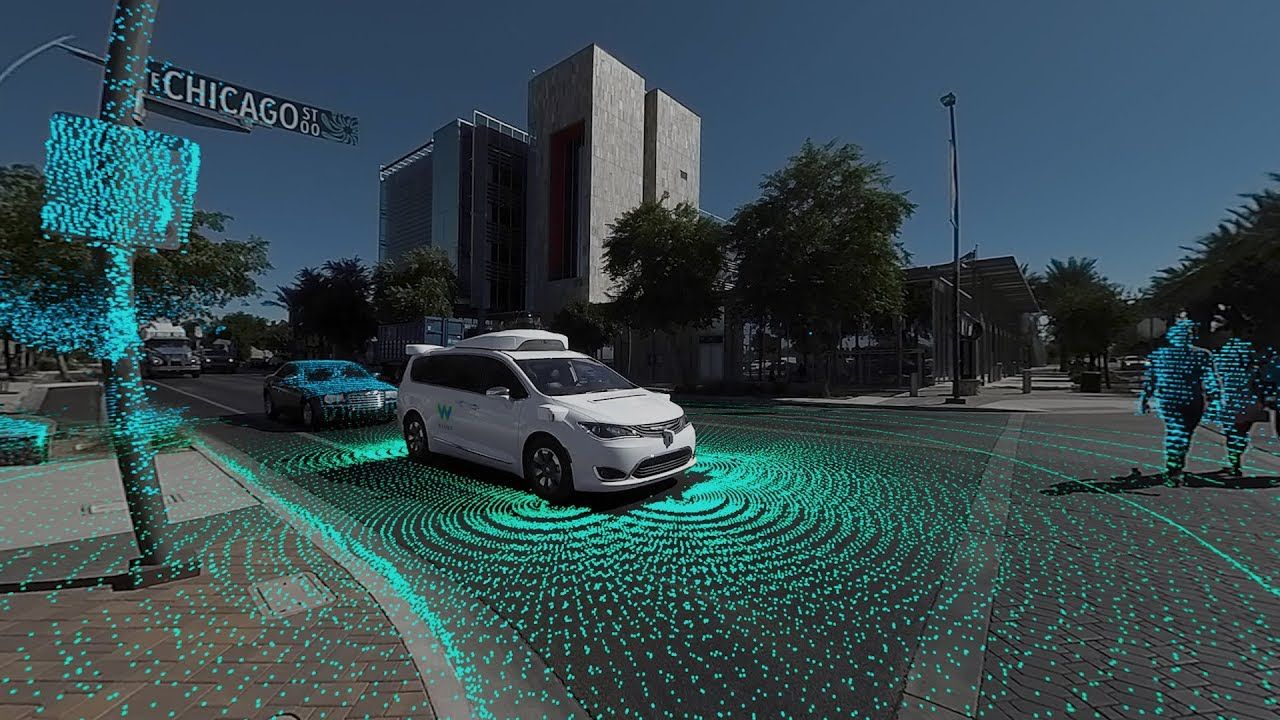

It's something coming from AutoML, and last time I got Waymo (Google's self-driving car division) on the phone, they told me they were actively working on Neural Architecture Search.

So, what is Neural Architecture Search?

Let's have a look with an example coming from Waymo in my article "How Google's Self-Driving Car Work".

Neural Architecture Search at Waymo

NAS is about finding neural network architectures. In the case of Waymo, this technique helped them find architectures with a 10% lower error rate for the same latency, or architectures that are 20-30% faster for the same quality.

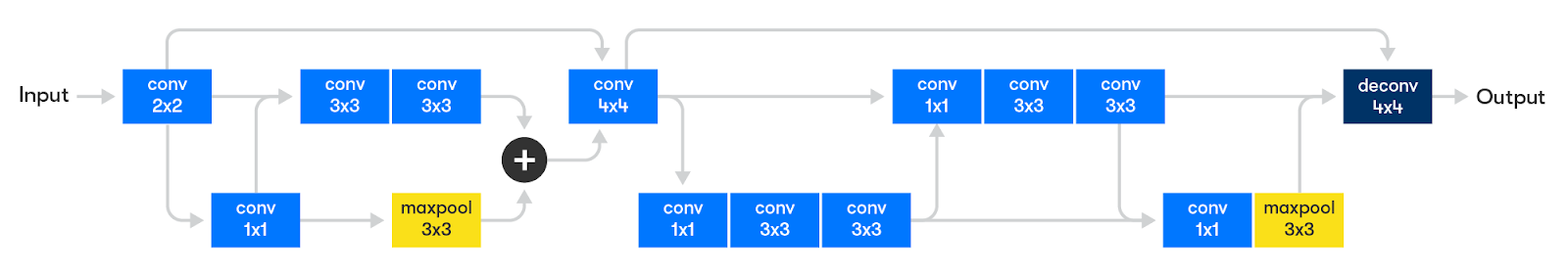

Wow, so how does that work? Here is a graph from Waymo showing how the idea of NAS works.

From left to right:

- We start with a "learning task" to solve

- We choose a NAS algorithm (the algorithm that will find the architecture) and a proxy task (a task doing something similar to what we want to do)

- And then, in the AutoML block: we explore over 10,000 architectures using reinforcement learning and random search.

- We select the 100 most promising models and train them.

- We finally use the best model in Waymo's cars.
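The funnel described in these steps (explore many candidates cheaply, fully train a shortlist, keep the winner) can be sketched in a few lines. This is a toy under loud assumptions: it uses random search only, an "architecture" is just a hypothetical depth and width, and `full_quality` and `proxy_quality` are made-up scoring functions standing in for real training. It is not Waymo's system.

```python
import random


def sample_architecture(rng):
    """Draw one candidate from a tiny, hypothetical search space."""
    return {"depth": rng.randint(1, 20), "width": rng.choice([16, 32, 64, 128, 256])}


def full_quality(arch):
    """Stand-in for expensive full training; by construction it peaks at depth 12, width 128."""
    return 1.0 - abs(arch["depth"] - 12) / 20 - abs(arch["width"] - 128) / 512


def proxy_quality(arch, rng):
    """Stand-in for the proxy task: cheap to compute, but noisy."""
    return full_quality(arch) + rng.gauss(0.0, 0.05)


def search(num_explored=10_000, num_finalists=100, seed=0):
    rng = random.Random(seed)
    # 1. Explore many architectures and score each one on the cheap proxy task.
    explored = [sample_architecture(rng) for _ in range(num_explored)]
    scored = [(proxy_quality(arch, rng), arch) for arch in explored]
    # 2. Keep only the most promising candidates.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    finalists = [arch for _, arch in scored[:num_finalists]]
    # 3. "Fully train" the finalists and keep the best one.
    return max(finalists, key=full_quality)


best = search()
print(best, full_quality(best))
```

The proxy task is what makes this affordable: the expensive evaluation only runs on the shortlist, not on all 10,000 candidates.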

This way, Waymo is able to get the results I talked about before. Now, let's zoom in on that green "AutoML" block that explores 10,000 architectures.

Notice how it's similar to Meta Learning. We provide a task and a search algorithm (like we did before with the meta-optimizers), and the NAS algorithm will search for tens of thousands of architectures, select the best, and export it.

If we dig further into the idea of NAS, we'll see that it's a combination of 3 ideas:

- Search Space: What types of architectures will we try? Skip connections? Space reduction? Layer stacking?

- Search Strategy: How are we going to explore the search space? Should we try it all at random? Using Bayesian optimization? Reinforcement Learning? Evolutionary techniques? Gradient-based methods?

- Performance Estimation Strategy: How will we evaluate the architectures our NAS algorithm proposes? Will we measure performance using a basic training/validation dataset? Should we train everything from scratch? Maybe using few-shot learning?
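These three ideas map nicely onto three separate pieces of code. The sketch below is a toy with invented names and numbers: `SEARCH_SPACE` lists a few hypothetical options, `estimate_performance` is a made-up formula standing in for real training and validation, and the search strategy is a very simple evolutionary loop (one of several options listed above).

```python
import random

# Search space: which architectures are we allowed to try? (hypothetical options)
SEARCH_SPACE = {
    "num_layers": [2, 4, 8, 16],
    "use_skip_connections": [False, True],
    "downsample_factor": [1, 2, 4],
}


def random_architecture(rng):
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def mutate(arch, rng):
    """Copy an architecture and re-draw one of its choices."""
    child = dict(arch)
    name = rng.choice(list(SEARCH_SPACE))
    child[name] = rng.choice(SEARCH_SPACE[name])
    return child


# Performance estimation strategy: how good is a candidate?
def estimate_performance(arch):
    """Made-up score; a real system would train the network and validate it."""
    score = 0.5 + 0.02 * arch["num_layers"]
    if arch["use_skip_connections"]:
        score += 0.1
    return score - 0.03 * abs(arch["downsample_factor"] - 2)


# Search strategy: how do we move through the search space?
def evolutionary_search(generations=30, population_size=8, seed=0):
    rng = random.Random(seed)
    population = [random_architecture(rng) for _ in range(population_size)]
    history = []  # best score seen at each generation
    for _ in range(generations):
        population.sort(key=estimate_performance, reverse=True)
        history.append(estimate_performance(population[0]))
        parents = population[: population_size // 2]  # the best half survives
        children = [mutate(rng.choice(parents), rng) for _ in parents]
        population = parents + children
    return max(population, key=estimate_performance), history


best_arch, history = evolutionary_search()
print(best_arch, estimate_performance(best_arch))
```

Swapping random search, Bayesian optimization, or reinforcement learning in for `evolutionary_search` would leave the other two pieces untouched, which is why it helps to think of them separately.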

For example, here is a neural architecture discovered by Waymo's NAS algorithm:

Meta Learning and Neural Architecture Search

You might be wondering, what is the connection between Meta Learning and Neural Architecture Search?

And it's pretty difficult to understand, so here is a bit of vocabulary:

- AutoML: Automated Machine Learning.

- Neural Architecture Search: An approach to discover the best architecture to solve a specific problem.

- Meta Learning: A field of study where we discover an architecture to solve a general task.

For the classification example:

- In Meta Learning, we show lots of Datasets to learn a concept, like binary classification.

- In Neural Architecture Search, we sample lots of different architectures to learn a specific task, like point cloud segmentation. Notice how we don't learn the concept of segmentation, but rather "point cloud segmentation" and even "point cloud segmentation for autonomous driving".

This is why Neural Architecture Search was shown in the Mindmap as a type of Meta Learning. It's not as "general" as Meta Learning; it's a specific technique used to find an architecture.

Conclusion

Meta Learning is a type of Machine Learning where we are learning to learn. Just like metadata is data about data, meta learning is learning about learning. The output of a Meta Learning algorithm is a machine learning model. This is what we want to automate: the search for a model.

In a way, it's in the same spirit as Ensemble Learning and Random Forests, where we train tons of decision trees, except that here we keep only the best performing candidate instead of combining them all. The other difference is that we're doing this with Neural Networks, and we therefore have a gazillion possibilities and parameters.

When you think about it, this is the next logical step in our evolution. Why would we manually search for algorithms, when we can design algorithms to do it for us?

Still, Meta Learning remains one of the most advanced types of Machine Learning there is, and it can become even more difficult when you combine it with other types of learning like NAS, Few-Shot Learning, etc...