The Ultimate Guide to Medical Image Segmentation with Deep Learning (2D and 3D)

On September 23, 1999, NASA’s Mars Climate Orbiter, a $125 million spacecraft, was set to enter orbit around Mars to study its climate and atmosphere. But just as it approached the planet, something went terribly wrong. Instead of entering a stable orbit, the spacecraft plunged into the Martian atmosphere and was destroyed.

After an investigation, NASA found a unit mismatch: the navigation team at NASA’s Jet Propulsion Laboratory (JPL) expected metric units (newton-seconds), while ground software supplied by Lockheed Martin, the spacecraft’s builder, produced results in imperial units (pound-force seconds). The resulting navigation errors sent the spacecraft far too low into the Martian atmosphere, causing a $125 million loss.

Human errors happen every day, in all sorts of domains. In 2016, an alarming report from Johns Hopkins estimated that medical errors (including misdiagnoses) cause over 250,000 deaths annually in the U.S., which would make them the third leading cause of death. Some of these errors stem from the analysis of medical images, such as MRIs, X-rays, CT scans, and more.

In this article, I would like to show you how Medical Image Segmentation can be used to counter this problem, and I'll do it in 3 points:

- 2D Medical Image Segmentation

- 3D Medical Image Segmentation

- Examples/Demo

Let's get started...

Intro to 2D Medical Image Segmentation

In 2019, I hosted the biggest AI healthcare hackathon ever held, running simultaneously in over 20 cities! The goal was to bring together companies, healthcare groups, and engineers to build healthcare solutions using deep learning. After 48 hours of coding, the winning team would take home 10,000 USD, the second 4,000 USD, and teams 3 through 6 would each win 2,500 USD!

Great computer vision projects came out of it. In fact, Paris (my city) finished second with Spot Implant, a "Shazam for dental implants" that later became a startup. At the time, everybody was working on 2D images: we had projects like skin melanoma detection, X-ray segmentation, brain segmentation, and more...

Let me show you a few tasks in Medical Image Segmentation, and then we'll look at algorithms.

2D Medical Image Segmentation Tasks

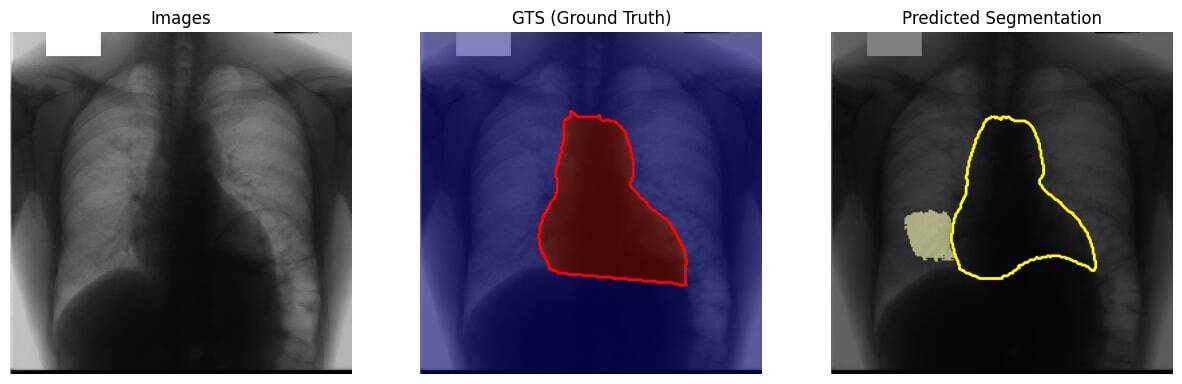

X-Ray (the most common)

First, we have X-rays. An X-ray is a 2D projection of the body: we often see bones and organs on it, and it's the most common image type you'll find in deep learning for healthcare. Using medical image segmentation, we can assist doctors in finding bone fractures, lung diseases, and other abnormalities. It can also help screen large volumes of X-rays for tuberculosis, which is particularly useful in low-income countries with limited access to radiologists.

This is the best-known application among deep learning engineers, so let me show you a few other applications of segmentation...

Dermoscopy Segmentation (skin lesion segmentation)

Dermoscopy segmentation was the health hackathon's top pick. It's all about using medical image segmentation to spot and separate skin lesions in dermoscopic images. By applying deep learning on medical images, we can quickly and accurately detect skin conditions like melanoma. This helps dermatologists diagnose and treat patients faster and manage large amounts of data more efficiently.

Let's see one or two more...

Mammography Segmentation

Mammograms are specialized X-ray images designed to reveal the inner structure of breast tissue. These images typically come in a flat, 2D format, capturing the breast from multiple angles to ensure a comprehensive view. The details in mammograms can show everything from dense tissue patterns to potential abnormalities like lumps or calcifications.

Look at the image below: the role of the doctor or radiologist is to find these highlighted areas. Image segmentation is there to assist the doctor, so they are not alone in the high-stakes task of spotting problems (of course, doctors do much more than spotting, from assessing how serious a calcification is to choosing a treatment, and so on...).

Other Types: Ultrasound 👶🏽, Endoscopy 🤢, and more...

We just saw three types: X-rays, dermoscopy, and mammography. There are others, such as ultrasound images (prenatal scans of a baby, for example), which can be 2D or 3D, endoscopy images, and more... The image below shows many 2D segmentation applications:

So how do you produce these segmentations? What do you use? Let's take a look...

2D Medical Image Segmentation Models

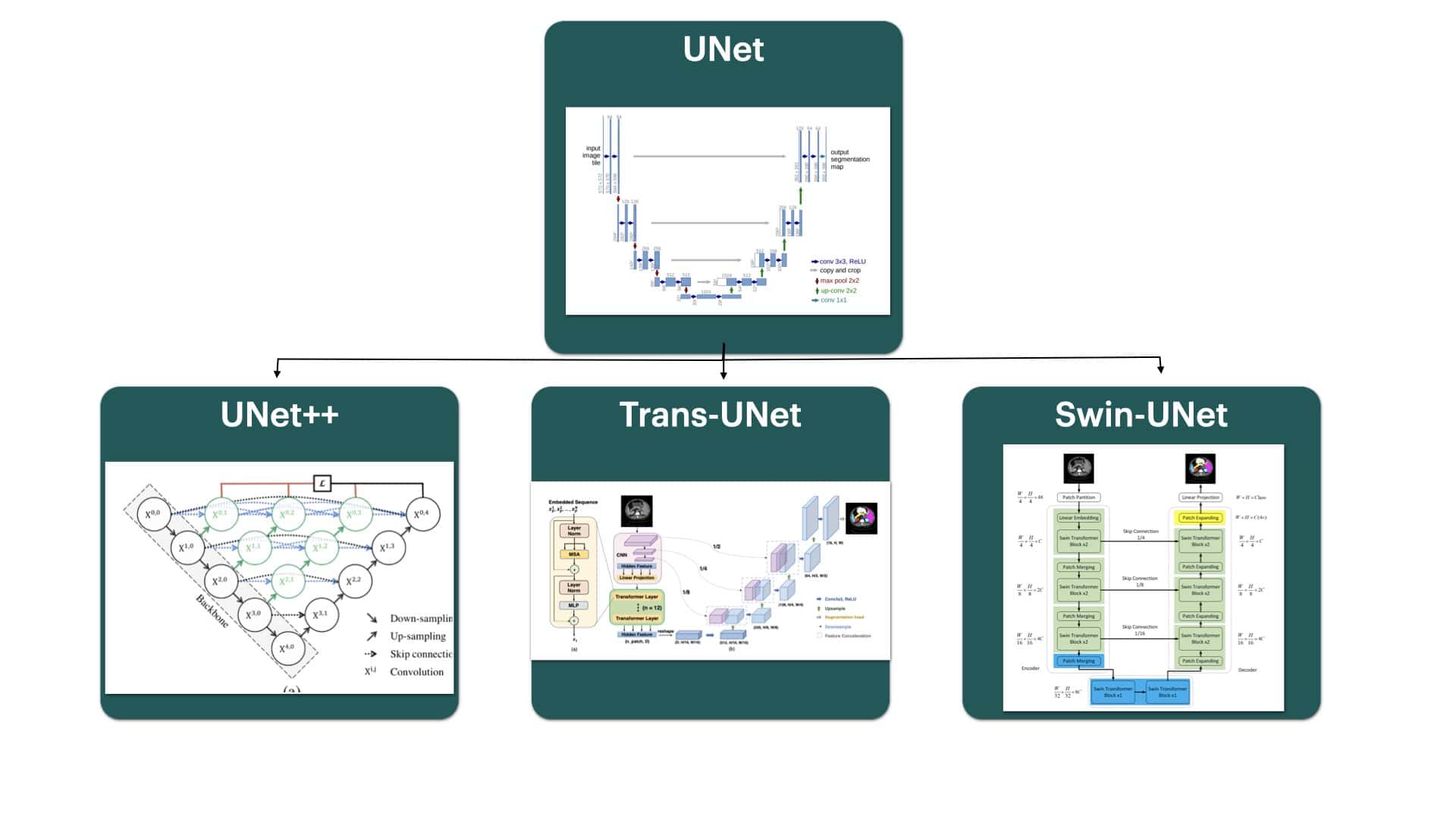

Ever heard of UNet? You know, that 2015 model from the paper "U-Net: Convolutional Networks for Biomedical Image Segmentation". It may be from 2015, but it's a great way to start! In fact, there have been lots of improvements on UNet, such as UNet++, TransUNet, and Swin-Unet, all keeping that "U" shape (an encoder that downsamples the image, a decoder that upsamples it back, and skip connections between the two) but using different feature extractors, like CNNs or Swin Transformers.
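To make the "U" concrete, here is a toy, untrained U-Net forward pass written in plain NumPy: an encoder (convolutions and pooling), a bottleneck, a decoder (upsampling and convolutions), and a skip connection that concatenates encoder features into the decoder. It's a sketch under simplifying assumptions, not the original architecture: weights are random, there is a single resolution level instead of four, convolutions are zero-padded, and upsampling is nearest-neighbour rather than learned. All function names are hypothetical; in practice you'd build and train this in a deep learning framework such as PyTorch.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

rng = np.random.default_rng(0)

def conv_params(c_in, c_out, k=3):
    """Random (untrained) conv weights with He initialization."""
    w = rng.normal(0.0, np.sqrt(2.0 / (c_in * k * k)), size=(c_out, c_in, k, k))
    return w, np.zeros(c_out)

def conv3x3(x, params):
    """3x3 'same' convolution on a (C, H, W) array, followed by ReLU."""
    w, b = params
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    windows = sliding_window_view(padded, (3, 3), axis=(1, 2))  # (C, H, W, 3, 3)
    out = np.einsum("chwij,ocij->ohw", windows, w) + b[:, None, None]
    return np.maximum(out, 0.0)

def max_pool2(x):
    """2x2 max pooling: halves H and W (the 'down' side of the U)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample2(x):
    """Nearest-neighbour 2x upsampling (the 'up' side of the U)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def make_tiny_unet(in_ch=1, base=8, n_classes=2):
    return {
        "enc": [conv_params(in_ch, base), conv_params(base, base)],
        "bottleneck": [conv_params(base, 2 * base), conv_params(2 * base, 2 * base)],
        "up": conv_params(2 * base, base),
        "dec": [conv_params(2 * base, base), conv_params(base, base)],
        "head": rng.normal(0.0, 0.1, size=(n_classes, base)),
    }

def tiny_unet(image, net):
    """One-level U-Net: image (C, H, W) with even H, W -> logits (n_classes, H, W)."""
    x = image
    for p in net["enc"]:
        x = conv3x3(x, p)
    skip = x                                    # saved for the skip connection
    x = max_pool2(x)
    for p in net["bottleneck"]:
        x = conv3x3(x, p)
    x = conv3x3(upsample2(x), net["up"])        # back to full resolution
    x = np.concatenate([skip, x], axis=0)       # skip connection: concat along channels
    for p in net["dec"]:
        x = conv3x3(x, p)
    return np.einsum("chw,kc->khw", x, net["head"])  # 1x1 conv -> per-pixel class scores

net = make_tiny_unet()
image = rng.random((1, 64, 64))                 # one grayscale 64x64 "X-ray"
logits = tiny_unet(image, net)
mask = logits.argmax(axis=0)                    # per-pixel class label
print(logits.shape, mask.shape)                 # (2, 64, 64) (64, 64)
```

Stacking more down/up levels and training the weights on labeled masks gives you the real model; the skip connection is what lets the decoder recover fine boundaries that pooling throws away.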

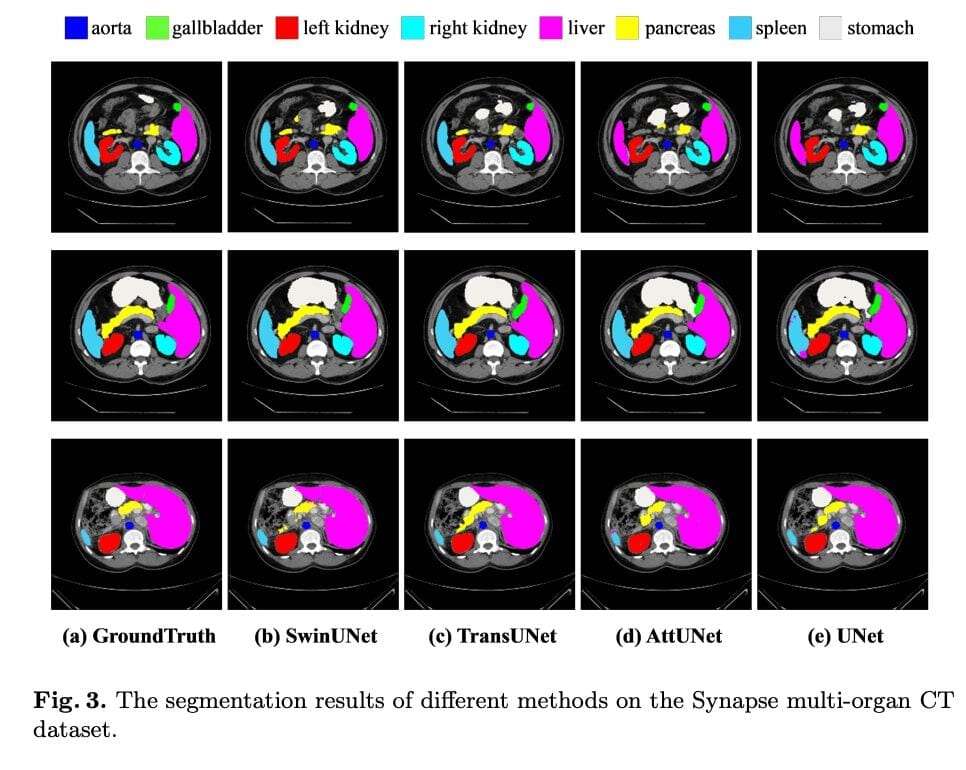

This is one family of semantic image segmentation algorithms, and here is what the results look like:

To quantify performance, these algorithms are typically evaluated with the Dice similarity coefficient (DSC) and the average Hausdorff distance (HD).

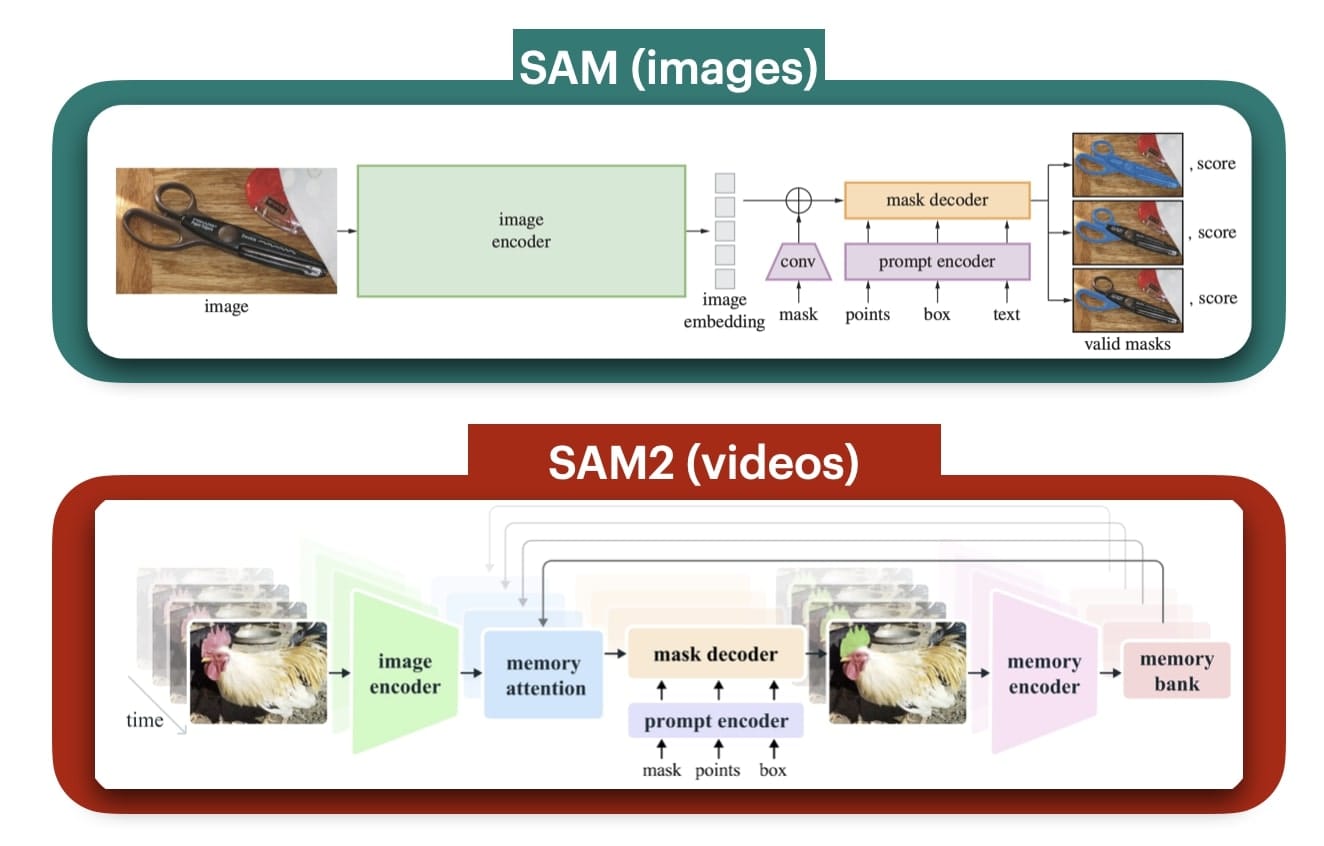
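For reference, the Dice coefficient of a predicted mask $A$ and a ground-truth mask $B$ is $2|A \cap B| / (|A| + |B|)$ (1 means perfect overlap), while the Hausdorff distance is the largest distance from any point of one mask to the closest point of the other, taken in both directions (lower is better). Here's a minimal NumPy sketch of both; the function names are my own, and it uses every foreground pixel by brute force, whereas benchmark code usually works on boundary points and often reports a 95th-percentile variant.

```python
import numpy as np

def dice(pred, gt):
    """Dice similarity coefficient between two binary masks (1.0 = perfect overlap)."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    total = pred.sum() + gt.sum()
    if total == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2.0 * np.logical_and(pred, gt).sum() / total

def hausdorff(pred, gt):
    """Symmetric Hausdorff distance (in pixels) between the foregrounds of two masks.

    Brute force over all foreground pixels: fine for small masks, too slow for full CT volumes.
    """
    a = np.argwhere(pred)  # (N, ndim) coordinates of foreground pixels
    b = np.argwhere(gt)
    if len(a) == 0 or len(b) == 0:
        return float("inf")
    d = np.sqrt(((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1))  # (N, M) pairwise distances
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))

pred = np.zeros((10, 10), dtype=np.uint8)
pred[2:6, 2:6] = 1      # 4x4 predicted square
gt = np.zeros((10, 10), dtype=np.uint8)
gt[3:7, 3:7] = 1        # 4x4 ground truth, shifted by one pixel diagonally
print(dice(pred, gt))       # 0.5625  (2 * 9 overlapping pixels / 32)
print(hausdorff(pred, gt))  # 1.414... (sqrt(2): the corners are one diagonal step apart)
```

The "average" HD in papers is this per-case (or per-organ) distance averaged over the test set.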

These are great, but what happens when you don't have large amounts of labeled data? In healthcare, getting access to labeled, free-to-use data isn't easy, especially for diseases that are specific to certain hospitals, and so on... In these cases, you can use more "foundational" segmentation models such as SAM (Segment Anything) or SAM 2. These were trained on massive, diverse datasets (SAM's SA-1B dataset contains about 11 million images and over 1 billion masks), so they generalize better to images they have never seen.

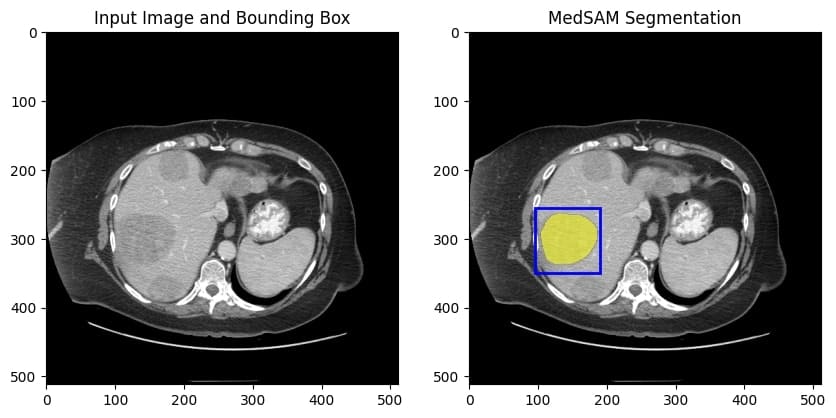

For example, MedSAM, a medical version of SAM (Segment Anything), is what I used for the images above. It's the regular SAM, fine-tuned on medical images to boost segmentation performance. The results are quite strong, and it can even take a bounding box around your region of interest as a prompt and return the segmented mask:
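A box prompt is just four pixel coordinates, (x_min, y_min, x_max, y_max). Below is a small sketch of how you might prepare one: a hypothetical helper that derives a box from a reference mask (handy when testing on labeled data), and another that rescales it to the model's input resolution. The 1024 × 1024 input size is an assumption based on the resolution SAM's image encoder works at; the actual MedSAM model call is left out, since it depends on the checkpoint and code you use.

```python
import numpy as np

def mask_to_box(mask, margin=0):
    """Hypothetical helper: (x_min, y_min, x_max, y_max) box around a binary mask, optionally enlarged."""
    ys, xs = np.nonzero(mask)
    if len(xs) == 0:
        raise ValueError("mask is empty")
    h, w = mask.shape
    return np.array([
        max(xs.min() - margin, 0),
        max(ys.min() - margin, 0),
        min(xs.max() + margin, w - 1),
        min(ys.max() + margin, h - 1),
    ], dtype=float)

def rescale_box(box, image_hw, target=1024):
    """Map a box from original (H, W) pixel coordinates to a target x target model input (assumed size)."""
    h, w = image_hw
    return box / np.array([w, h, w, h], dtype=float) * target

mask = np.zeros((100, 200), dtype=np.uint8)
mask[20:40, 50:80] = 1                     # lesion: rows 20-39, columns 50-79
box = mask_to_box(mask)                    # [50, 20, 79, 39]
box_1024 = rescale_box(box, mask.shape)    # approx. [256, 204.8, 404.48, 399.36]
```

In a real workflow, the radiologist draws the box instead of deriving it from a mask, and the model returns the mask.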

So much for the first part on 2D images... Now, what are 3D images?

3D Medical Image Segmentation: CT Scans & MRIs

Now come 3D images! For this part, I'll cover the two main use cases (CT scans and MRIs), then discuss the algorithms for both together.

CT Scans: Use Cases & Algorithms

Use Cases for CT Scans & 3D Representation

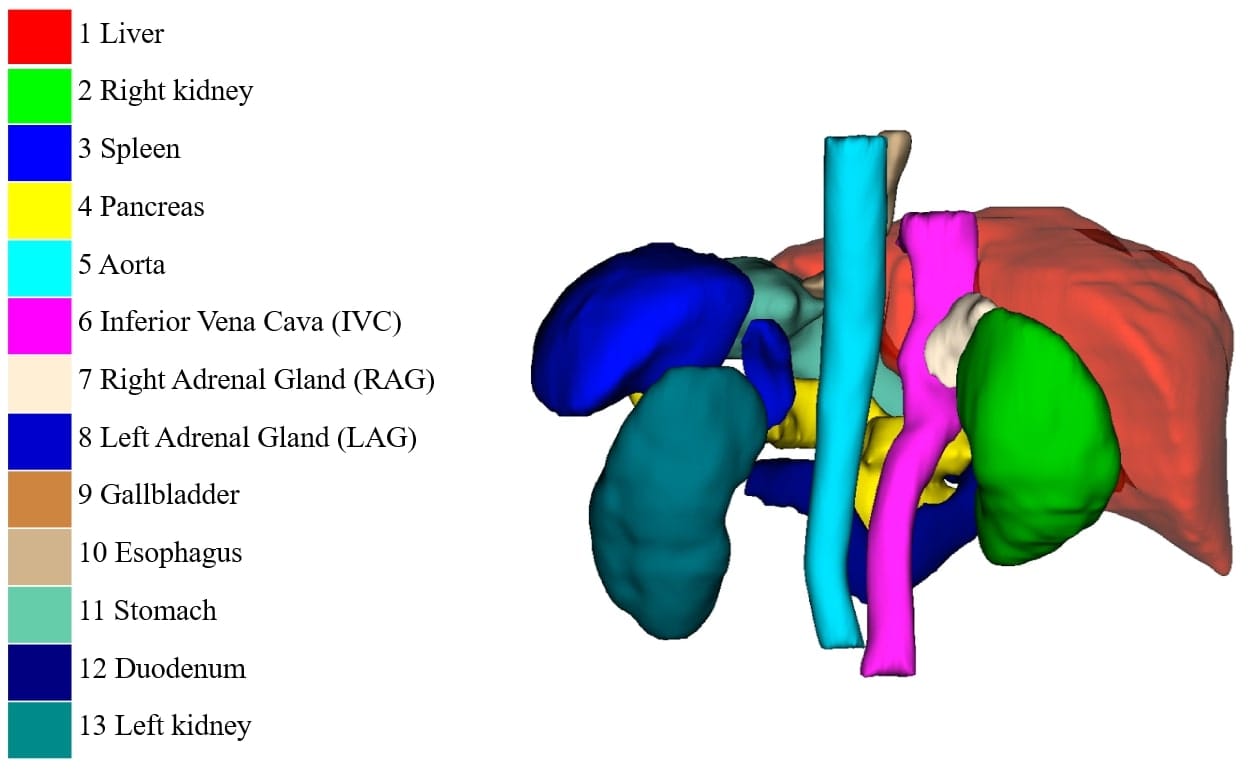

In the FLARE 2022 dataset (Fast and Low-resource semi-supervised Abdominal oRgan sEgmentation), we get access to a few hundred labeled and unlabeled cases with liver, kidney, spleen, or pancreas diseases as well as examples of uterine corpus endometrial, urothelial bladder, stomach, sarcomas, or ovarian diseases.

Hey, relax. I'm just scaring you. I had no clue what that meant either, except that:

These are CT scans (Computed Tomography scans). A CT scan uses X-rays to create detailed cross-sectional (slice-by-slice) images of the inside of the body. CT scans are more detailed than traditional X-rays because the scanner takes many X-ray measurements from different angles, and a computer combines them into slices that stack up into a 3D image.
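One practical detail before feeding CT slices to a 2D model: CT values are stored in Hounsfield units (HU), where air is around -1000 and water is 0, while 2D models like MedSAM expect ordinary 8-bit images. A common fix is "windowing": clip the intensities to a range of interest and rescale to 0-255. The sketch below assumes a typical soft-tissue window (level 40 HU, width 400 HU); the function names are hypothetical, and the right window depends on the organ you care about.

```python
import numpy as np

def window_ct(slice_hu, level=40.0, width=400.0):
    """Clip a CT slice (Hounsfield units) to a display window and rescale it to uint8 [0, 255]."""
    low, high = level - width / 2, level + width / 2     # default window: [-160, 240] HU
    clipped = np.clip(slice_hu, low, high)
    return np.round((clipped - low) / (high - low) * 255).astype(np.uint8)

def to_rgb(gray):
    """Repeat a (H, W) grayscale image into (H, W, 3), the layout most 2D RGB models expect."""
    return np.repeat(gray[..., None], 3, axis=-1)

slice_hu = np.array([[-1000.0, -160.0], [240.0, 1500.0]])  # air, window floor, window ceiling, dense bone
print(window_ct(slice_hu).tolist())                         # [[0, 0], [255, 255]]
```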

So what does this "3D" data look like? Point clouds? Meshes? Not exactly. As I said, a CT scan is a stack of 2D slices (a grid of voxels). So your input isn't (512, 512, 3), like an RGB image, but something like (512, 512, 129): 129 grayscale slices of 512 × 512 pixels. You can apply image segmentation to each of these 2D slices:
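In code, that slice-by-slice approach is just a loop over one axis of the volume. Here's a minimal sketch; `threshold_segmenter` is a hypothetical placeholder for a real 2D model such as MedSAM, and the slice axis (the last one here) is an assumption that depends on how your scan was loaded. Note that each slice is segmented independently, with no knowledge of its neighbours, which is one motivation for native 3D models.

```python
import numpy as np

def segment_volume_slicewise(volume, segment_slice, axis=2):
    """Run a 2D segmentation function on every slice of a 3D volume and restack the masks.

    volume: 3D array, e.g. (512, 512, 129) for 129 slices of 512x512.
    segment_slice: any function mapping a 2D slice to a 2D mask of the same shape.
    """
    masks = [segment_slice(np.take(volume, i, axis=axis)) for i in range(volume.shape[axis])]
    return np.stack(masks, axis=axis)

def threshold_segmenter(slice_2d, threshold=0.5):
    """Hypothetical stand-in for a real 2D model such as MedSAM."""
    return (slice_2d > threshold).astype(np.uint8)

rng = np.random.default_rng(0)
ct = rng.random((64, 64, 12))              # small fake volume: 12 slices of 64x64
mask_3d = segment_volume_slicewise(ct, threshold_segmenter)
print(mask_3d.shape)                       # (64, 64, 12)
```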

In this example, I used MedSAM to process individual 2D images. If you do it on the entire 3D CT Scan, you get something like this:

Once you have these per-slice masks, you can feed them into software that reconstructs the scan in 3D:

From there, people go absolutely nuts and even try to make it into a point cloud (I'm not sure why, but this is cool, shoutout to Beau Seymour's video).
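Once the per-slice masks are stacked into a 3D mask, turning them into physical quantities only needs the voxel spacing (in millimetres) stored in the scan's header. This sketch uses hypothetical helpers to compute an organ's volume and a simple point cloud of voxel centres, assuming axis-aligned voxels and ignoring the scan's origin and orientation. Surface meshes for 3D viewers are usually built with an algorithm such as marching cubes instead.

```python
import numpy as np

def mask_volume_ml(mask, spacing_mm):
    """Physical volume of a 3D binary mask in millilitres (1 mL = 1000 mm^3)."""
    voxel_mm3 = float(np.prod(spacing_mm))
    return mask.astype(bool).sum() * voxel_mm3 / 1000.0

def mask_to_point_cloud(mask, spacing_mm):
    """(N, 3) array of foreground voxel positions in millimetres (voxel index * spacing)."""
    return np.argwhere(mask) * np.asarray(spacing_mm, dtype=float)

spacing = (0.8, 0.8, 2.5)                  # assumed voxel size in mm per array axis; last axis = slices
organ = np.zeros((50, 50, 20), dtype=np.uint8)
organ[10:20, 10:20, 5:15] = 1              # a 10x10x10-voxel "organ"
print(mask_volume_ml(organ, spacing))      # about 1.6 mL (1000 voxels * 1.6 mm^3)
points = mask_to_point_cloud(organ, spacing)
print(points.shape)                        # (1000, 3)
```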

MRI Scans: Advanced Medical Image Computing

Magnetic Resonance Imaging (MRI) Scans are another powerful tool in medical imaging. Unlike CT scans, MRIs use powerful magnets and radio waves to create detailed images of organs and tissues within the body. This technique is particularly great for soft tissue contrast, making it ideal for brain, spinal cord, and joint imaging. By leveraging medical image segmentation, MRI scans can aid in the precise identification of tumors, neurological disorders, and musculoskeletal issues.
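One processing difference with CT: MRI intensities have no fixed physical scale like Hounsfield units, and they vary between scanners and acquisition settings. A common preprocessing step (nnU-Net, for instance, applies it to non-CT images) is per-volume z-score normalization. Here's a minimal sketch; the optional foreground mask (to ignore background air) and the epsilon are my own choices.

```python
import numpy as np

def zscore_normalize(volume, foreground=None, eps=1e-8):
    """Per-volume z-score normalization: subtract the mean, divide by the standard deviation.

    If a foreground mask is given, the statistics are computed inside it only.
    """
    volume = volume.astype(np.float32)
    values = volume[foreground.astype(bool)] if foreground is not None else volume
    return (volume - values.mean()) / (values.std() + eps)

rng = np.random.default_rng(0)
mri = rng.normal(loc=300.0, scale=50.0, size=(32, 32, 16))  # fake scan with an arbitrary intensity scale
norm = zscore_normalize(mri)
print(round(float(norm.mean()), 3), round(float(norm.std()), 3))  # close to 0 and 1
```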

Here is an example of MRI scan and its segmentation task:

So now, let's see how to process that...

Algorithms in the 3D Medical Image Segmentation Domain

We already discussed SAM (Segment Anything) and how it can work on individual slices. The reality is that medical image segmentation requires a lot of domain knowledge, so a specialized model will often give better performance. Today, in AI, we have two types of models:

- Foundation models, which are very general and trained on broad data

- Specific models, trained on labeled data, which can only process the kinds of images they've been trained on

I'd like to show you one of each: TotalSegmentator (specific) and VISTA-3D (foundation).

TotalSegmentator: A specific model for 2D and 3D Segmentation

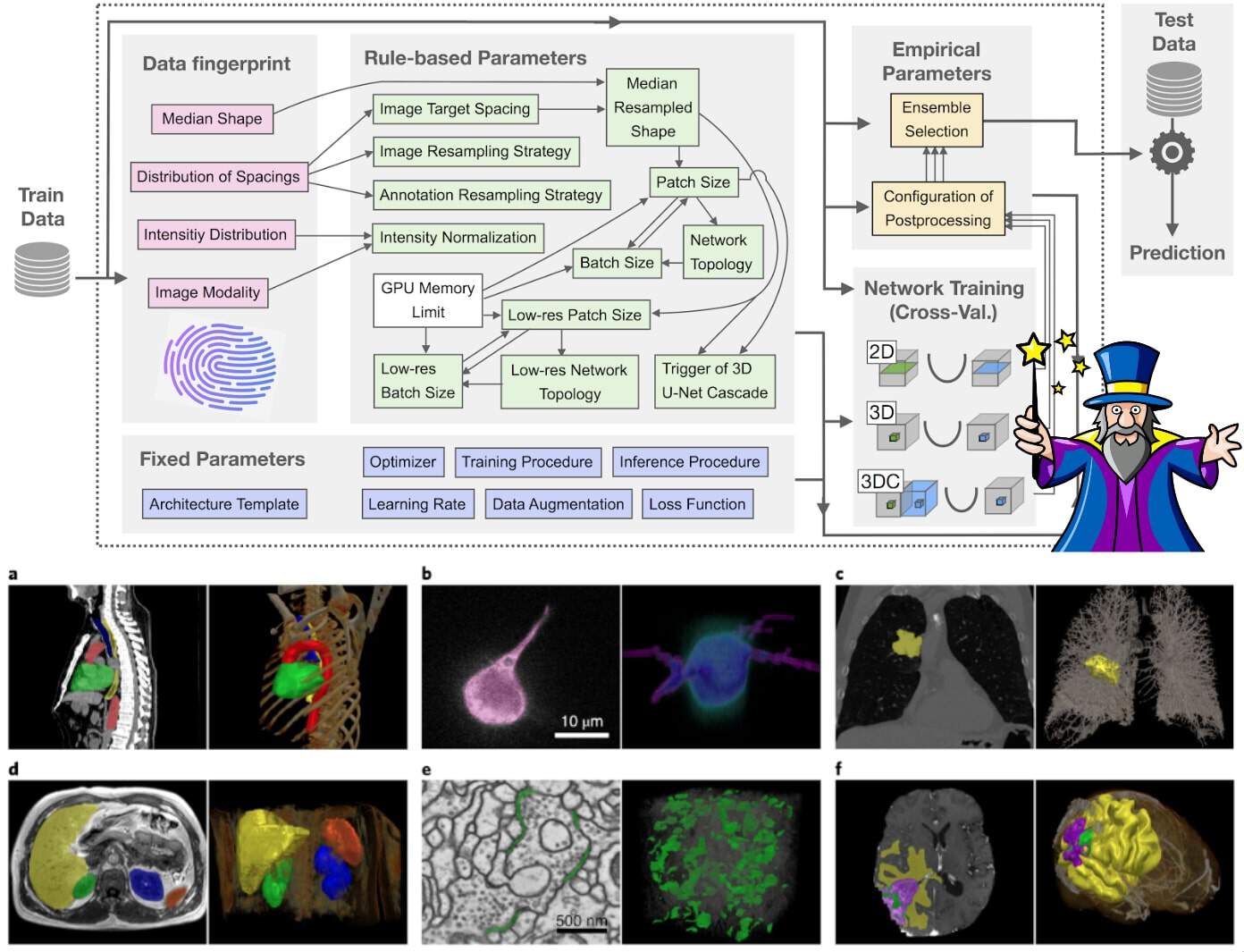

TotalSegmentator is perhaps one of the most used and well-known "frameworks" for segmenting both 2D and 3D data. Rather than being a single machine learning model, it's a complete tool that automatically labels the anatomical structures in a scan.

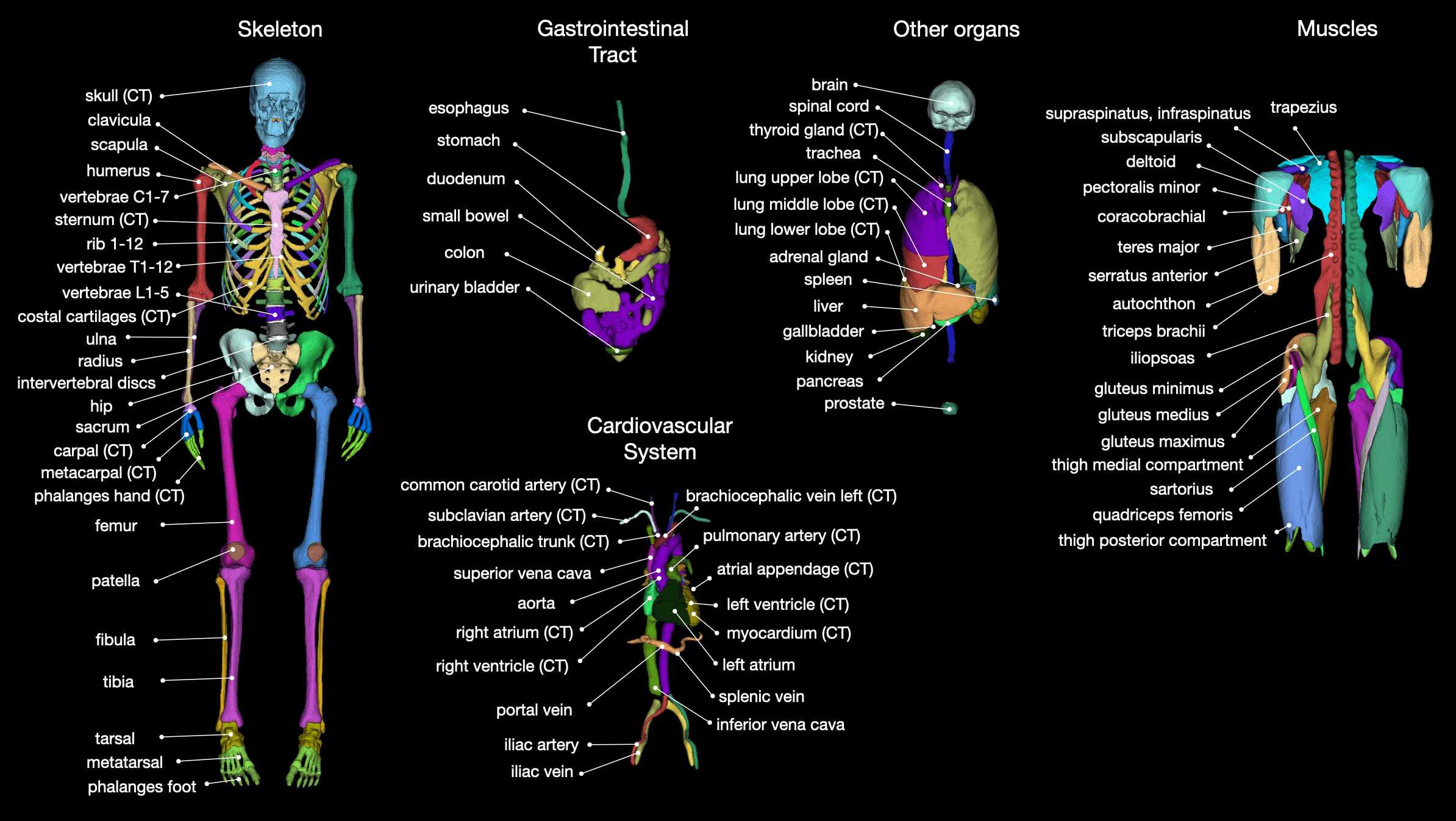

The number of classes for CT and MRI data it can segment is gigantic:

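And the model is based on nnU-Net, a self-configuring framework that keeps the standard U-Net architecture but automatically adapts the preprocessing, network configuration (2D or 3D), and training setup to each new dataset, whatever the imaging modality.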

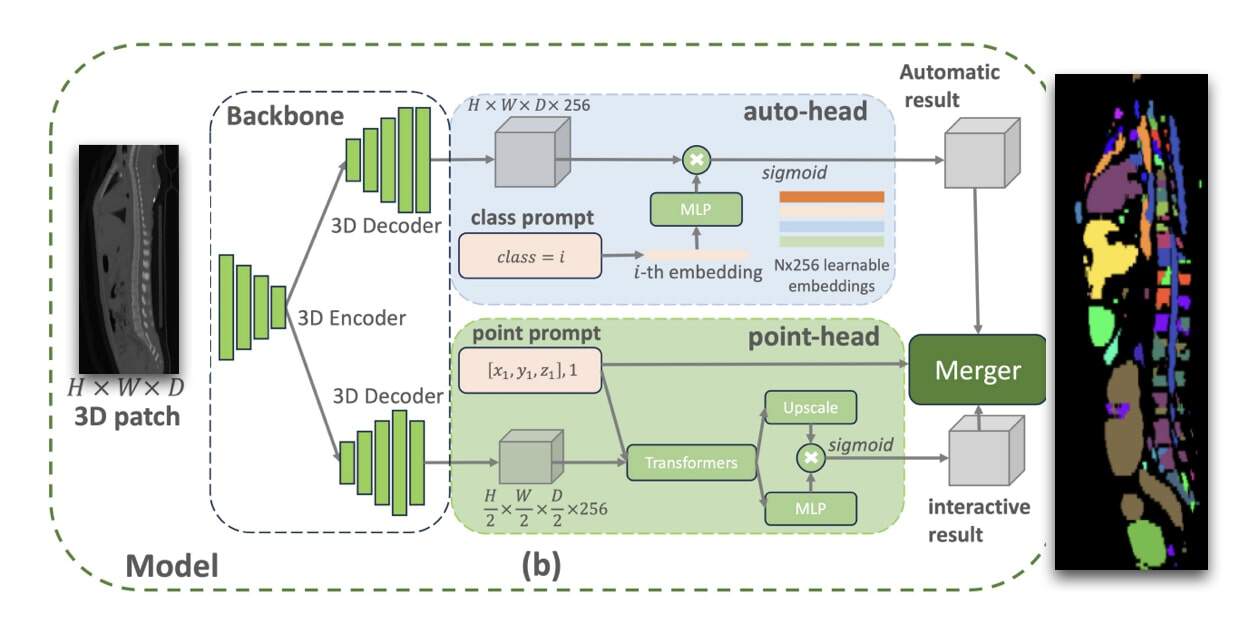

VISTA-3D: Foundation Model for 3D Medical Image Segmentation

VISTA-3D is a 2024 "foundation model" from Nvidia that works directly on 3D patches. Although it's called a "foundation" model, it's highly specialized for medical image segmentation. Here, we are PURELY in 3D deep learning.
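Working on 3D patches means a full CT volume is typically processed as a grid of overlapping sub-volumes, with predictions averaged where patches overlap. Here's a minimal NumPy sketch of that sliding-window scheme; `fake_model` is a hypothetical stand-in for a 3D network such as VISTA-3D, and the patch size and 50% overlap are arbitrary choices. In practice, libraries such as MONAI ship this as `sliding_window_inference`.

```python
import numpy as np

def window_starts(size, patch, stride):
    """Start indices so patches of length `patch` cover [0, size), the last one flush with the end."""
    starts = list(range(0, size - patch + 1, stride))
    if starts[-1] + patch < size:
        starts.append(size - patch)
    return starts

def sliding_window_3d(volume, predict, patch=(32, 32, 32), overlap=0.5):
    """Apply `predict` to overlapping 3D patches and average the outputs where they overlap."""
    if any(s < p for s, p in zip(volume.shape, patch)):
        raise ValueError("volume must be at least as large as the patch in every dimension")
    strides = [max(1, int(p * (1 - overlap))) for p in patch]
    out = np.zeros(volume.shape, dtype=float)
    counts = np.zeros(volume.shape, dtype=float)
    grid = [window_starts(s, p, st) for s, p, st in zip(volume.shape, patch, strides)]
    for z in grid[0]:
        for y in grid[1]:
            for x in grid[2]:
                region = (slice(z, z + patch[0]), slice(y, y + patch[1]), slice(x, x + patch[2]))
                out[region] += predict(volume[region])
                counts[region] += 1
    return out / counts

rng = np.random.default_rng(0)
ct = rng.random((40, 50, 30))
fake_model = lambda p: 2.0 * p             # hypothetical stand-in for a 3D segmentation model
probs = sliding_window_3d(ct, fake_model, patch=(16, 16, 16))
print(np.allclose(probs, 2.0 * ct))        # True: every voxel covered, overlaps averaged
```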

So we've seen a lot:

- 2D segmentation can be done with models like UNet, UNet++, etc. (specific), or SAM (foundation)

- 3D segmentation can be done with models like nnU-Net/TotalSegmentator (specific), or VISTA-3D and SAM applied slice by slice (foundation)

Let's see examples now...

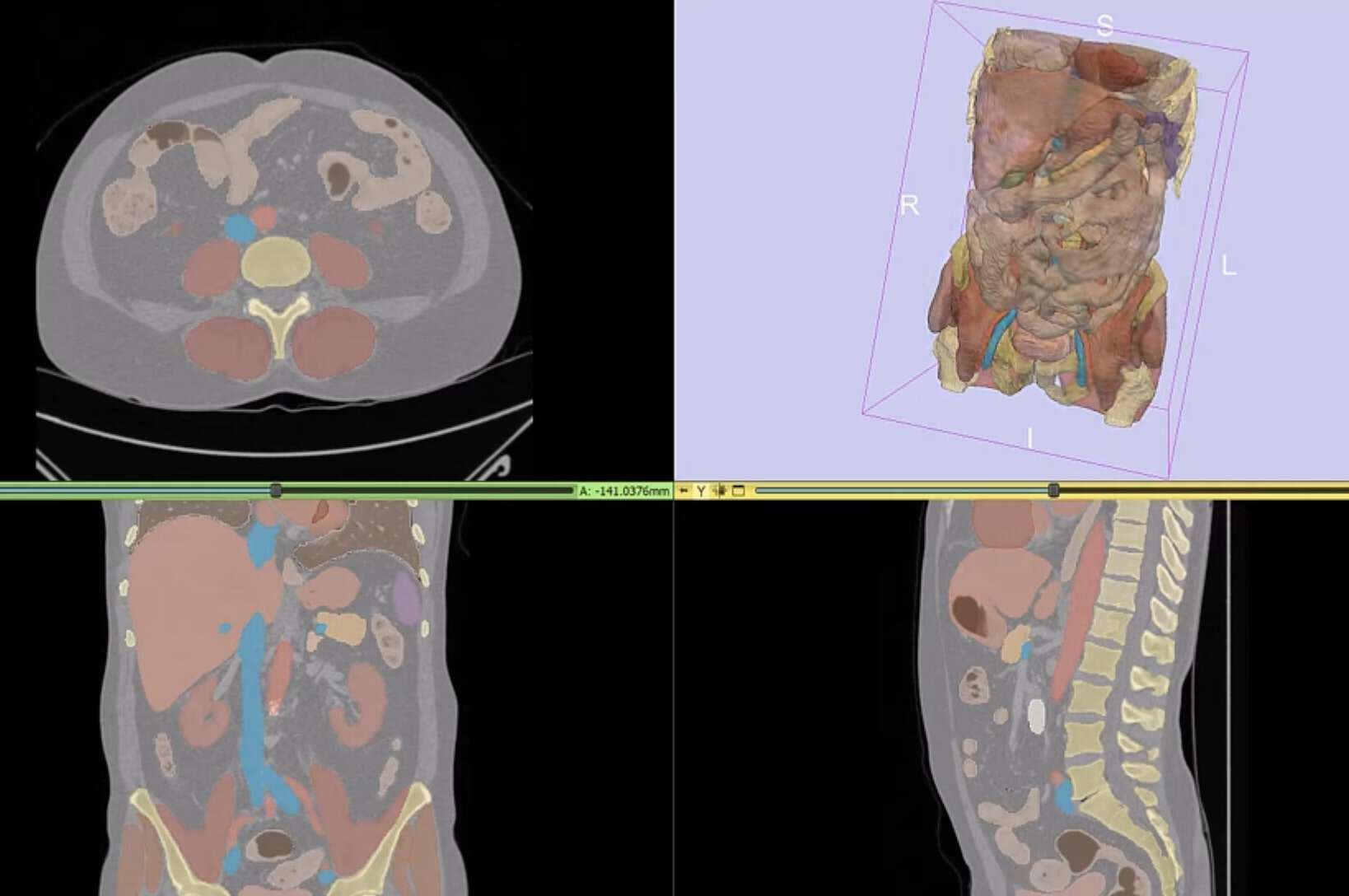

Example 1: CT Scan Segmentation with Vista-3D

In this platform from Nvidia, I am able to select a CT Scan and call Vista-3D to process it.

Notice how we can select an abdominal scan, then pick all the organs we want to segment. Finally, we can view the scan from three different "angles" (the axial, coronal, and sagittal planes) and process those too!

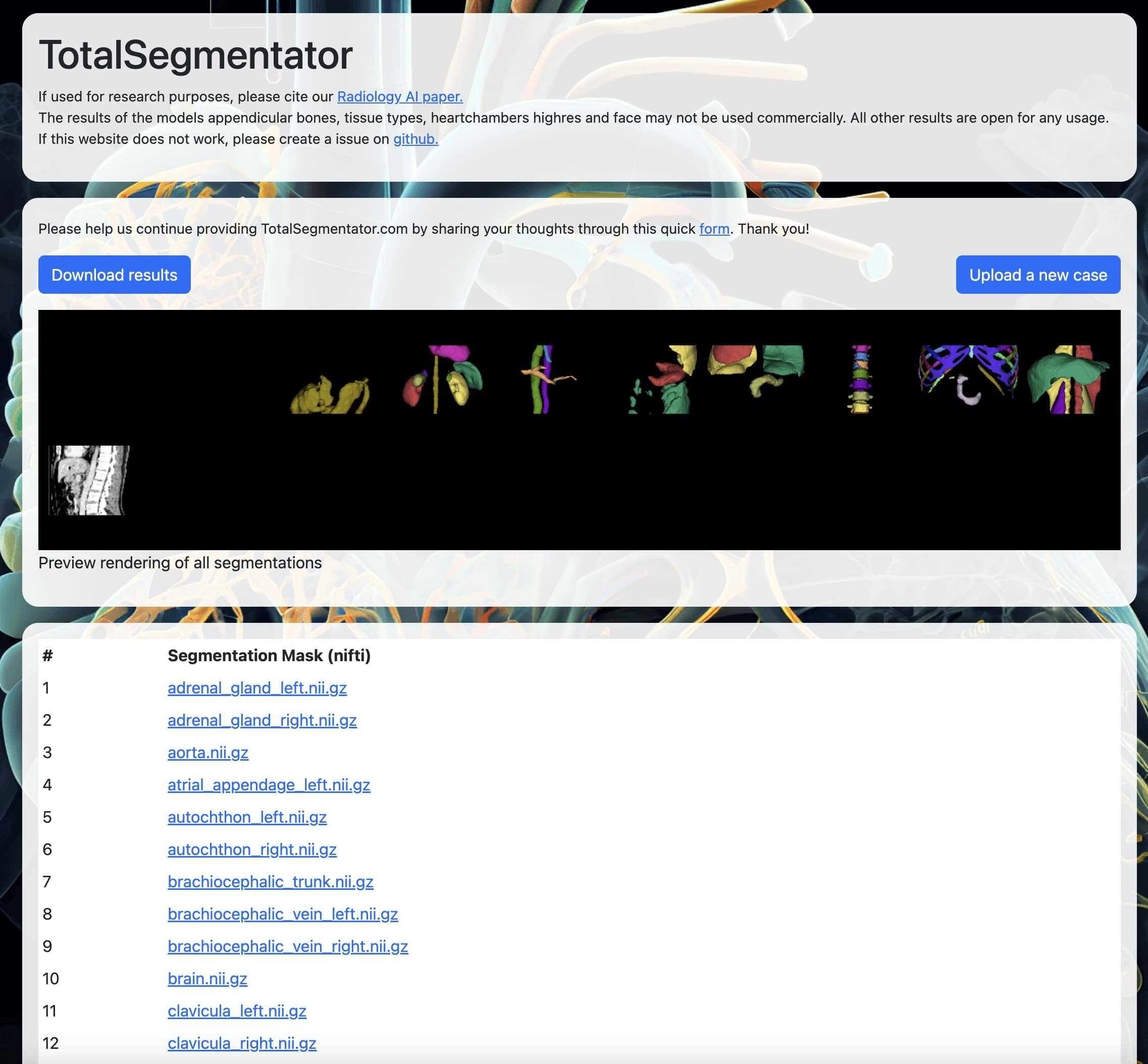

Example 2: CT Scan Segmentation with TotalSegmentator

On totalsegmentator.com, we can upload images and ask for a complete segmentation. Here, I am going to upload a scan from the FLARE 2022 dataset I mentioned above. The platform returns a large number of organ masks in the '.nii.gz' format (compressed NIfTI, a standard file format for 3D medical images):
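TotalSegmentator's output typically contains one binary mask file per structure (for example, `liver.nii.gz`), and each file can be loaded as a NumPy array with nibabel (`nib.load(path).get_fdata()`). To visualize or query them together, it helps to merge them into a single label map. The sketch below uses tiny synthetic masks in place of loaded files, and `merge_masks` is a hypothetical helper, not part of TotalSegmentator.

```python
import numpy as np

def merge_masks(masks):
    """Merge {name: binary 3D mask} into one integer label map (0 = background) and a legend.

    Labels follow alphabetical order of names; later masks overwrite earlier ones where they overlap.
    """
    names = sorted(masks)
    label_map = np.zeros(masks[names[0]].shape, dtype=np.uint16)
    legend = {0: "background"}
    for label, name in enumerate(names, start=1):
        label_map[masks[name].astype(bool)] = label
        legend[label] = name
    return label_map, legend

# Tiny synthetic masks standing in for files such as liver.nii.gz and spleen.nii.gz
liver = np.zeros((8, 8, 4), dtype=np.uint8)
liver[:4, :, :] = 1
spleen = np.zeros((8, 8, 4), dtype=np.uint8)
spleen[6:, 6:, :] = 1
label_map, legend = merge_masks({"liver": liver, "spleen": spleen})
print(legend)                                    # {0: 'background', 1: 'liver', 2: 'spleen'}
print(np.bincount(label_map.ravel()).tolist())   # voxels per label: [112, 128, 16]
```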

I can visualize some of these, and see what the output is like:

Alright! So this is our second example, and both have playable demos! Let's now do a summary...

Summary & Next Steps

- Medical image segmentation can help reduce human errors by assisting in the analysis of 2D and 3D medical images like X-rays, CT scans, and MRIs.

- 2D medical image segmentation tasks include X-Rays, dermoscopy (skin lesion analysis), endoscopy, mammography segmentation (breast), and more...

- UNet and its variants are popular models for 2D medical image analysis, using CNN or Transformer backbones. Foundation models like SAM (Segment Anything Model) can also be fine-tuned on medical images, as with MedSAM.

- 3D medical image segmentation involves CT (computed tomography) and MRI (magnetic resonance imaging) scans. They're called 3D images because they're stacks of 2D slices taken at successive positions through the body.

- MedSAM can process the 2D slices of 3D scans, segmenting each slice individually. We can then feed the results into software that reconstructs them into a complete 3D view.

- For native 3D processing, TotalSegmentator (specific) and VISTA-3D (foundation) are solid solutions.

Receive my Daily Emails, and get continuous training on Computer Vision & Autonomous Tech. Each day, you'll receive one new email sharing some information from the field, whether it's technical content, a story from the inside, or tips to break into this world; we've got you.

You can receive the emails here.