Computer Vision at Tesla

Tesla's Autopilot is widely considered one of the most advanced in the world. If Comma.ai is the Android, Tesla is definitely the Apple of self-driving cars. Recently, Tesla released Tesla Vision, its new system equipped only with cameras... making it one of the few companies in the world not to use radar!

This is not the only place where Tesla goes against the crowd: its entire strategy is based on its fleet and on selling products, while most of its competitors sell autonomous delivery and transportation services.

Note that I have also written a few other articles about Tesla:

- Tesla vs Waymo: Why they aren't competitors

- Tesla's HydraNets: How Tesla's Autopilot works

- A Look at Tesla's Occupancy Networks: A dive in their new algorithm

In this article, I will explain how the Tesla Autopilot works with just cameras, and we'll study its neural net in depth.

You will learn a lot about how a Computer Vision system is implemented in a self-driving car company like Tesla.

Before we start, here's a short summary of what we'll learn:

- Introduction to Tesla Autopilot

- Tesla Vision - Driving with 8 cameras

- HydraNets - Tesla's Insane Neural Networks

- How Tesla Trains its neural networks (with PyTorch)

- Continuous Improvement & Fleet Leverage

1. Introduction to Tesla Autopilot

According to many self-driving car experts, Tesla is leading the self-driving car race! Its team of 300 "Jedi Engineers", as Elon Musk calls them, is solving some of the most complicated problems, such as lane keeping, lane changes, and cruise control. They also handle additional tasks such as driving in a parking lot, Smart Summon, and city driving.

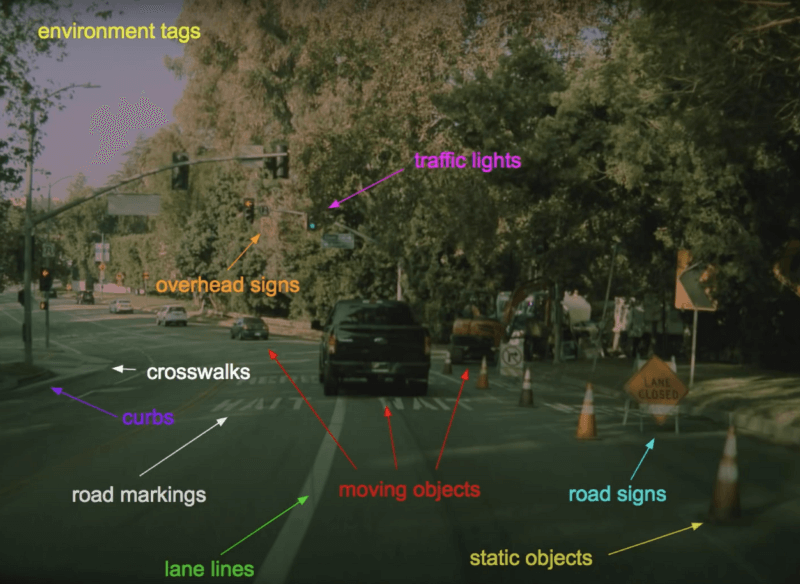

Tesla Vision has to deal with all these tasks

Tesla’s tasks are well known today. From lane detection, arguably the most important feature of autonomous cars, to pedestrian tracking, they must cover everything and anticipate every scenario. For that, they use a perception system built only on cameras, called "Tesla Vision".

2. Tesla Vision - Driving with 8 cameras

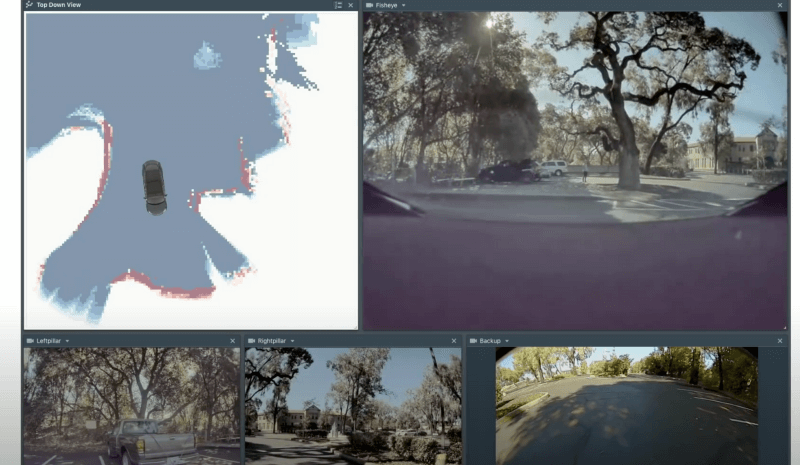

This is the view from the 8 cameras of a Tesla Model S.

In 2021, Tesla switched to a vision-only system, ditching radar. I have written a complete article on the transition to vision only, and on the updates made to their neural networks, here.

In this article, we'll go back to the idea of HydraNets, Tesla's neural networks, and we'll see how these models are trained at the company.

3. HydraNets - Tesla's Insane Neural Networks

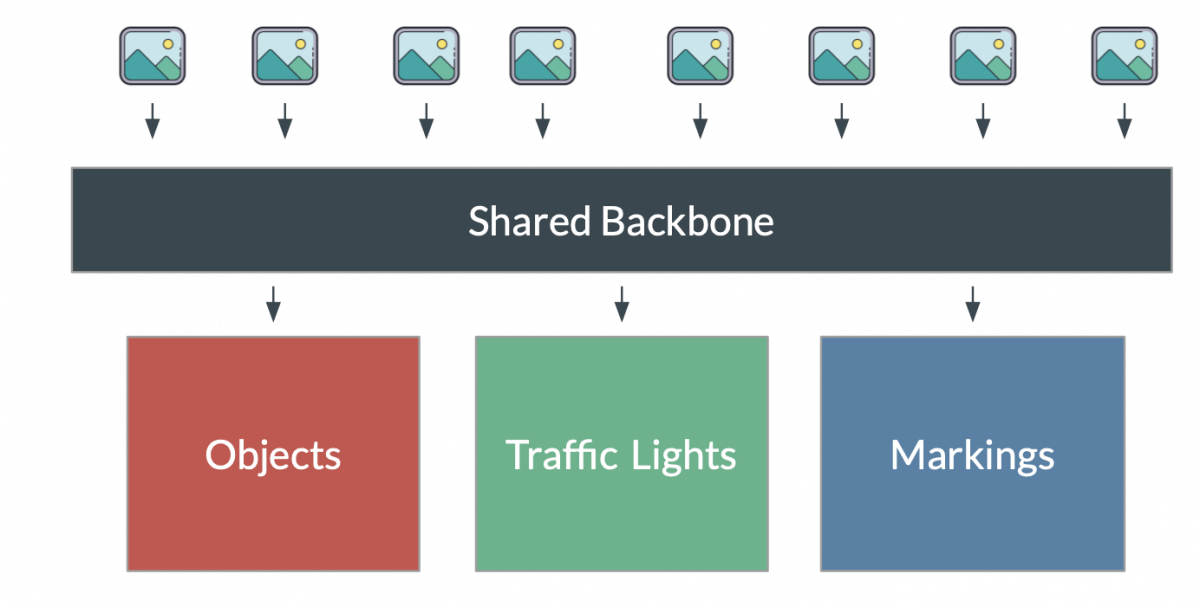

What is a HydraNet?

Between the vehicles, the lane lines, the road curbs, the crosswalks, and all the other specific environmental variables, Tesla has a lot of work to do. In fact, they must run at least 50 neural networks simultaneously! Running that many separate networks at once is just not feasible on standard computers. To solve this, Tesla has created its own computer and its own neural network architecture, called a HydraNet.

Similar to transfer learning, where you have a common block and train specific blocks for specific related tasks, HydraNets have a backbone trained on all objects and heads trained on specific tasks. This improves inference speed, since the expensive backbone features are computed once and shared by every head, as well as training speed.

The neural networks are trained using PyTorch, a deep learning framework you might be familiar with.
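To make the backbone/heads split concrete, here is a minimal sketch in plain Python. It is a toy stand-in, not Tesla's code: the class `ToyHydraNet`, the three head names, and the arithmetic "features" are all hypothetical, and a real HydraNet would be a deep PyTorch model. What it does show is the key property: the shared backbone runs once per image, and every head reuses its output.

```python
from typing import Callable, Dict, List

Features = List[float]


class ToyHydraNet:
    """Toy illustration: one shared backbone, several task-specific heads.

    Hypothetical names throughout; real HydraNets are deep neural networks.
    """

    def __init__(self, heads: Dict[str, Callable[[Features], float]]):
        self.heads = heads
        self.backbone_calls = 0  # counts how often the expensive part runs

    def backbone(self, image: List[float]) -> Features:
        # Stand-in for the shared feature extractor (e.g. a CNN).
        self.backbone_calls += 1
        mean = sum(image) / len(image)
        return [mean, max(image), min(image)]

    def forward(self, image: List[float]) -> Dict[str, float]:
        features = self.backbone(image)  # computed once per image...
        # ...and reused by every head.
        return {name: head(features) for name, head in self.heads.items()}


heads = {
    "lane_lines": lambda f: 0.5 * f[0] + 0.1 * f[1],
    "vehicles": lambda f: f[1] - f[2],
    "traffic_lights": lambda f: 2.0 * f[2],
}
net = ToyHydraNet(heads)
outputs = net.forward([0.2, 0.8, 0.5])
print(sorted(outputs))     # ['lane_lines', 'traffic_lights', 'vehicles']
print(net.backbone_calls)  # 1
```

With three fully separate networks, the backbone work would be repeated three times per image; here `backbone_calls` stays at 1 no matter how many heads are attached.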

Tesla's Bird's Eye View

Something else Tesla uses is Bird’s Eye View (BEV), a top-down view of the scene. Bird’s Eye View can help estimate distances and provides a much better, more realistic understanding of the environment. It can help with road curb detection, Smart Summon, and other features.
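Tesla's Bird's Eye View comes out of its neural networks, but the geometry behind the idea can be shown with the classic, non-learned approach: inverse perspective mapping. The sketch below assumes a flat road and a pinhole camera whose optical axis is parallel to the ground; the function name and the camera parameters in the example are hypothetical.

```python
from typing import Tuple


def pixel_to_ground(u: float, v: float, f: float, cx: float, cy: float,
                    cam_height: float) -> Tuple[float, float]:
    """Map an image pixel (u, v) to road coordinates (lateral_x, forward_z).

    Inverse perspective mapping under two assumptions:
    - the road is flat;
    - the pinhole camera's optical axis is parallel to the road,
      cam_height metres above it. f, cx, cy are in pixels.
    """
    if v <= cy:
        # Rows at or above the principal point are on/above the horizon.
        raise ValueError("pixel is at or above the horizon, not on the road")
    forward_z = f * cam_height / (v - cy)  # similar triangles
    lateral_x = (u - cx) * forward_z / f   # back-project the column offset
    return lateral_x, forward_z


# Hypothetical camera: f = 1000 px, principal point (640, 360), 1.5 m high.
print(pixel_to_ground(740, 460, 1000, 640, 360, 1.5))  # (1.5, 15.0)
```

Pixels close to the horizon map to very large distances, so a small error in the image becomes a big error in range; reasoning in a top-down metric view makes those distances explicit.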

Some tasks run on multiple cameras. For example, depth estimation is something we generally do with stereo cameras: having 2 cameras helps estimate distances better, because the horizontal shift (disparity) of a point between the two images shrinks as the point gets farther away. If you'd like to learn more, I have an entire course on 3D Computer Vision and 3D Reconstruction. In the meantime, here's their neural net for depth prediction.

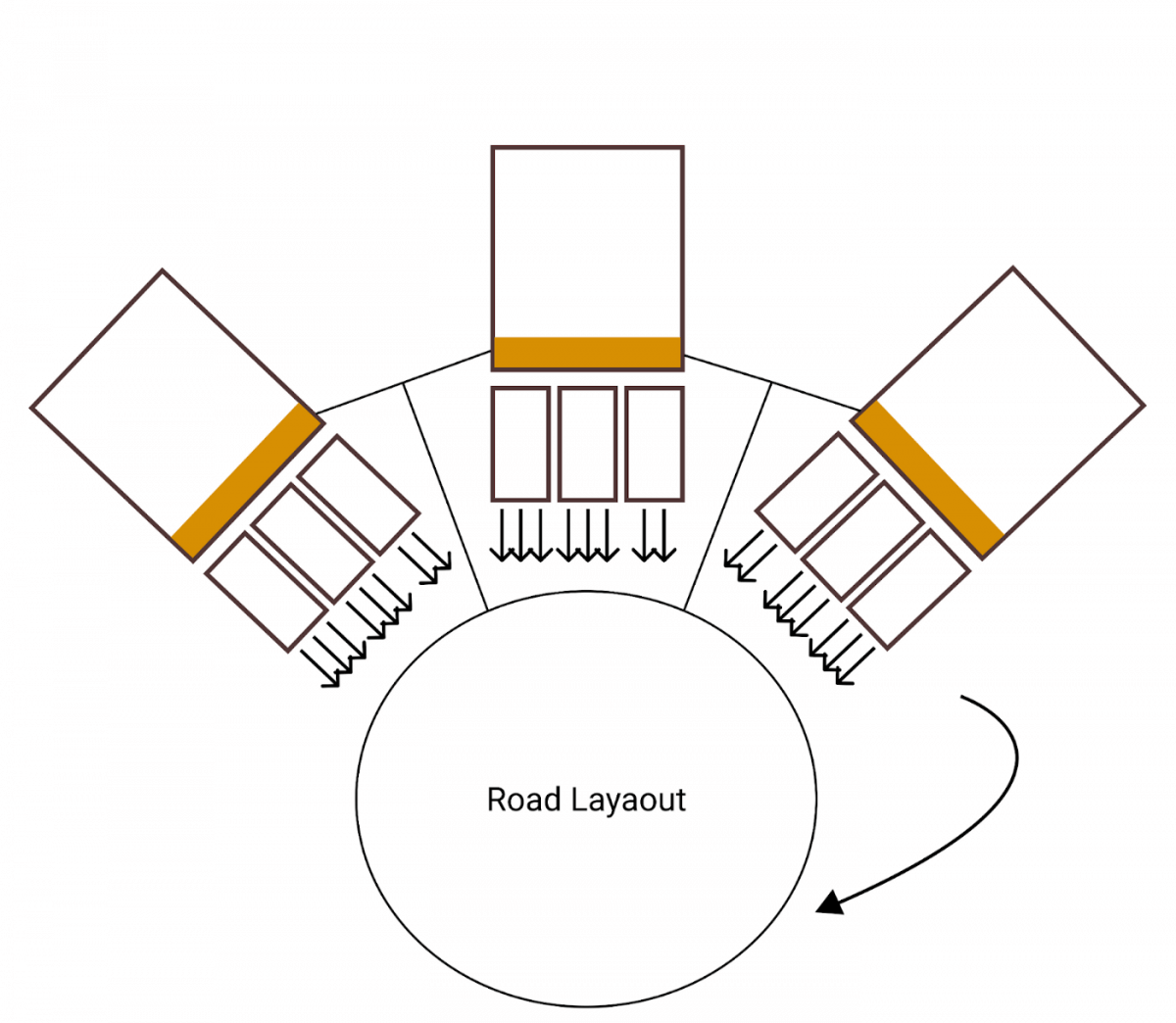
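The reason two cameras help is triangulation: for a rectified stereo pair with focal length f (in pixels) and baseline B (the distance between the cameras), a point with a disparity of d pixels lies at depth Z = f·B/d. The sketch below is that textbook formula, not Tesla's depth network (which, as the figure shows, is a neural net); the example numbers are made up.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is at infinity (or matching failed).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: f = 1000 px, cameras 0.5 m apart.
print(stereo_depth(1000, 0.5, 10))  # 50.0
print(stereo_depth(1000, 0.5, 20))  # 25.0
```

Doubling the disparity halves the depth. Since disparity gets small for distant objects, a one-pixel matching error costs far more accuracy far away than up close.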

Tesla also has recurrent tasks such as road layout estimation. The idea is similar: multiple neural networks run separately, and another neural network makes the connection between them.

Road Layout Estimation

Optionally, this neural network can be recurrent so that it takes time into account. This type of architecture has been used a lot for road layout estimation, exploiting time sequences and continuity.
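The two ideas above, fusing the outputs of several networks and adding a recurrence over time, can be sketched in a few lines of plain Python. This is a toy under loud assumptions: averaging stands in for the learned fusion network, a one-weight `tanh` cell stands in for a real recurrent layer (such as a GRU or LSTM), and the weights `a` and `b` and all feature values are invented.

```python
import math
from typing import List


def fuse(camera_features: List[List[float]]) -> List[float]:
    # Stand-in for the fusion network: average each feature across cameras.
    n = len(camera_features)
    return [sum(vals) / n for vals in zip(*camera_features)]


def recurrent_step(state: List[float], x: List[float],
                   a: float = 0.8, b: float = 0.5) -> List[float]:
    # Simple per-feature recurrent cell: h_t = tanh(a * h_{t-1} + b * x_t)
    return [math.tanh(a * h + b * xi) for h, xi in zip(state, x)]


# Each frame holds one feature vector per camera (two cameras here).
frames = [
    [[1.0, 0.2], [0.8, 0.4]],  # t=0: both cameras see the lane
    [[1.0, 0.2], [1.0, 0.2]],  # t=1
    [[0.0, 0.0], [0.0, 0.0]],  # t=2: lane briefly occluded in every camera
]
state = [0.0, 0.0]
for cams in frames:
    state = recurrent_step(state, fuse(cams))
print(state[0] > 0)  # True
```

At the last step every camera reports zero for the lane feature, yet the state stays positive: the recurrence carries over what was seen in earlier frames, which is the continuity the paragraph refers to.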

Something cool about the HydraNet is that it can be set up to use only what each task needs: for example, the lane line detection algorithm won't necessarily use the rear or side cameras.
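One way to picture "using only what's needed" is a routing table from each head to the cameras it consumes. The camera names and the assignment below are hypothetical, chosen only to mirror the lane-line example.

```python
from typing import Dict, List, Set

# Hypothetical names for the 8 cameras.
ALL_CAMERAS: List[str] = [
    "front_main", "front_wide", "front_narrow",
    "left_pillar", "right_pillar",
    "left_repeater", "right_repeater", "rear",
]

# Hypothetical head-to-camera assignment, for illustration only.
HEAD_CAMERAS: Dict[str, Set[str]] = {
    "lane_lines": {"front_main", "front_wide", "front_narrow"},
    "rear_traffic": {"rear", "left_repeater", "right_repeater"},
    "parking": set(ALL_CAMERAS),  # low-speed maneuvers look everywhere
}


def route(frames: Dict[str, bytes], head: str) -> Dict[str, bytes]:
    """Keep only the camera frames that the given head actually needs."""
    wanted = HEAD_CAMERAS[head]
    return {cam: img for cam, img in frames.items() if cam in wanted}


frames = {cam: b"<jpeg bytes>" for cam in ALL_CAMERAS}
print(sorted(route(frames, "lane_lines")))
# ['front_main', 'front_narrow', 'front_wide']
```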

To process all of this, Tesla has built a computer they call the Full Self-Driving (FSD) computer. I have written an article about Tesla's HydraNets in 2021, their computers, and the switch from radar to vision only; you can find it here.

4. How Tesla Trains its Neural Networks (with PyTorch)

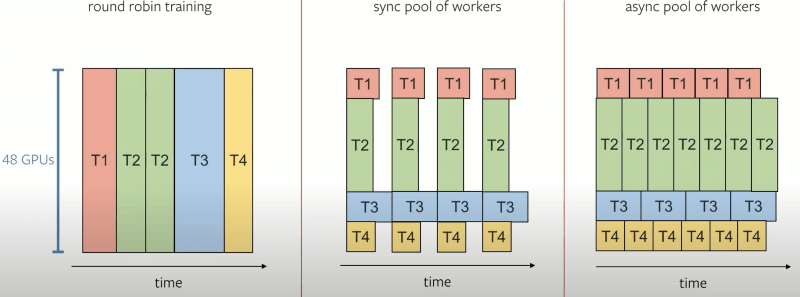

How do you train a HydraNet? According to Tesla's team, training a HydraNet with 48 heads takes around 70,000 GPU hours! On a single GPU, that's almost 8 years. 👵🏽

In order to solve this, Tesla is changing the training mode from a “round robin” to a “pool of workers”.

Here’s the idea:

- On the left: the traditional, long, 70,000-hour option.

- In the middle and on the right: the pool of workers.

What you can get from this picture is that training is parallelized, so all heads are trained at the same time (instead of one after the other). This drastically speeds up training.
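The gain from parallel training can be estimated with a simplified model. The sketch below first checks the "almost 8 years" arithmetic, then compares training 48 heads one after another with greedily spreading them over a pool of workers (longest job first, to the least-loaded worker). This is an idealized assumption, not Tesla's scheduler: it treats heads as independent jobs of equal cost and ignores the synchronization a shared backbone needs. The function names and the 8-worker pool are hypothetical.

```python
import heapq
from typing import List

HOURS_PER_YEAR = 24 * 365
print(round(70_000 / HOURS_PER_YEAR, 2))  # 7.99, i.e. "almost 8 years"


def sequential_hours(head_hours: List[float]) -> float:
    """Train heads one after another: total time is the sum."""
    return sum(head_hours)


def pool_of_workers_hours(head_hours: List[float], n_workers: int) -> float:
    """Greedy longest-job-first scheduling; returns wall-clock hours."""
    loads = [0.0] * n_workers  # a list of equal values is already a valid heap
    for hours in sorted(head_hours, reverse=True):
        least_loaded = heapq.heappop(loads)
        heapq.heappush(loads, least_loaded + hours)
    return max(loads)


heads = [70_000 / 48] * 48  # assume 48 heads of equal cost
print(round(sequential_hours(heads)))          # 70000
print(round(pool_of_workers_hours(heads, 8)))  # 8750
```

In this idealized case, 8 workers cut the wall-clock time by a factor of 8; real speedups would likely be smaller because the heads share a backbone that must stay in sync.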

In a perfect world, you wouldn’t need the HydraNet architecture: you’d just use one neural network per image and per task… but today, that is impossible. Tesla must also collect and leverage its users' data. After all, they have thousands of cars driving out there; it would be stupid not to use that data to improve their models. Data from the fleet is collected, labeled, and used for training, similar to a process called active learning (find more about this here).
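Active learning is about choosing which data is worth labeling. A common strategy, uncertainty sampling, ranks unlabeled samples by how unsure the model is and sends the most uncertain ones to the labelers. The sketch below uses prediction entropy as the uncertainty score; this is one standard choice, not necessarily Tesla's criterion, and the frame IDs and probabilities are made up.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy of a class-probability vector (higher = less sure)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions: Dict[str, List[float]], k: int) -> List[str]:
    """Return the k frame IDs whose predictions are most uncertain."""
    ranked = sorted(predictions, key=lambda fid: entropy(predictions[fid]),
                    reverse=True)
    return ranked[:k]


predictions = {
    "frame_001": [0.98, 0.01, 0.01],  # confident
    "frame_002": [0.40, 0.35, 0.25],  # very uncertain
    "frame_003": [0.70, 0.20, 0.10],
}
print(select_for_labeling(predictions, 2))  # ['frame_002', 'frame_003']
```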

In the end, Tesla's Machine Learning pipeline is at the cutting edge, and it is run by over 300 "Jedi Engineers".

5. Continuous Improvement & Fleet Leverage

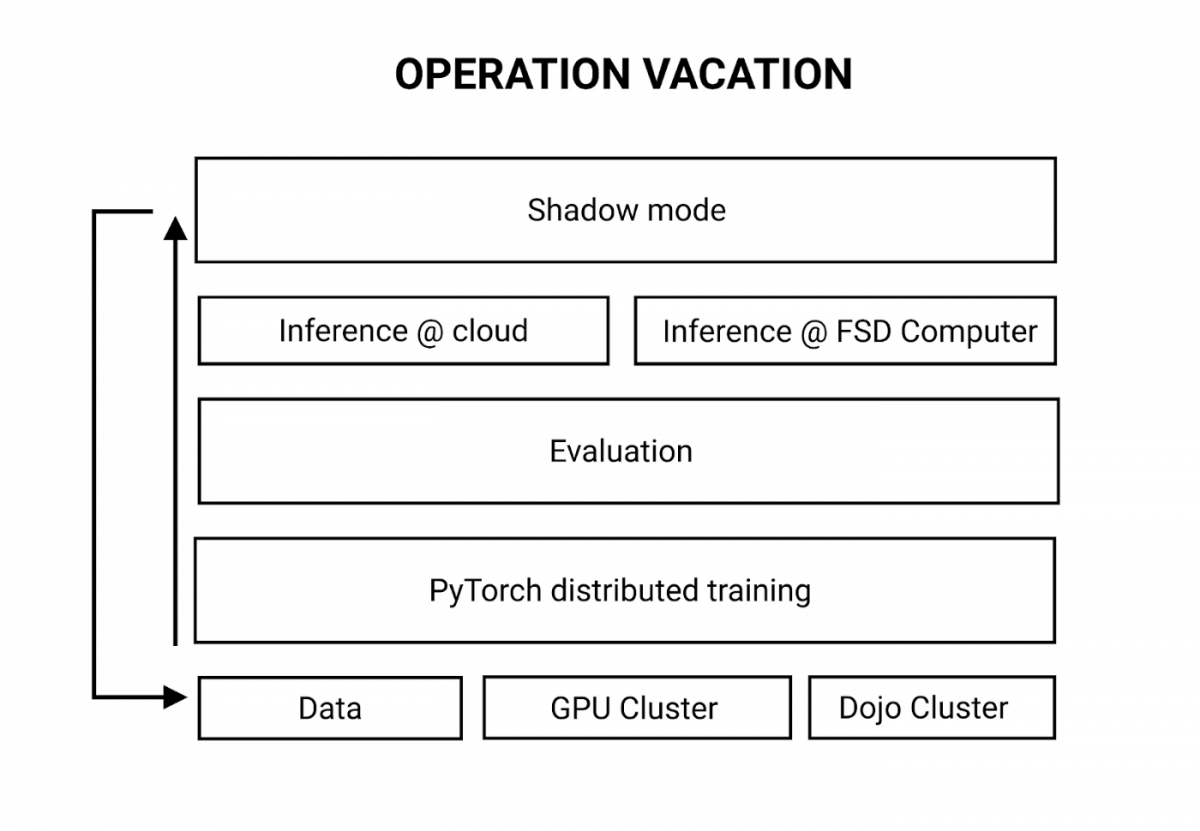

Let's now take a look at the entire process, from data collection to inference:

Let’s define the stack from the bottom to the top.

- Data — Tesla collects data from its fleet of Tesla Vision systems and a team labels it.

- GPU Cluster — Tesla uses multiple GPUs (called a cluster) to train their neural networks and run them.

- DOJO — Tesla uses something they call DOJO to train only a part of the whole architecture for a specific task.

- PyTorch Distributed Training — Tesla uses PyTorch for training.

- Evaluation — Tesla evaluates network training using loss functions.

- Cloud Inference — Cloud processing allows Tesla to improve its fleet of vehicles at the same time.

- Inference @FSD — Tesla built its own computer that has its own Neural Processing Unit (NPU) and GPUs for inference.

- Shadow Mode — Tesla collects results from the vehicles and compares them with the predictions to help improve annotations: it’s a closed-loop!
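Shadow mode can be pictured as a comparison running silently in the car: the network predicts what it would do, the human drives, and clips where the two disagree are flagged for upload and labeling. The sketch below compares steering angles; the `ShadowRecord` fields, the 5-degree threshold, and the choice of steering as the compared signal are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ShadowRecord:
    clip_id: str
    predicted_steering_deg: float  # what the network would have done
    driver_steering_deg: float     # what the human driver actually did


def flag_disagreements(records: List[ShadowRecord],
                       threshold_deg: float = 5.0) -> List[str]:
    """Return IDs of clips where the model and the driver disagree."""
    return [
        r.clip_id
        for r in records
        if abs(r.predicted_steering_deg - r.driver_steering_deg) > threshold_deg
    ]


records = [
    ShadowRecord("clip_a", 2.0, 1.5),    # agreement
    ShadowRecord("clip_b", -10.0, 4.0),  # model wanted to steer the other way
    ShadowRecord("clip_c", 0.0, 0.0),
]
print(flag_disagreements(records))  # ['clip_b']
```

The flagged clips are the situations the current model handles worst, which is what makes them the most valuable ones to label and feed back into training.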

Here’s the summary of everything we just discussed:

- Tesla is working on 50 tasks simultaneously, which must all run on a very small computer called FSD (Full Self-Driving).

- Their Autopilot system is based on Tesla Vision: 8 cameras that are fused together.

- On top of Tesla Vision, a HydraNet architecture is used: 1 giant neural network does all the tasks!

- This neural net is trained with PyTorch and a pool of workers to speed up results.

- Finally, a complete loop is implemented: the drivers collect data, Tesla labels that real-world data, and trains its system on it.

Elon Musk has built an incredible company which, at the time of writing, was valued at more than many of the world's largest car companies combined. If you'd like to learn more about them, please read these 3 articles:

- Tesla's HydraNets - How Tesla's Autopilot Works

- Tesla vs. Waymo - Two Opposite Visions

- A Look at Tesla's Occupancy Networks