9 Types of Sensor Fusion Algorithms

In autonomous vehicles, Sensor Fusion is the process of fusing data coming from multiple sensors. This step is mandatory in robotics because it provides more reliability, redundancy, and ultimately, safety.

To understand this better, let's consider a simple example of a LiDAR and a Camera both looking at a pedestrian 🚶🏻.

- If one of the two sensors doesn't see the pedestrian, we'll use the other as a crutch to increase our chances of detecting it. We're doing REDUNDANCY.

- If both are detecting the pedestrian, Sensor Fusion will give us a more accurate and confident estimate of the pedestrian's position... using the noise values of both sensors.

Since sensors are noisy, sensor fusion algorithms are designed to account for that noise and produce the most precise estimate possible.
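To see what "using the noise values of both sensors" can mean in practice, here's a minimal Python sketch of inverse-variance weighting, one standard way to fuse two independent measurements of the same quantity (it's also what a single Kalman filter update boils down to for one static value). The function name and all the numbers are hypothetical.

```python
from typing import Tuple


def fuse_measurements(x1: float, var1: float, x2: float, var2: float) -> Tuple[float, float]:
    """Fuse two independent, unbiased measurements of the same quantity.

    Each measurement is weighted by the inverse of its variance, so the
    less noisy sensor has more influence on the result.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * x1 + w2 * x2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)  # always smaller than both var1 and var2
    return fused, fused_var


# Hypothetical distance to the pedestrian, in meters, with each sensor's variance.
lidar_x, lidar_var = 10.2, 0.01    # LiDAR: precise range
camera_x, camera_var = 10.8, 0.25  # camera: noisier depth estimate

x, var = fuse_measurements(lidar_x, lidar_var, camera_x, camera_var)
print(f"fused distance: {x:.3f} m, variance: {var:.4f}")
```

The fused estimate lands close to the LiDAR reading because its variance is 25 times smaller, and the fused variance is lower than either sensor's alone.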

When fusing sensors, we're actually fusing sensor data, or doing what's called data fusion. There are several ways to build a data fusion algorithm. In fact, there are 9. These 9 ways are separated into 3 families.

In this article, we'll focus on the 3 types of Sensor Fusion classification, and the 9 types of Sensor Fusion algorithms.

I - Sensor Fusion by Abstraction Level

The most common way to classify fusion is by abstraction level. In this case, we're asking the question "When should we do the fusion?"

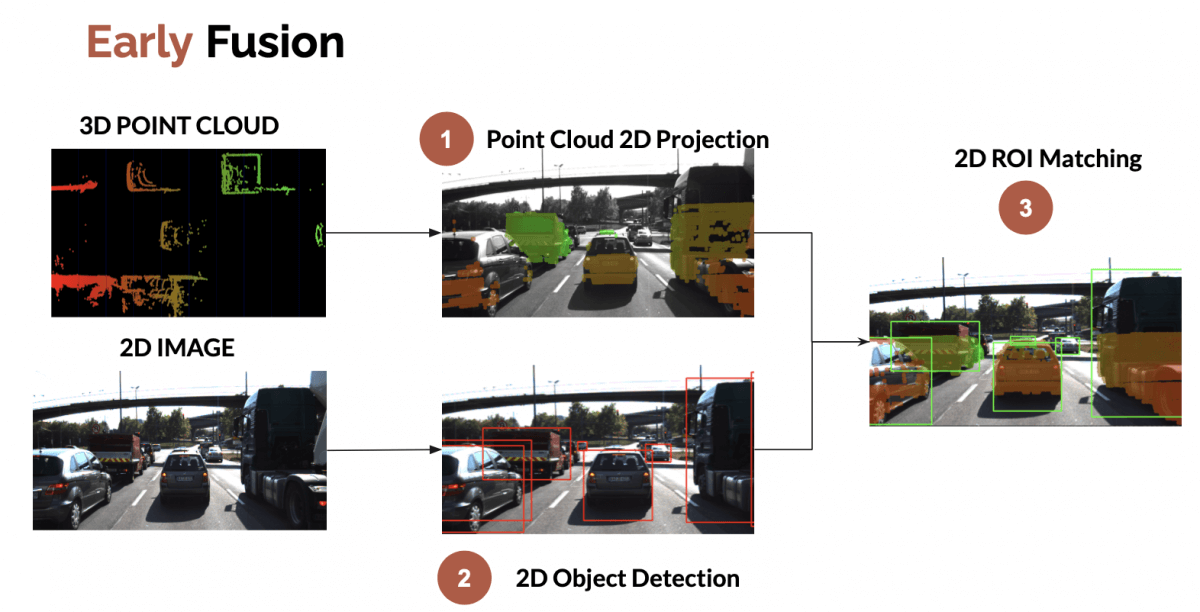

In my article on LiDAR and Camera Fusion, I describe two processes called EARLY and LATE fusion.

In the industry, people use other names for this: Low-Level, Mid-Level, and High-Level Sensor Fusion.

Low Level Fusion - Fusing the RAW DATA

Low Level Sensor Fusion is about fusing the raw data coming from multiple sensors. For example, we fuse point clouds coming from LiDARs and pixels coming from cameras.

✅ This type of fusion has a lot of potential for the coming years, since it considers all the data.

❌ Early Fusion (Low-Level) was very hard to do until a few years ago because the processing required is huge: many times per second, we may have to fuse hundreds of thousands of points with hundreds of thousands of pixels.

Here's an example of Low-Level Fusion for a camera and a LiDAR.

Object detection is used in the process, but what really does the job is projecting the 3D point cloud into the image and then associating each projected point with the pixel it lands on.
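Here's a minimal sketch of that projection step in Python, assuming a standard pinhole camera model and a known LiDAR-to-camera calibration. The rotation, offset, intrinsics, image size, and function names are all hypothetical placeholders; a real system would load them from its calibration. Each point is moved into the camera frame, projected onto the image plane, and kept only if it lands inside the image. The result is a sparse depth map that associates pixels with LiDAR depths.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical calibration values; real ones come from a LiDAR-camera calibration.
# Rotation from the LiDAR frame (x forward, y left, z up)
# to the camera frame (x right, y down, z forward).
R_LIDAR_TO_CAM = (
    (0.0, -1.0, 0.0),
    (0.0, 0.0, -1.0),
    (1.0, 0.0, 0.0),
)
T_LIDAR_TO_CAM = (0.0, 0.2, 0.0)  # hypothetical mounting offset (m), camera frame
FX, FY, CX, CY = 700.0, 700.0, 640.0, 360.0  # hypothetical intrinsics (pixels)
WIDTH, HEIGHT = 1280, 720


def project_points(points: List[Vec3]) -> List[Tuple[int, int, float]]:
    """Project LiDAR points into the image, returning (u, v, depth) for visible points."""
    projected = []
    for p in points:
        # Rigid transform into the camera frame: p_cam = R * p_lidar + t
        x, y, z = (
            sum(R_LIDAR_TO_CAM[i][j] * p[j] for j in range(3)) + T_LIDAR_TO_CAM[i]
            for i in range(3)
        )
        if z <= 0.0:
            continue  # point is behind the camera
        # Pinhole projection onto the image plane
        u = FX * x / z + CX
        v = FY * y / z + CY
        if 0 <= u < WIDTH and 0 <= v < HEIGHT:
            projected.append((int(u), int(v), z))
    return projected


def sparse_depth_map(points: List[Vec3]) -> Dict[Tuple[int, int], float]:
    """Associate each projected point with its pixel, keeping the closest depth."""
    depth: Dict[Tuple[int, int], float] = {}
    for u, v, z in project_points(points):
        if (u, v) not in depth or z < depth[(u, v)]:
            depth[(u, v)] = z
    return depth


# Made-up points: one in view, one behind the car, one far off to the side.
cloud = [(10.0, 0.0, 0.0), (-5.0, 0.0, 0.0), (10.0, 20.0, 0.0)]
print(sparse_depth_map(cloud))
```

Once the pixels that received a point carry a depth, the camera's detections can read 3D information directly from them.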

Mid Level Fusion - Fusing the DETECTIONS

Mid-Level sensor fusion is about fusing the objects detected independently by each sensor.

If a camera detects an obstacle and a radar detects it too, we'll fuse these results to get the best estimate of the obstacle's position, class, and velocity. A Kalman Filter (a Bayesian algorithm) is generally the approach used, as I explain in this article.
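Here's a minimal Python sketch of that idea, assuming a single obstacle moving along one axis, a constant-velocity motion model, and that both the camera and the radar report only its position. The class name, noise values, and detections are hypothetical. Each detection, whichever sensor it comes from, goes through the same predict/update cycle, and its variance decides how much it moves the estimate.

```python
class PositionKalmanFilter:
    """1D constant-velocity Kalman filter; state = [position, velocity]."""

    def __init__(self, pos, vel, pos_var, vel_var, q_pos=0.05, q_vel=0.5):
        self.x = [pos, vel]
        self.P = [[pos_var, 0.0], [0.0, vel_var]]
        # Hypothetical process-noise intensities, scaled by dt in predict().
        self.q_pos = q_pos
        self.q_vel = q_vel

    def predict(self, dt):
        """Propagate with x' = F x, P' = F P F^T + Q, where F = [[1, dt], [0, 1]]."""
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q_pos * dt, b + dt * d],
            [c + dt * d, d + self.q_vel * dt],
        ]

    def update_position(self, z, r):
        """Fuse one position measurement z with noise variance r (H = [1, 0])."""
        (a, b), (c, d) = self.P
        s = a + r              # innovation variance
        k0, k1 = a / s, c / s  # Kalman gain
        y = z - self.x[0]      # innovation: measurement minus prediction
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]


# Hypothetical noise variances (m^2): here the radar range is less noisy.
NOISE = {"camera": 1.0, "radar": 0.25}

# Made-up, time-stamped detections of the same obstacle: (time in s, sensor, position in m)
detections = [(0.00, "camera", 20.3), (0.05, "radar", 19.9),
              (0.10, "camera", 19.6), (0.15, "radar", 19.4)]

kf = PositionKalmanFilter(pos=20.0, vel=0.0, pos_var=4.0, vel_var=25.0)
last_t = 0.0
for t, sensor, z in detections:
    kf.predict(t - last_t)
    kf.update_position(z, NOISE[sensor])
    last_t = t
    print(f"{sensor:>6} t={t:.2f}s  pos={kf.x[0]:.2f} m  vel={kf.x[1]:.2f} m/s  var={kf.P[0][0]:.3f}")
```

Because the filter handles one detection at a time, the camera and the radar don't need to be synchronized. A real radar would usually also report radial velocity, which this sketch ignores.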

✅ This process is easy to understand, and several implementations already exist.

❌ It relies heavily on the detectors. If one fails, the whole fusion can fail. Kalman Filters to the rescue!

Here's Mid-Level Sensor fusion on the same example as before.

In this example, we're fusing 3D bounding boxes from a LiDAR with 2D bounding boxes from a camera object detection algorithm. The process works, but it could also be reversed: we could project the 3D LiDAR result into 2D and do the data fusion in 2D.
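To illustrate the reversed, 2D version, here's a minimal Python sketch: it projects the 8 corners of a LiDAR 3D box into the image with a pinhole model, takes their 2D bounding rectangle, and compares it to the camera's box with Intersection over Union (IoU). The intrinsics, box values, 0.5 matching threshold, and function names are hypothetical, and for brevity the 3D box is assumed to be axis-aligned and already expressed in the camera frame (x right, y down, z forward).

```python
from itertools import product

FX, FY, CX, CY = 700.0, 700.0, 640.0, 360.0  # hypothetical camera intrinsics (pixels)


def project_box_3d(center, size):
    """Project an axis-aligned 3D box (camera frame) to a 2D box (u1, v1, u2, v2).

    Assumes every corner is in front of the camera (z > 0).
    """
    x0, y0, z0 = center
    w, h, l = size  # extents along x (right), y (down) and z (forward), in meters
    us, vs = [], []
    for dx, dy, dz in product((-0.5, 0.5), repeat=3):  # the 8 corners
        x, y, z = x0 + dx * w, y0 + dy * h, z0 + dz * l
        us.append(FX * x / z + CX)
        vs.append(FY * y / z + CY)
    return min(us), min(vs), max(us), max(vs)


def iou(a, b):
    """Intersection over Union of two 2D boxes given as (u1, v1, u2, v2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# Made-up detections of the same pedestrian.
lidar_box = project_box_3d(center=(0.0, 0.5, 10.0), size=(0.6, 1.8, 0.6))
camera_box = (615.0, 325.0, 665.0, 465.0)

score = iou(lidar_box, camera_box)
print(f"IoU = {score:.2f}, same object: {score > 0.5}")  # 0.5 is a hypothetical threshold
```

If the IoU is high enough, the two detections are treated as the same object, and their attributes (for example, the LiDAR distance and the camera class) can be merged.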

High Level Fusion - Fusing the TRACKS

Finally, High-Level sensor fusion is about fusing both objects and their trajectories. We're not only relying on detections, but also on predictions and tracking.

✅ This process is one level higher, and thus benefits from the same simplicity advantages.

❌ One major issue is that we might lose too much information. If our tracking is wrong, the whole thing is wrong.
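One simple way to fuse tracks, sketched below in Python, is to treat each track's state and covariance as an independent estimate and combine them in information form (using the inverse of the covariance). The state layout [position, velocity], the function names, and the track values are hypothetical; in this made-up example, the radar track is more certain about velocity and the camera track about position.

```python
def inv2(m):
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(m, v):
    """Multiply a 2x2 matrix by a 2-vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def fuse_tracks(x1, P1, x2, P2):
    """Fuse two track estimates of the same object, assuming independent errors.

    x = [position, velocity], P = 2x2 covariance. Works in information form:
    P = (P1^-1 + P2^-1)^-1 and x = P (P1^-1 x1 + P2^-1 x2).
    """
    I1, I2 = inv2(P1), inv2(P2)  # information matrices
    info = [[I1[i][j] + I2[i][j] for j in range(2)] for i in range(2)]
    P = inv2(info)
    y1, y2 = matvec(I1, x1), matvec(I2, x2)  # information vectors
    x = matvec(P, [y1[0] + y2[0], y1[1] + y2[1]])
    return x, P


# Made-up tracks of the same car.
radar_x, radar_P = [25.4, -3.1], [[0.50, 0.02], [0.02, 0.05]]
camera_x, camera_P = [25.0, -2.5], [[0.20, 0.01], [0.01, 0.60]]

x, P = fuse_tracks(radar_x, radar_P, camera_x, camera_P)
print(f"position {x[0]:.2f} m, velocity {x[1]:.2f} m/s")
```

Two trackers often share the same motion model and sometimes the same data, so their errors can be correlated in practice. This formula ignores that and may end up overconfident; Covariance Intersection is a common alternative when the correlation is unknown.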

Graph of Data Fusion by Abstraction Level between a RADAR and a CAMERA.

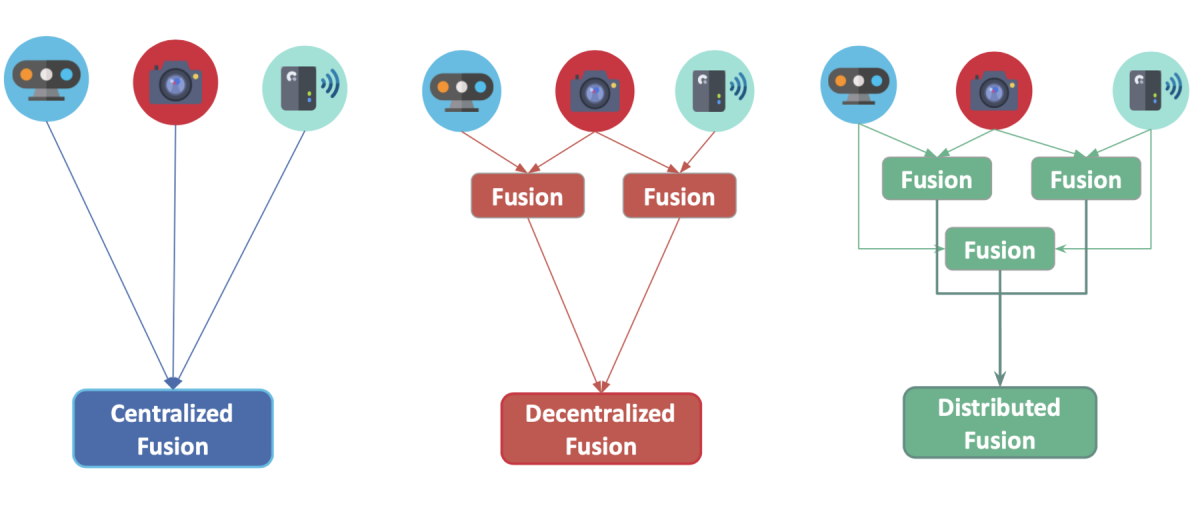

II - Sensor Fusion by Centralization Level

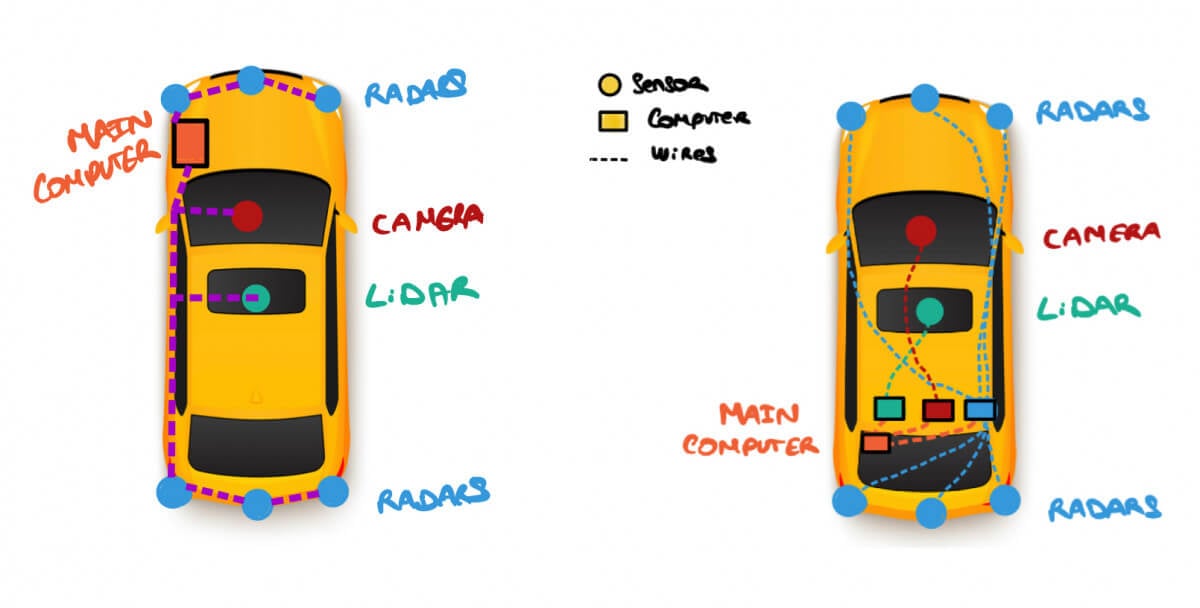

The second way to classify a Fusion algorithm is by centralization level. Here, we're asking the question "Where is the fusion happening?". The main computer can do it, or each sensor can do its own detection and fusion. Some approaches even use something called a Satellite Architecture to do so.

Let's review the 3 types of Fusion.

- Centralized - One central unit deals with the fusion (low-level)

- Decentralized - Each sensor fuses data and forwards it to the next one.

- Distributed - Each sensor processes the data locally and sends it to the next unit (late fusion)
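To make the three architectures more concrete, here's a toy Python model of the list above, with three sensors measuring the distance to the same pedestrian. Everything in it is hypothetical: the readings, the variances, and the simplifying assumption that all noise is independent and Gaussian, so that each fusion step is an inverse-variance weighted average.

```python
from typing import Dict, List, Optional, Tuple

Estimate = Tuple[float, float]  # (value, variance)

# Hypothetical raw range readings to one pedestrian (m), and each sensor's variance.
SENSORS: Dict[str, Tuple[List[float], float]] = {
    "lidar": ([10.10, 10.20, 10.15], 0.01),
    "radar": ([10.40, 10.00], 0.09),
    "camera": ([10.90], 0.25),
}


def fuse(estimates: List[Estimate]) -> Estimate:
    """Inverse-variance fusion of independent estimates of the same quantity."""
    info = sum(1.0 / var for _, var in estimates)
    value = sum(x / var for x, var in estimates) / info
    return value, 1.0 / info


def centralized(sensors) -> Estimate:
    # One central unit receives every raw reading and fuses them all at once.
    raw = [(z, var) for readings, var in sensors.values() for z in readings]
    return fuse(raw)


def decentralized(sensors) -> Estimate:
    # Each sensor fuses its own readings into the estimate received from the
    # previous sensor, then forwards the result to the next one.
    estimate: Optional[Estimate] = None
    for readings, var in sensors.values():
        inputs = [(z, var) for z in readings]
        if estimate is not None:
            inputs.append(estimate)
        estimate = fuse(inputs)
    return estimate


def distributed(sensors) -> Estimate:
    # Each sensor processes its readings locally and sends only a compact
    # (value, variance) summary to the fusion unit.
    local = [fuse([(z, var) for z in readings]) for readings, var in sensors.values()]
    return fuse(local)


for name, method in [("centralized", centralized), ("decentralized", decentralized),
                     ("distributed", distributed)]:
    value, var = method(SENSORS)
    print(f"{name:>13}: {value:.3f} m (variance {var:.5f})")
```

In this idealized setting, all three print the same result, because inverse-variance fusion gives the same answer whatever the grouping. What really differs is what travels between the units: every raw reading, a running estimate passed down the chain, or one compact summary per sensor.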

To give you an example, let's take a classic self-driving vehicle. In this case, each sensor has its own computer. All these computers are linked to a central computing unit.

As opposed to this, Aptiv has developed an architecture they call the Satellite Architecture. Here's the idea: plug many sensors into one central unit, called the Active Safety Domain Controller, which fuses their data and handles the intelligence.

In this process, playing with the position of the sensors and the type of information that transits can help reduce the total weight of the vehicle and scale better with the number of sensors.

Here's what happens on the left image:

- Sensors are just "Satellites": They're here to collect raw data only.

- A 360° fusion happens in the main computer: we don't have to install EXTREMELY GOOD sensors, since no individual detection will happen.

- The detection is done on the 360° picture.

✅ This has several advantages, as you can read in this article.

These were two examples of "Centralized Fusion". The two other types, Decentralized and Distributed fusion, can happen when we have the classic architecture.

Graph of Data Fusion by Abstraction Level between a RADAR and a CAMERA.

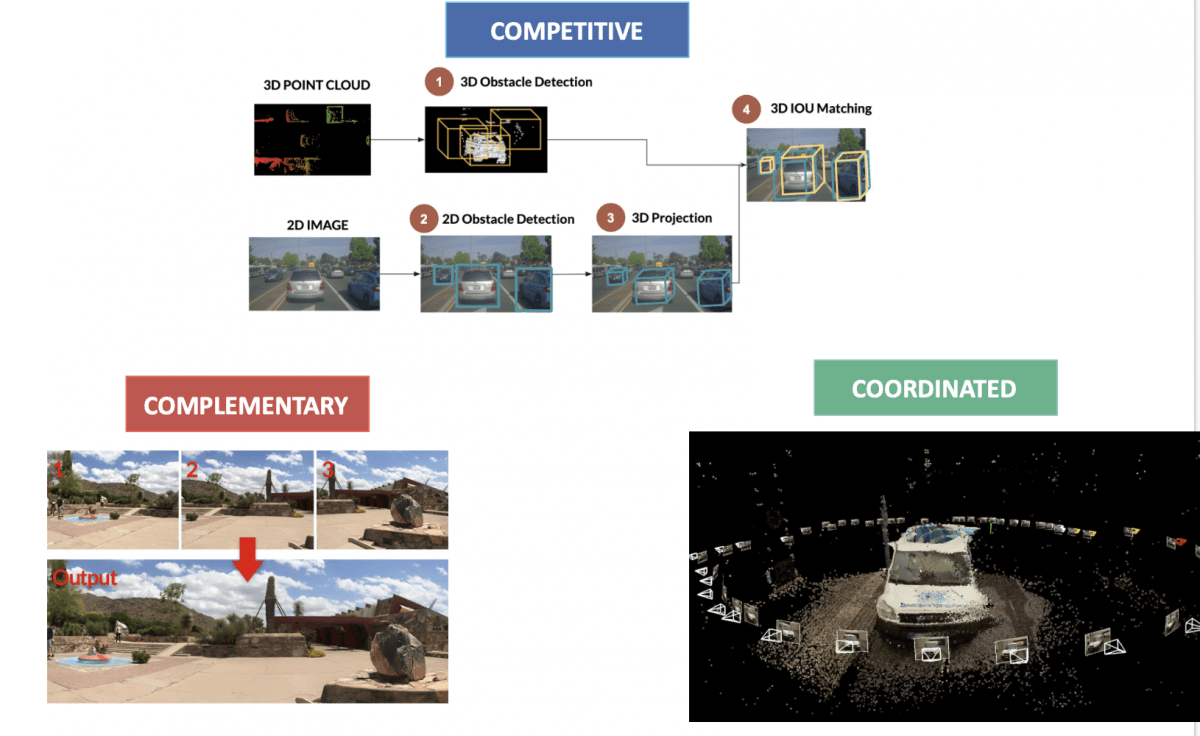

III - Sensor Fusion by Competition Level

The final way to classify a Sensor Fusion algorithm is by Competition Level.

- In Abstraction Level, we were asking "When" should the fusion occur.

- In Centralization Level, it was about "Where".

- In Competition Level, we're asking "What should the fusion do?"

Again, we have 3 possibilities.

Competitive Fusion

Competitive Fusion is when sensors are meant for the same purpose, for example when we use both a RADAR and a LiDAR to detect the presence of a pedestrian. Here, the data fusion process is called redundancy, hence the term "competitive".
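One common way to implement this kind of fusion for detections is to combine each sensor's probability that the pedestrian is present in log-odds form, as occupancy grids do. Here's a minimal Python sketch, assuming the sensors' evidence is conditionally independent and that every sensor uses the same prior; the function names and probabilities are hypothetical.

```python
import math


def logit(p: float) -> float:
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def fuse_presence(probs, prior=0.5):
    """Fuse per-sensor probabilities that a pedestrian is present.

    Assumes the sensors' evidence is conditionally independent and that each
    probability was computed with the same prior.
    """
    log_odds = sum(logit(p) for p in probs) - (len(probs) - 1) * logit(prior)
    return 1.0 / (1.0 + math.exp(-log_odds))


print(fuse_presence([0.7, 0.8]))  # both agree: confidence rises above either sensor
print(fuse_presence([0.8, 0.5]))  # one sensor is uninformative: the other decides
print(fuse_presence([0.9, 0.1]))  # the sensors disagree: their evidence cancels out
```

The second case is redundancy at work: when one sensor has nothing useful to say, the fused result falls back on the other one.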

Complementary Fusion

Complementary fusion is about using different sensors looking at different scenes to get something we couldn't have obtained otherwise, for example when building a panorama with multiple cameras. Since these sensors complete each other, we use the term "complementary".
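For point data, the same idea boils down to bringing every sensor into a common frame. Here's a minimal Python sketch, assuming two hypothetical 2D sensors, one facing forward and one facing backward, with made-up mounting poses (x, y, yaw) on the vehicle. Each sensor only sees its half of the scene, and transforming both into the vehicle frame gives a view neither could provide alone.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def to_vehicle_frame(points: List[Point], mount_x: float, mount_y: float,
                     mount_yaw: float) -> List[Point]:
    """Transform 2D points from a sensor frame into the vehicle frame (x forward, y left)."""
    c, s = math.cos(mount_yaw), math.sin(mount_yaw)
    # Rotate by the sensor's yaw, then translate by its mounting position.
    return [(mount_x + c * x - s * y, mount_y + s * x + c * y) for x, y in points]


# Made-up detections, each in its own sensor frame.
front_points = [(5.0, 1.0), (8.0, -2.0)]  # front sensor at (2.0, 0.0), facing forward
rear_points = [(4.0, 0.5)]                 # rear sensor at (-1.0, 0.0), facing backward

scene = (to_vehicle_frame(front_points, 2.0, 0.0, 0.0)
         + to_vehicle_frame(rear_points, -1.0, 0.0, math.pi))
for x, y in scene:
    print(f"({x:.2f}, {y:.2f})")
```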

Coordinated Fusion

Finally, coordinated fusion is about using two or more sensors to produce a new scene, but this time looking at the same object, for example when doing 3D reconstruction or a 3D scan using 2D sensors.
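Stereo vision is a classic instance: two cameras see the same point, and the horizontal shift (disparity) between its two image positions gives its depth through Z = f * B / d, where f is the focal length in pixels and B the baseline between the cameras. Here's a minimal Python sketch, assuming a rectified stereo pair with identical intrinsics; the function name, focal length, baseline, principal point, and pixel coordinates are hypothetical.

```python
def triangulate(u_left, u_right, v, f, baseline, cx, cy):
    """Recover a 3D point (left camera frame) from a match in a rectified stereo pair."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = f * baseline / disparity  # depth from disparity
    x = (u_left - cx) * z / f     # back-project through the left camera's pinhole model
    y = (v - cy) * z / f
    return x, y, z


# Hypothetical match: the same point seen at u=700 (left) and u=672 (right), row v=400.
x, y, z = triangulate(700.0, 672.0, 400.0, f=700.0, baseline=0.12, cx=640.0, cy=360.0)
print(f"point: ({x:.2f}, {y:.2f}, {z:.2f}) m")  # depth = 700 * 0.12 / 28 = 3.0 m
```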

👉 To learn how to implement this type of fusion, I recommend enrolling in my course on Stereo Vision that does the coordinated fusion of two cameras to produce a 3D result.

I hope this article helps you better understand how to use Sensor Fusion, and how to differentiate between different fusion algorithms.

The fusion is often done by Bayesian algorithms such as Kalman Filters. We can fuse data to estimate the speed, position, or class of an object.

To go further, I recommend two other articles I have on Sensor Fusion:

See you tomorrow! 👋🏻