An Introduction to 3D Object Tracking (Advanced)

Back when I worked on autonomous shuttles, I was once given a mission: to mentor a group of Perception interns.

Among all the projects I oversaw, one clearly stood out: an engineering intern had implemented a paper on 3D Object Detection from cameras.

At the time, all we had was 2D Object Detection, and we were integrating 2D Object Tracking. But once we saw this paper, we started considering going full 3D Object Tracking.

When you look around on LinkedIn, you'll see that most object tracking applications are 2D. But the real world is 3D, and whether you're tracking cars, people, helicopters, or missiles, or doing augmented reality, you'll need to use 3D.

At the CVPR 2022 (Computer Vision and Pattern Recognition) conference, we saw a high number of 3D Object Detection papers, and we're starting to see more and more papers submitted to IEEE (Institute of Electrical and Electronics Engineers) venues or the International Journal of Computer Vision.

In this article, I want to explore the field of 3D Tracking and show you how to design a 3D Tracking system. We'll start from flat 2D, then move to 3D, and see what changes between 2D and 3D Tracking.

What is 3D Object Tracking?

Object Tracking means locating and keeping track of an object's position and orientation in space over time. It involves detecting an object in a sequence of images (or point clouds) and then predicting its location in subsequent frames.

The goal is to continuously estimate the position and orientation of the object, even in the presence of occlusions, camera motion, and changing lighting conditions.

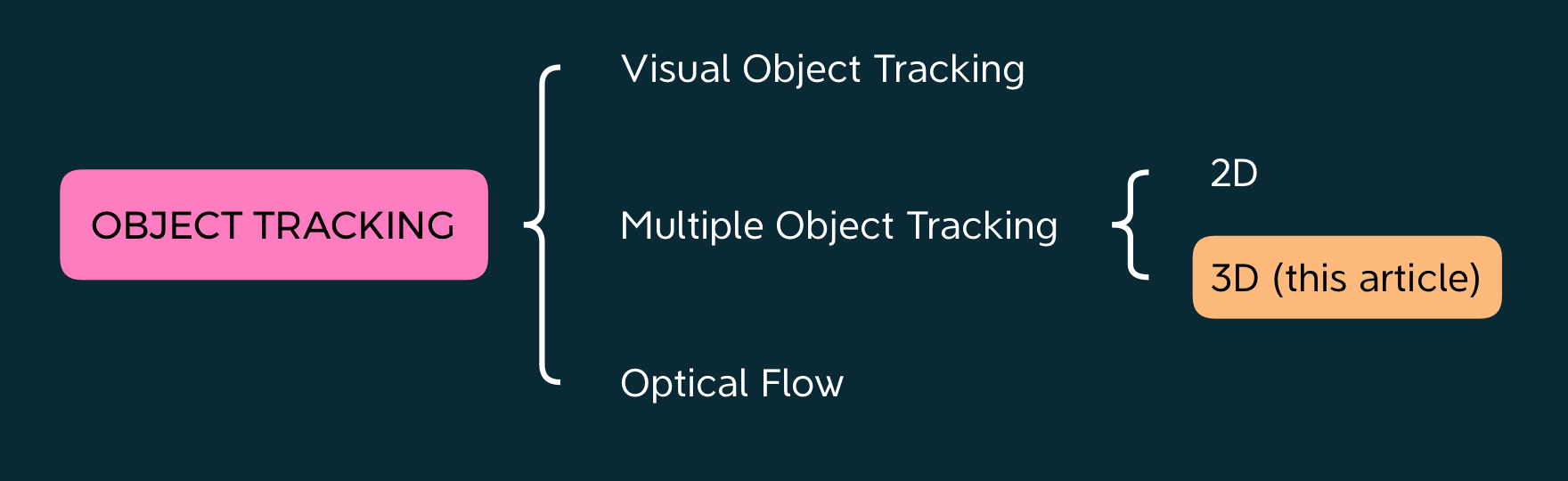

Although many people use "tracking" to mean Multi-Object Tracking, the field of tracking is actually wider, and includes topics such as feature tracking or optical flow. However, the most common approach is 2D Multi-Object Tracking, and in this article, I want to talk about it, but in 3D.

In my article about 2D Tracking, I talk about 2D Object Detection, and then tracking using the Hungarian Algorithm and a Kalman Filter. In this article, we'll see how to extend this to 3D, starting with object detection.

From 2D to 3D Object Detection

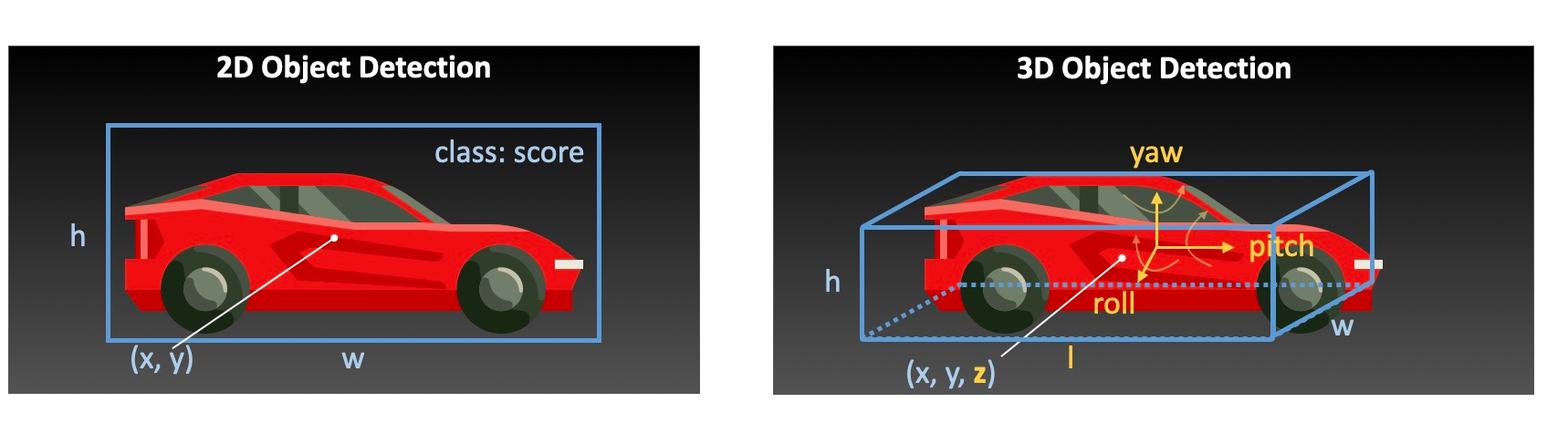

Most of us are used to 2D Object Detection, which is the task of predicting bounding box coordinates around objects of interest, such as cars, pedestrians, or bicycles, from images. Although 2D object detection is possibly the most popular technique in the entire Computer Vision field, it falls short when you want to use it in the real world: a 2D box tells you where an object is in the image, but not how far away it is, how big it is, or which way it's facing.

The first thing that changes in 3D is the bounding box:

From 2D to 3D Bounding Boxes

This part can confuse more than one person, but a 3D box really is different from a 2D box. Rather than just predicting 4 pixel values in the image frame, we're now in the camera frame, predicting the box's exact XYZ position (including depth), its length, width, and height, as well as its yaw, pitch, and roll angles.

To simplify things, we can assume some values to be constant, such as pitch and roll (an assumption that gets shaky in hilly cities like San Francisco), but 3D bounding boxes are oriented by nature.
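To make the difference concrete, here is a minimal sketch of a 3D box as a data structure: 7 numbers (center, dimensions, yaw) instead of the 4 pixel values of a 2D box. It assumes pitch and roll are 0 and a z-up frame, as in LiDAR coordinates; in KITTI's camera frame, the vertical axis is y (pointing down) and the yaw rotates around it. The `Box3D` class and its `corners()` method are illustrative names, not from any specific library.

```python
import math
from dataclasses import dataclass


@dataclass
class Box3D:
    # Center of the box in meters (assumed z-up frame)
    x: float
    y: float
    z: float
    # Dimensions in meters
    l: float  # length, along the heading direction
    w: float  # width
    h: float  # height
    # Heading in radians around the vertical axis; pitch and roll assumed to be 0
    yaw: float

    def corners(self):
        """Return the 8 corners (x, y, z) of the oriented box."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        pts = []
        for dx in (self.l / 2, -self.l / 2):
            for dy in (self.w / 2, -self.w / 2):
                for dz in (self.h / 2, -self.h / 2):
                    # Rotate the offset around the vertical axis, then translate
                    pts.append((self.x + c * dx - s * dy,
                                self.y + s * dx + c * dy,
                                self.z + dz))
        return pts


car = Box3D(x=10.0, y=2.0, z=0.8, l=4.0, w=1.8, h=1.6, yaw=math.radians(30))
print(car.corners()[0])  # front-left-top corner, rotated by 30 degrees
```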

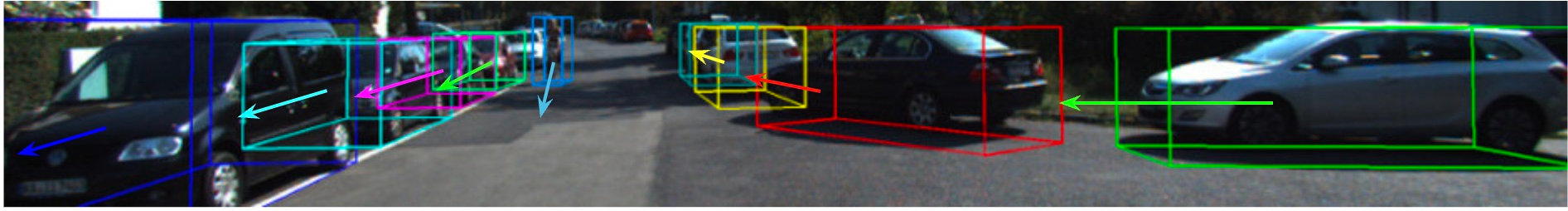

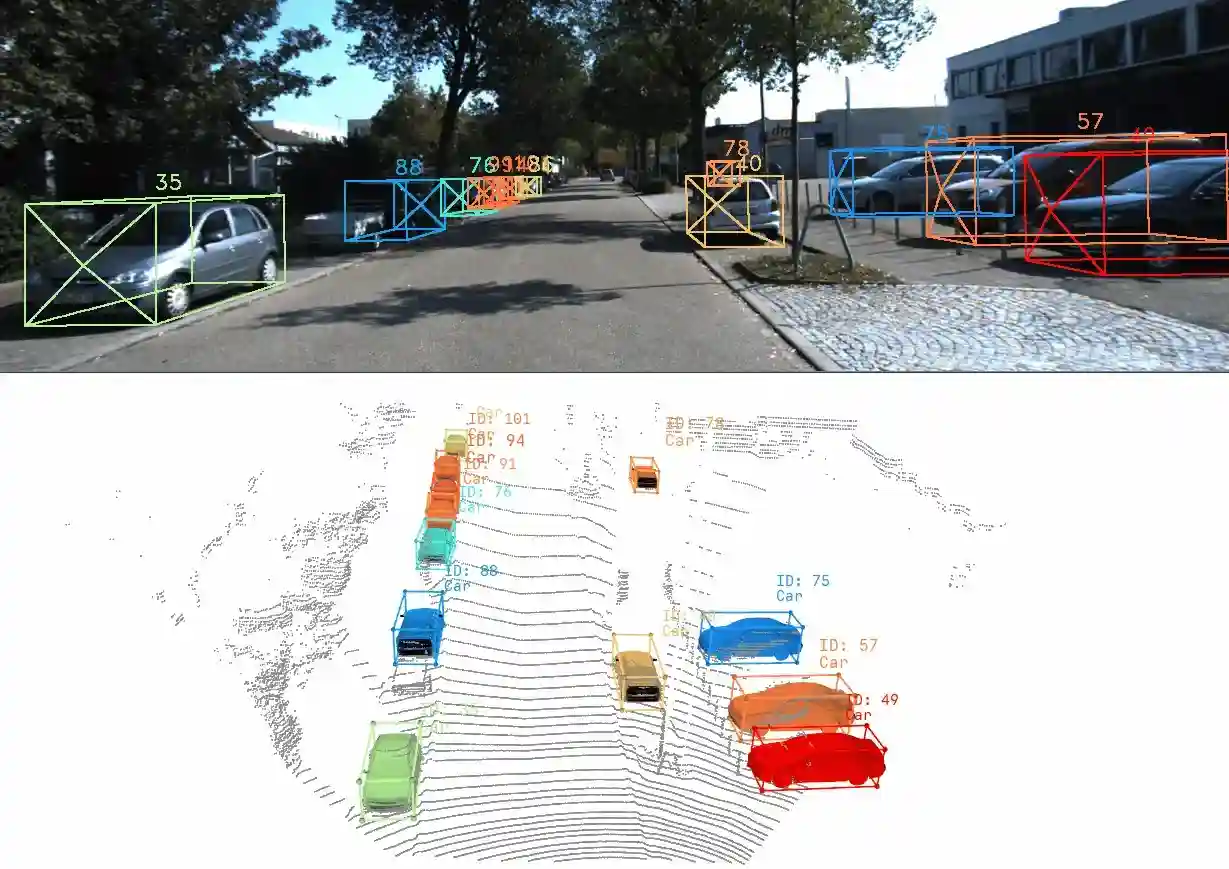

An example from the KITTI Dataset; notice the orientations I added.

In 2D, you didn't have to predict these orientations, and your bounding boxes were much simpler. But if you're doing 3D object tracking, you'll need to deal with 3D boxes.

Next, let's see how to generate these boxes.

Generating a 3D Bounding Box in Computer Vision

If we're working with cameras, 3D Object Detection can be based either on a single image (monocular) or on a stereo vision setup. You can learn more about stereo vision through my article here. Usually, these algorithms send an image to a model that directly outputs a 3D box.

Here is an example of monocular 3D detection with an algorithm named YOLO3D.

Whether it's mono or stereo, many 3D object detectors exist, and many companies already use 3D Object Detection on cameras.

Bonus — A quick list of camera based 3D detectors: 3D Bounding Box Estimation Using Deep Learning and Geometry (the one my intern implemented, 2017), M3D-RPN: Monocular 3D Region Proposal Network for Object Detection (2019), FCOS3D: Fully Convolutional One-Stage Monocular 3D Object Detection (2021).

Generating a 3D Bounding Box from a Point Cloud

When using a LiDAR, we'll use algorithms such as PointRCNN, which output 3D boxes directly from a point cloud.

For example, here is an algorithm called 3D Cascade R-CNN, doing 3D Object Detection from a LiDAR.

Bonus — A quick list of LiDAR Detection algorithms you can search: AVOD (2018), Frustum PointNet (2018), PointRCNN (2019), IOU (2020), PV-RCNN (2021), Cas-A (2022).

Next, let's move to tracking:

3D Tracking: How do you 3D Track an Object?

In the field of object tracking, you usually have 2 approaches:

- Separate Trackers — We perform tracking by detection; we first use an object detector, and then track its output image by image.

- Joint Trackers — We do joint detection and 3D object tracking by sending 2 images (or point clouds) to a Deep Learning model.

Since we've spent a lot of time on object detection already, let's continue from 3D bounding boxes.

3D Object Tracking from 3D Object Detection

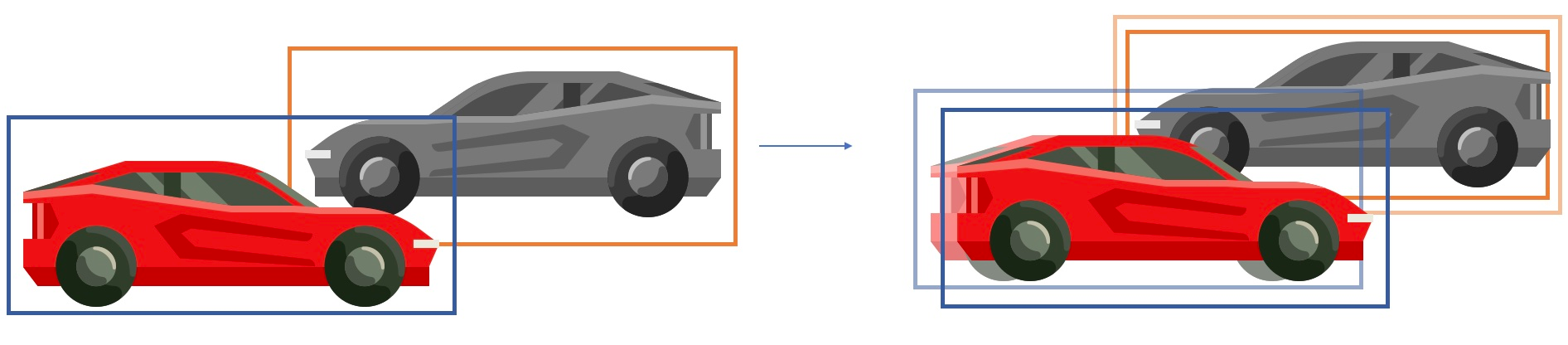

In 2D Object Tracking (separate tracker), the tracking pipeline is as follows:

Given detections at 2 consecutive timesteps...

- Compute the IOU in 2D (or any other cost, such as shape of the boxes or appearance metrics)

- Put that into a Bipartite Graph Algorithm, like the Hungarian Algorithm.

- Keep the best matches and assign the colors/IDs

- Use a Kalman Filter to predict the next position (and thus have a more accurate association at the next step)
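As a sketch of the first step of this pipeline, the snippet below computes the 2D IOU between every box at t-1 and every box at t. It assumes axis-aligned boxes given as `(x1, y1, x2, y2)` pixel coordinates; `iou_2d` and `iou_matrix` are hypothetical helper names.

```python
def iou_2d(a, b):
    """IOU of two axis-aligned boxes given as (x1, y1, x2, y2) in pixels."""
    # Width and height of the intersection rectangle (0 if the boxes don't touch)
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def iou_matrix(prev_boxes, curr_boxes):
    """Rows: boxes at t-1. Columns: boxes at t."""
    return [[iou_2d(p, c) for c in curr_boxes] for p in prev_boxes]


prev = [(100, 100, 200, 200), (300, 120, 380, 220)]  # boxes at t-1
curr = [(305, 125, 385, 225), (110, 105, 210, 205)]  # boxes at t
m = iou_matrix(prev, curr)
```

Each row of `m` is a box from t-1 and each column a box from t; this matrix is what the association step consumes.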

We'll do exactly this in 3D, but 2 things will change:

- The IOU

- The Kalman Filter

The Hungarian Algorithm, which decides which detection goes with which track in the MOT task, will remain unchanged.

Why the Hungarian Algorithm shouldn't change

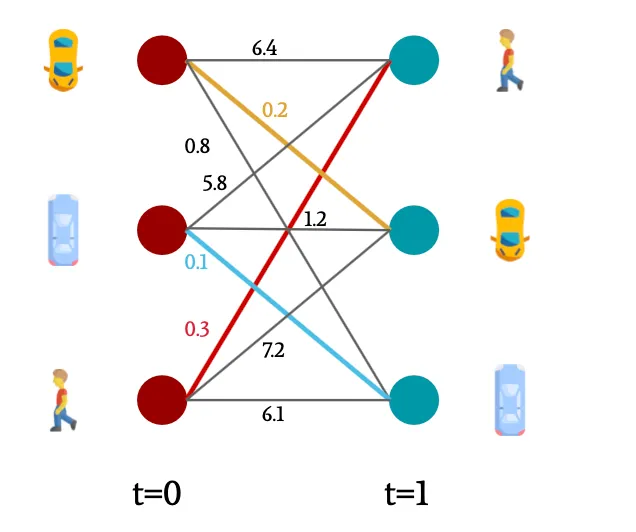

When we track a bounding box, what we usually do is compute the IOU (Intersection Over Union) of the boxes from image to image. If the IOU is high (the boxes overlap), it likely means the object is the same and moved a bit, so we should keep tracking it. If not, it likely means it's a different object. We can also track multiple objects using bipartite graphs; you can find my entire explanation here.

The goal of the Hungarian Algorithm is to take 2 lists of obstacles (from t-1 and t) and return the associations that minimize a total cost. This cost can be based on IOU, but whether it's 2D or 3D IOU, the association step is exactly the same.
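Here is a minimal pure-Python sketch of that association step. `hungarian` is the classic Kuhn-Munkres (Hungarian) algorithm in its O(n³) shortest-augmenting-path form with row and column potentials; `associate` turns an IOU matrix into a cost (1 - IOU), solves the assignment, and drops pairs below a hypothetical `iou_threshold` of 0.3. Because it only sees a matrix, the same code works for 2D or 3D IOU. In practice, you would likely call `scipy.optimize.linear_sum_assignment` instead of writing your own solver.

```python
def hungarian(cost):
    """Solve the assignment problem on a cost matrix (list of rows).

    Returns `assign`, where assign[i] is the column matched to row i, or -1.
    """
    n = len(cost)
    if n == 0:
        return []
    m = len(cost[0])
    if n > m:
        # More rows than columns: solve the transposed problem and invert it
        transposed = [[cost[i][j] for i in range(n)] for j in range(m)]
        assign = [-1] * n
        for j, i in enumerate(hungarian(transposed)):
            assign[i] = j
        return assign

    inf = float("inf")
    u = [0.0] * (n + 1)   # row potentials (1-indexed)
    v = [0.0] * (m + 1)   # column potentials
    p = [0] * (m + 1)     # p[j] = row matched to column j (0 = free)
    way = [0] * (m + 1)   # previous column on the augmenting path
    for i in range(1, n + 1):
        p[0], j0 = i, 0
        minv = [inf] * (m + 1)
        used = [False] * (m + 1)
        while True:
            # Grow the alternating tree along the cheapest reduced-cost edge
            used[j0] = True
            i0, delta, j1 = p[j0], inf, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            # Shift the potentials so the chosen edge gets a zero reduced cost
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:  # reached a free column
                break
        # Flip the matching along the augmenting path
        while j0:
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1

    assign = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            assign[p[j] - 1] = j - 1
    return assign


def associate(iou_matrix, iou_threshold=0.3):
    """Match tracks (rows, t-1) to detections (columns, t); keep pairs with enough overlap."""
    cost = [[1.0 - iou for iou in row] for row in iou_matrix]
    return [(i, j) for i, j in enumerate(hungarian(cost))
            if j != -1 and iou_matrix[i][j] >= iou_threshold]


print(associate([[0.1, 0.8], [0.7, 0.0]]))  # [(0, 1), (1, 0)]
```

Tracks left without a match would typically be kept alive for a few frames, and unmatched detections would start new tracks; that bookkeeping is left out here.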

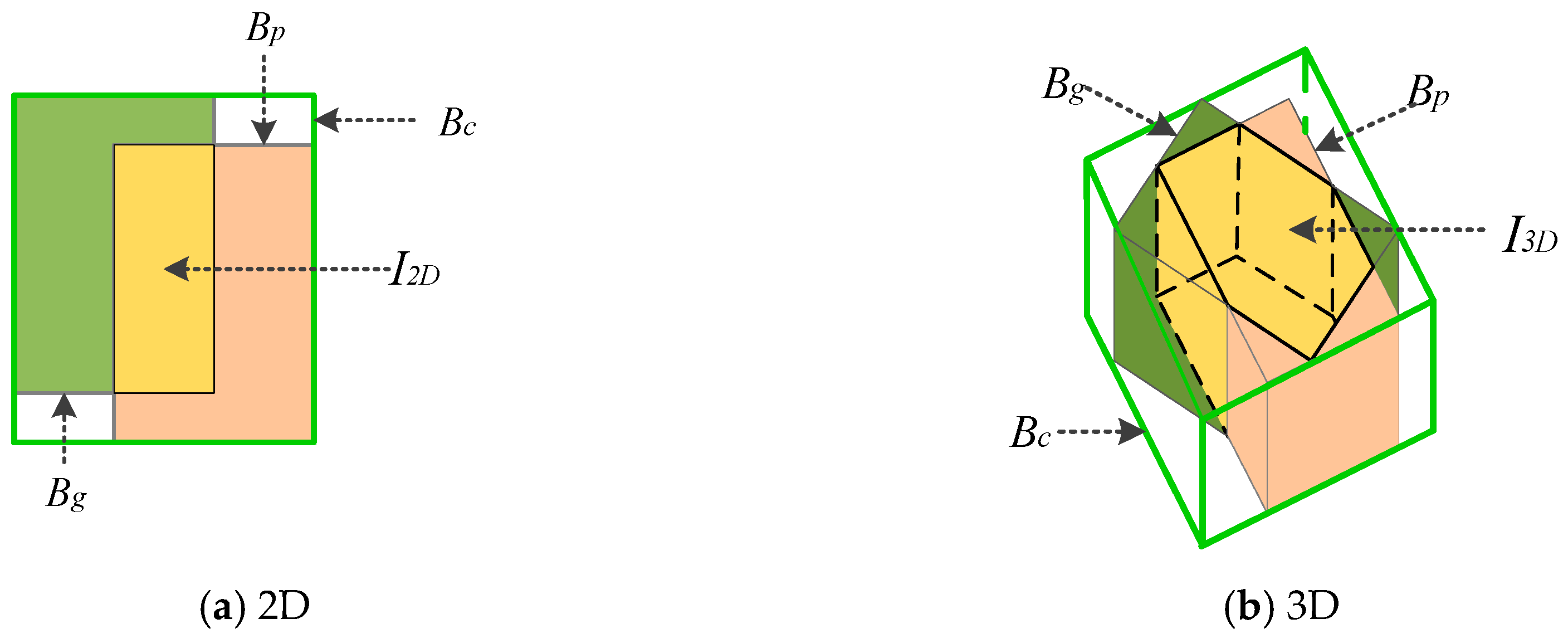

Introduction to 3D IOU (Intersection Over Union)

The Intersection Over Union (IOU) measures how much a box from time t-1 overlaps with a box from time t: the area of their intersection divided by the area of their union. Although it isn't enough to guarantee that 2 boxes should match, it is the most popular criterion, and one we can easily set up.

If we want to move from 2D to 3D, we must understand how to compute the 3D IOU, so instead of comparing areas, we now compare volumes:

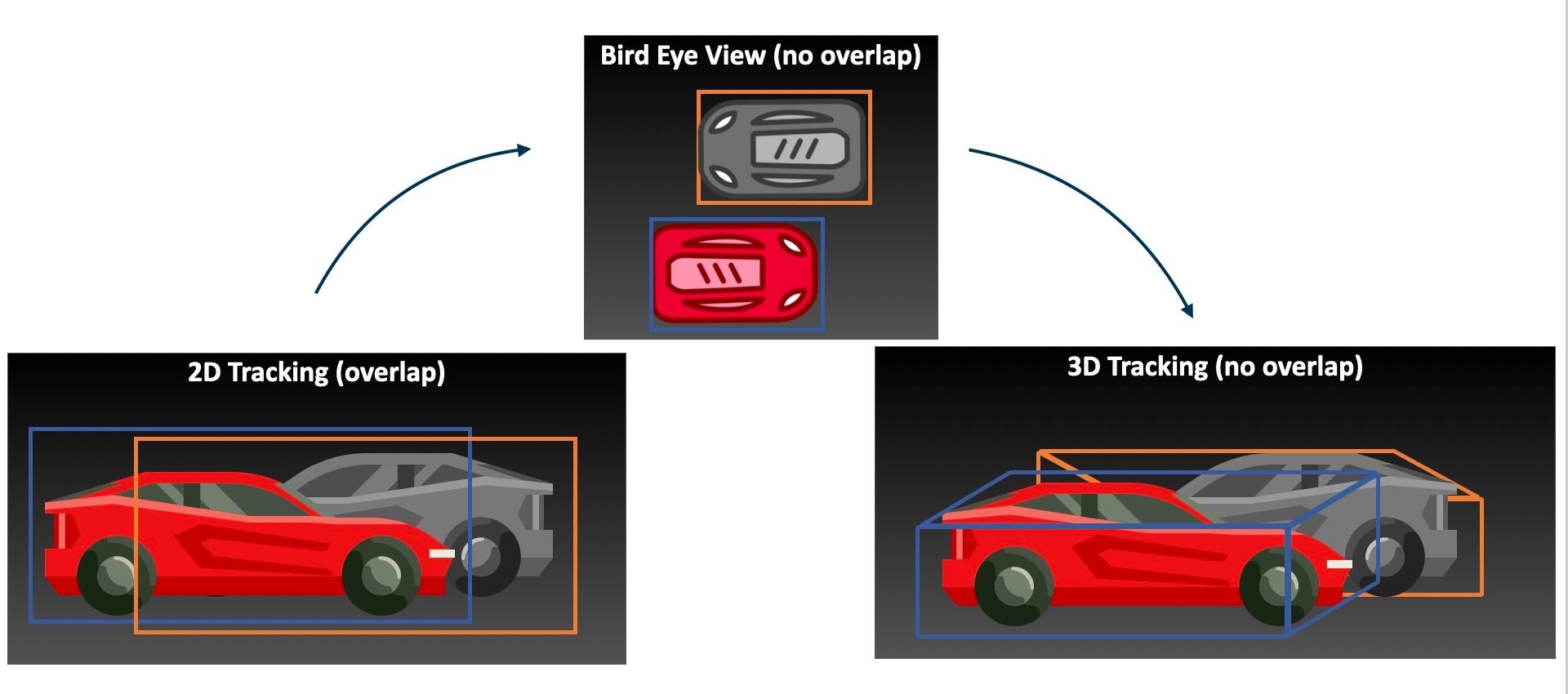

Here's a cool picture to visualize the difference between 2D and 3D IOU:

Today, many one-line implementations exist for the 3D IOU, and we really are just dividing the intersection by the union.
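To show what "dividing the intersection by the union" involves for oriented boxes, here is a from-scratch sketch, assuming boxes are `(x, y, z, l, w, h, yaw)` in a z-up frame with pitch and roll at 0 (as in many LiDAR trackers). Under that assumption, the intersection volume is the overlap area of the two footprints seen from above (clipped with the Sutherland-Hodgman algorithm) multiplied by the overlap in height. Function names are illustrative.

```python
import math


def bev_corners(box):
    """Ground-plane footprint of box = (x, y, z, l, w, h, yaw), counter-clockwise."""
    x, y, _, l, w, _, yaw = box
    c, s = math.cos(yaw), math.sin(yaw)
    return [(x + c * dx - s * dy, y + s * dx + c * dy)
            for dx, dy in ((l / 2, w / 2), (-l / 2, w / 2), (-l / 2, -w / 2), (l / 2, -w / 2))]


def clip(subject, clipper):
    """Sutherland-Hodgman: intersect a polygon with a convex, counter-clockwise clipper."""
    def inside(p, a, b):  # p lies left of (or on) the directed edge a -> b
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def cross_point(p, q, a, b):  # where segment p-q crosses the line through a and b
        den = (p[0] - q[0]) * (a[1] - b[1]) - (p[1] - q[1]) * (a[0] - b[0])
        t = ((p[0] - a[0]) * (a[1] - b[1]) - (p[1] - a[1]) * (a[0] - b[0])) / den
        return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))

    output = subject
    for k in range(len(clipper)):
        a, b = clipper[k], clipper[(k + 1) % len(clipper)]
        polygon, output = output, []
        for j in range(len(polygon)):
            cur, prev = polygon[j], polygon[j - 1]
            if inside(cur, a, b):
                if not inside(prev, a, b):
                    output.append(cross_point(prev, cur, a, b))
                output.append(cur)
            elif inside(prev, a, b):
                output.append(cross_point(prev, cur, a, b))
    return output


def polygon_area(poly):
    """Shoelace formula; 0 for an empty polygon."""
    n = len(poly)
    return 0.5 * abs(sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
                         for i in range(n)))


def iou_3d(a, b):
    """3D IOU of two yaw-only boxes (x, y, z, l, w, h, yaw), z being the center height."""
    inter_area = polygon_area(clip(bev_corners(a), bev_corners(b)))
    # Vertical overlap between the two boxes
    inter_h = max(0.0, min(a[2] + a[5] / 2, b[2] + b[5] / 2) - max(a[2] - a[5] / 2, b[2] - b[5] / 2))
    inter = inter_area * inter_h
    union = a[3] * a[4] * a[5] + b[3] * b[4] * b[5] - inter
    return inter / union if union > 0 else 0.0
```

For two 2 m cubes at the same place, one rotated by 45°, this returns 1/√2 (about 0.707), whereas an IOU that ignored the yaw would report 1.0.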

Although 3D IOU is a cool metric, it's far from the only one we could use, and it can fail for objects that are far ahead: at long range, a small localization error can leave two boxes of the same object with no overlap at all. Other metrics, such as the distance between point clouds (Chamfer distance), the difference in orientations, or even the Euclidean distance between the centroids, can be used instead.
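As an illustration of one of these alternatives, the sketch below builds a cost matrix from the Euclidean distance between box centroids, which stays informative even when two boxes don't overlap at all. Since a lower distance means a better match, this matrix can be given to the Hungarian Algorithm directly, in place of 1 - IOU. The `max_dist` gate of 2 m is a hypothetical value you would tune for your sensor and scene.

```python
import math


def centroid_cost_matrix(prev_centers, curr_centers):
    """Euclidean distance (meters) between every center at t-1 (rows) and at t (columns)."""
    return [[math.dist(p, c) for c in curr_centers] for p in prev_centers]


def gate(cost, max_dist=2.0):
    """Hypothetical gating rule: the (row, column) pairs close enough to be plausible matches."""
    return [(i, j) for i, row in enumerate(cost) for j, d in enumerate(row) if d <= max_dist]


cost = centroid_cost_matrix([(0.0, 0.0, 0.0)], [(3.0, 4.0, 0.0), (0.0, 0.0, 1.0)])
print(cost)        # [[5.0, 1.0]]
print(gate(cost))  # [(0, 1)]
```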

The disappearing problem of overlap

In 2D, overlap is a problem, because cars can overlap in the image even though they're far from each other in the world. In 3D, this problem simply disappears: since we know the depth of each box, we know that two boxes don't overlap, even if they seem to overlap in a flat picture.
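A quick numerical check of this claim, as a sketch: two cars of the same size stand 10 m and 20 m in front of a pinhole camera, one directly behind the other. It assumes axis-aligned boxes in the camera frame (x right, y down, z forward) and made-up intrinsics (`f=700`, `cx=640`, `cy=360`); all names are illustrative.

```python
def project(x, y, z, f=700.0, cx=640.0, cy=360.0):
    """Pinhole projection of a camera-frame point (x right, y down, z forward) to pixels."""
    return (f * x / z + cx, f * y / z + cy)


def image_box(center, size):
    """Tightest 2D box (u1, v1, u2, v2) around the 8 projected corners of an axis-aligned 3D box."""
    (x, y, z), (w, h, l) = center, size  # w along x, h along y, l along z (meters)
    pts = [project(x + sx * w / 2, y + sy * h / 2, z + sz * l / 2)
           for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)]
    us, vs = [u for u, _ in pts], [v for _, v in pts]
    return (min(us), min(vs), max(us), max(vs))


def overlap(a_min, a_max, b_min, b_max):
    """Length of the overlap between two 1D intervals (0 if disjoint)."""
    return max(0.0, min(a_max, b_max) - max(a_min, b_min))


def iou_2d(a, b):
    inter = overlap(a[0], a[2], b[0], b[2]) * overlap(a[1], a[3], b[1], b[3])
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def iou_3d_axis_aligned(ca, sa, cb, sb):
    inter = 1.0
    for k in range(3):
        inter *= overlap(ca[k] - sa[k] / 2, ca[k] + sa[k] / 2, cb[k] - sb[k] / 2, cb[k] + sb[k] / 2)
    return inter / (sa[0] * sa[1] * sa[2] + sb[0] * sb[1] * sb[2] - inter)


near_car = ((0.0, 0.0, 10.0), (1.8, 1.5, 4.0))  # center (m), size (w, h, l)
far_car = ((0.0, 0.0, 20.0), (1.8, 1.5, 4.0))   # same size, 10 m further away
print(iou_2d(image_box(*near_car), image_box(*far_car)))  # positive: overlap in the image
print(iou_3d_axis_aligned(*near_car, *far_car))            # prints 0.0: no overlap in 3D
```

The far car's image box sits entirely inside the near car's (a 2D IOU of 16/81, about 0.2), while their 3D boxes are 6 m apart in depth.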

Let's pause: So far, we have seen that we should:

- Get 3D Bounding Boxes in 2 consecutive time steps

- Calculate the 3D IOU between the 2 lists, and associate them with the Hungarian Algorithm (which gets the colors and IDs right)

All that remains is a Kalman Filter to predict the next position.

Using 3D Kalman Filters

What is a 2D Kalman Filter?

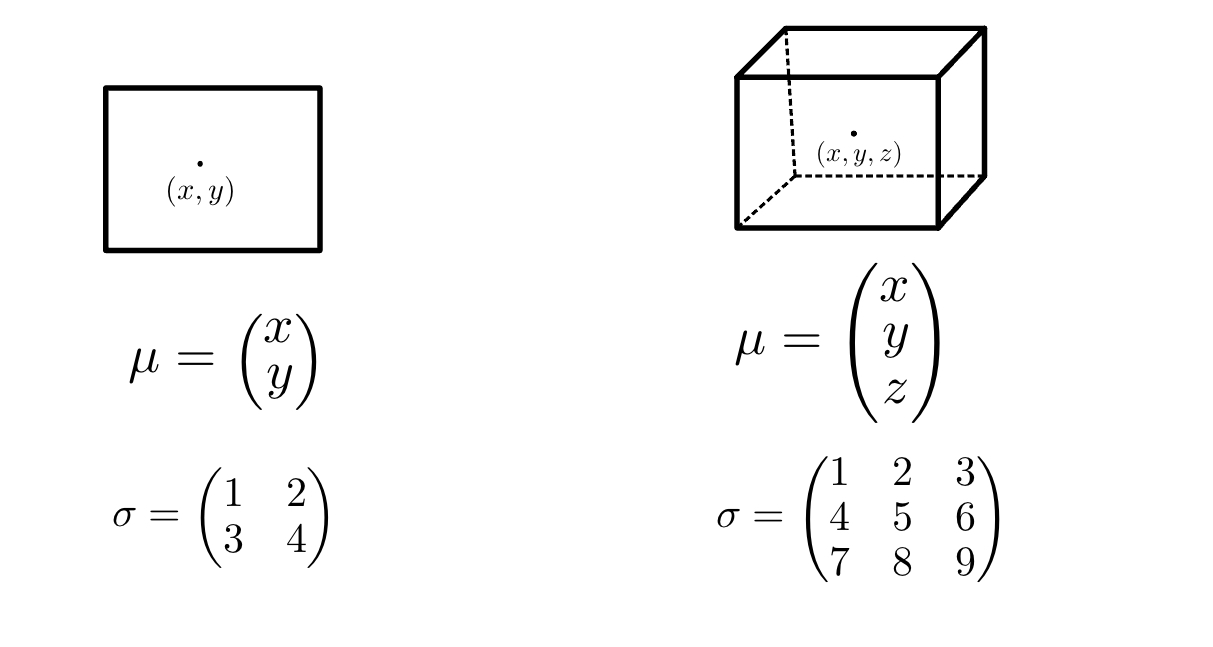

A 2D Kalman Filter is an algorithm that takes 2 coordinates and predicts the next position from their past values. It's a recursive algorithm, which means it keeps its previous estimate in memory and refines it at every new frame. In 2D MOT, we use it to predict the next position of the center of the bounding box (we can also predict all 4 coordinates of the bounding box).

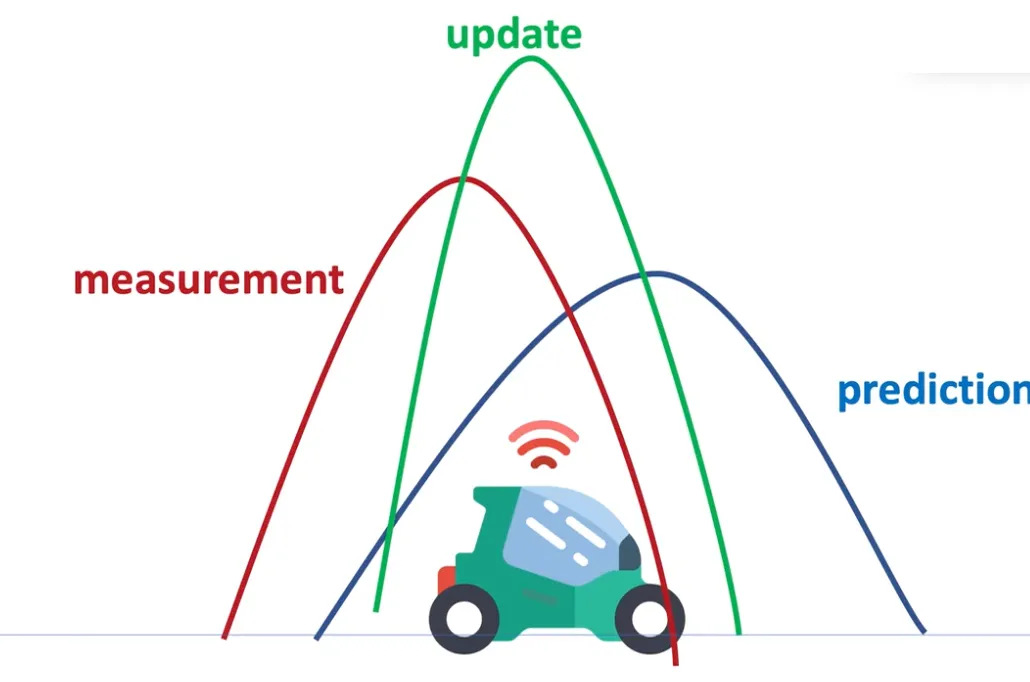

For that, we use 2 variables: μ, the mean, and σ, the standard deviation (our uncertainty). We represent the position of the bounding box with a 2D Gaussian, and we run a predict/update cycle. If you're not comfortable with Kalman Filters yet, you can learn more through my course LEARN KALMAN FILTERS.
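A minimal sketch of this predict/update cycle, assuming each coordinate of the box center is tracked as its own 1D Gaussian (equivalent to a 2D Gaussian with no correlation between the axes) and that the expected motion per frame is known. A real tracker would usually estimate the velocity in the state as well. `Gaussian`, `predict`, and `update` are illustrative names, and the variances are made-up values.

```python
from dataclasses import dataclass


@dataclass
class Gaussian:
    mu: float   # mean: our best estimate of the coordinate (pixels)
    var: float  # variance (sigma squared): how uncertain that estimate is


def predict(g: Gaussian, motion: float, motion_var: float) -> Gaussian:
    # Moving shifts the mean and adds uncertainty
    return Gaussian(g.mu + motion, g.var + motion_var)


def update(g: Gaussian, measurement: float, meas_var: float) -> Gaussian:
    # Weight the prediction and the detection by their uncertainties
    k = g.var / (g.var + meas_var)  # Kalman gain, between 0 and 1
    return Gaussian(g.mu + k * (measurement - g.mu), (1 - k) * g.var)


# One predict/update cycle per axis of the box center (u, v), treated independently
center = [Gaussian(100.0, 4.0), Gaussian(50.0, 4.0)]
motion, detection = (5.0, 0.0), (108.0, 51.0)
center = [update(predict(g, m, 1.0), z, 5.0) for g, m, z in zip(center, motion, detection)]
print(center)
```

For u, the update lands halfway between the prediction (105) and the detection (108): both have a variance of 5, so the Kalman gain is 0.5, and the variance shrinks from 5 to 2.5.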

What is a 3D Kalman Filter?

When we're in 3D, the least we can do is a 3D Kalman Filter, meaning we track X, Y, and Z.
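Going one step further, here is a sketch of a 3D constant-velocity Kalman Filter on the box center, assuming X, Y, and Z evolve independently so that each axis keeps its own [position, velocity] state and 2×2 covariance. The process noise follows a piecewise white-noise acceleration model; `q`, `r`, and the initial variances are hypothetical tuning values, and `ConstantVelocityKF3D` is an illustrative name. Full 3D trackers often also put the box size and yaw in the state.

```python
class ConstantVelocityKF3D:
    """Tracks a box center (x, y, z) with an independent constant-velocity model per axis."""

    def __init__(self, xyz, pos_var=1.0, vel_var=10.0, q=1.0, r=0.5):
        self.p = list(xyz)        # positions
        self.v = [0.0, 0.0, 0.0]  # velocities, unknown at first
        # Per-axis covariance [[P00, P01], [P01, P11]] stored as (P00, P01, P11)
        self.P = [[pos_var, 0.0, vel_var] for _ in range(3)]
        self.q, self.r = q, r     # process noise intensity, measurement variance

    def predict(self, dt):
        q = self.q
        for i in range(3):
            p00, p01, p11 = self.P[i]
            self.p[i] += self.v[i] * dt
            # P = F P F^T + Q with F = [[1, dt], [0, 1]]
            self.P[i] = [p00 + 2 * dt * p01 + dt * dt * p11 + q * dt ** 4 / 4,
                         p01 + dt * p11 + q * dt ** 3 / 2,
                         p11 + q * dt ** 2]
        return tuple(self.p)

    def update(self, xyz):
        for i in range(3):
            p00, p01, p11 = self.P[i]
            s = p00 + self.r                # innovation variance (H = [1, 0])
            k0, k1 = p00 / s, p01 / s       # Kalman gain for position and velocity
            y = xyz[i] - self.p[i]          # innovation: detection minus prediction
            self.p[i] += k0 * y
            self.v[i] += k1 * y
            self.P[i] = [(1 - k0) * p00, (1 - k0) * p01, p11 - k1 * p01]
        return tuple(self.p)


kf = ConstantVelocityKF3D((0.0, 0.0, 0.0))
for t in range(1, 21):  # a car moving +1 m along x every second
    kf.predict(1.0)
    kf.update((float(t), 0.0, 0.0))
print(kf.predict(1.0))  # close to (21, 0, 0)
```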

The uncertainty (the numbers used here are random) is harder to work with, since it now spans 3 dimensions, but this is the bare minimum we need when doing 3D object tracking.

We now have everything we need, so let's summarize!

Summary

- 3D Object Tracking is about tracking objects in the real world

- 3D Object Detection can be done from a camera, a LiDAR, or a RADAR. It is only used to generate the boxes.

- For every object, our detector will return a 3D Bounding Box.

- The Multi-Object Tracking process will be the same as in 2D, except the association will be done with 3D IOU, and the prediction with 3D Kalman Filters.

Now, here's a cool project you'll learn to code in my upcoming 3D Object Tracking course:

3D Object Tracking is one of the most fascinating areas in Perception. In self-driving vehicles, it's the very last step before "Planning". When we talk about 3D, and when we add the notion of time, we are in 4D!

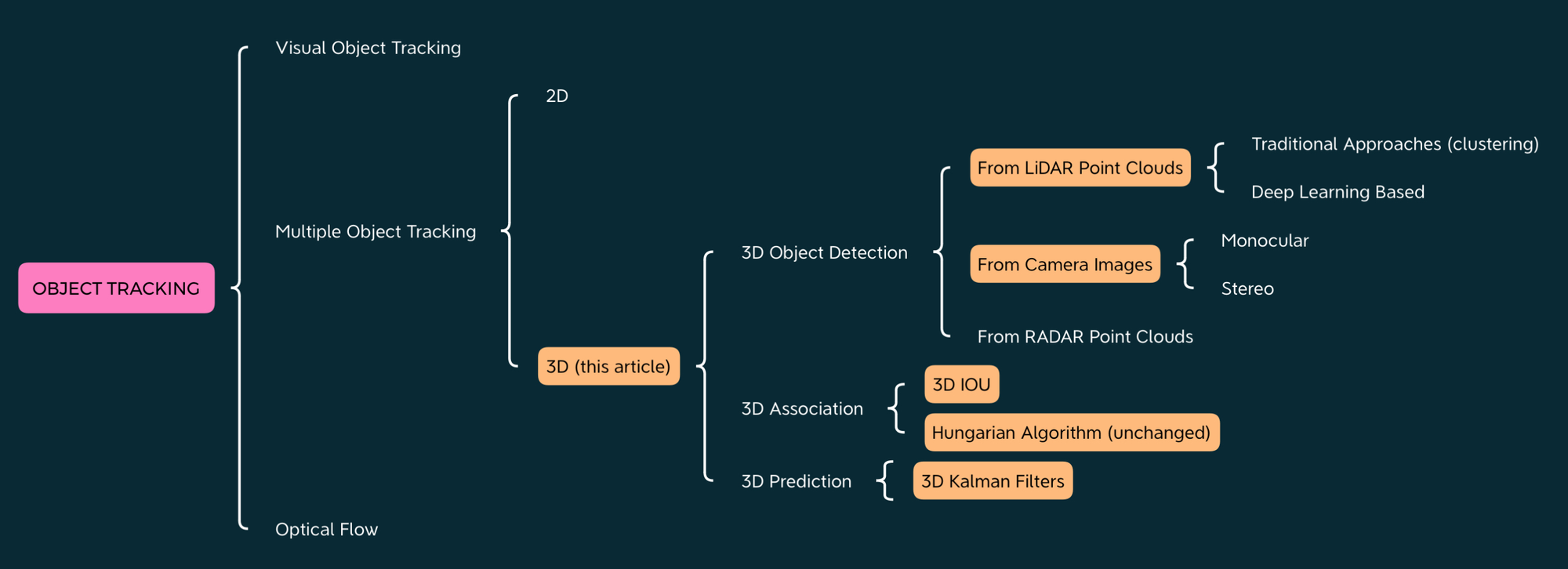

Mindmap

Here is a mindmap that recaps everything we've seen; what's in orange is what we covered today.

Next steps

- You can learn more about 3D Object Detection from my article How LiDAR Detection works

- You can learn more about the Hungarian Algorithm through my article Inside the Hungarian Algorithm — A Self-Driving Car Example

- You can learn more about Kalman Filters through my article Sensor Fusion

- You can learn more about 2D Object Tracking through my article Computer Vision for Tracking

Or...

Bonus: I'm launching a new course on 3D Tracking, and if you don't want to miss the launch offer, the only way is by being subscribed to my emails.