2D LiDAR: Too weak for real self-driving cars?

"I am tasked with the impossible," began the engineer, looking at me with a serious expression. "I have to make an autonomous robot work from a 2D LiDAR and a cheap camera! This will never work!" he yelled in panic, while carefully checking that his boss wasn't listening.

"Why do you want the 3D LiDAR so badly?" I asked in reply. "Isn't 2D good enough?"

Apparently, it wasn't.

"With 2D, I can't use the object detectors like PointPillars or Point-RCNN, etc... because the resolution is terrible. All I see are a few points, and it's not good enough. I need your help, our CEO doesn't listen to me, he wants to use cheap sensors only... But he might listen to you."

I could relate. A few years before this engineer reached out to me in a hidden corner of the CES in Las Vegas, I was working on autonomous shuttles, and I was FORCED to use the cheap 2D LiDARs as well.

I mean, you see what every single LiDAR on LinkedIn looks like, right? It's all stunning, beautiful, and it seemed to me in 2018/2019 that THE ENTIRE WORLD was powered by Velodyne. So imagine my surprise when I had to work with THIS:

So I felt this engineer's struggle, but I also knew he was wrong.

The thing is: We engineers have a bias. We see videos online, we see fancy datasets with 360° LiDARs, we see FMCW videos with tens of thousands of views on LinkedIn... but we don't take into account that all of these videos are published by companies with millions or even billions in funding, and that most of them are for now...

NOT PROFITABLE!

At the time, I told this engineer to either trust his CEO and try to make the 2D solution work, or quit. Because I knew his management would also much rather use the 100k LiDAR option, but choosing a 2D LiDAR is mostly a matter of cost.

Today, I'd like to try and give more depth to that answer, and in particular explain what you can do with a 2D LiDAR, who should and should not use it, and also, I'll start by explaining how 2D LiDARs work!

So let's begin...

What is a 2D LiDAR, and how does it work?

LiDAR stands for Light Detection And Ranging. It's THE sensor that everybody wants in self-driving cars. It works by firing a laser pulse and measuring the time the light takes to bounce back. From this measurement, it can accurately compute the distance to every object it hits and build a point cloud.
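To make the "measure the time it takes to come back" idea concrete, here is a minimal sketch of the time-of-flight arithmetic. The light travels to the object and back, so the distance is the speed of light times the round-trip time, divided by two. The function names are mine, for illustration only; real sensors do this in hardware.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_distance(round_trip_time_s: float) -> float:
    """Distance to the target, given the round-trip time of a laser pulse."""
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def distance_to_tof(distance_m: float) -> float:
    """Round-trip time a pulse needs to reach a target and come back."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A target 50 m away answers after roughly a third of a microsecond.
    print(f"{distance_to_tof(50.0) * 1e9:.1f} ns")
```

The takeaway is the time scale: at these distances the sensor has to resolve differences of a few nanoseconds, which is part of why LiDAR electronics are not trivial.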

Hey — I know you know at least remotely what a LiDAR is, and I also know that you're clever enough to find my article on the different Types of LiDARs if you'd like to get a proper introduction...

So let me move on quickly.

2D vs 3D LiDAR: What's the difference?

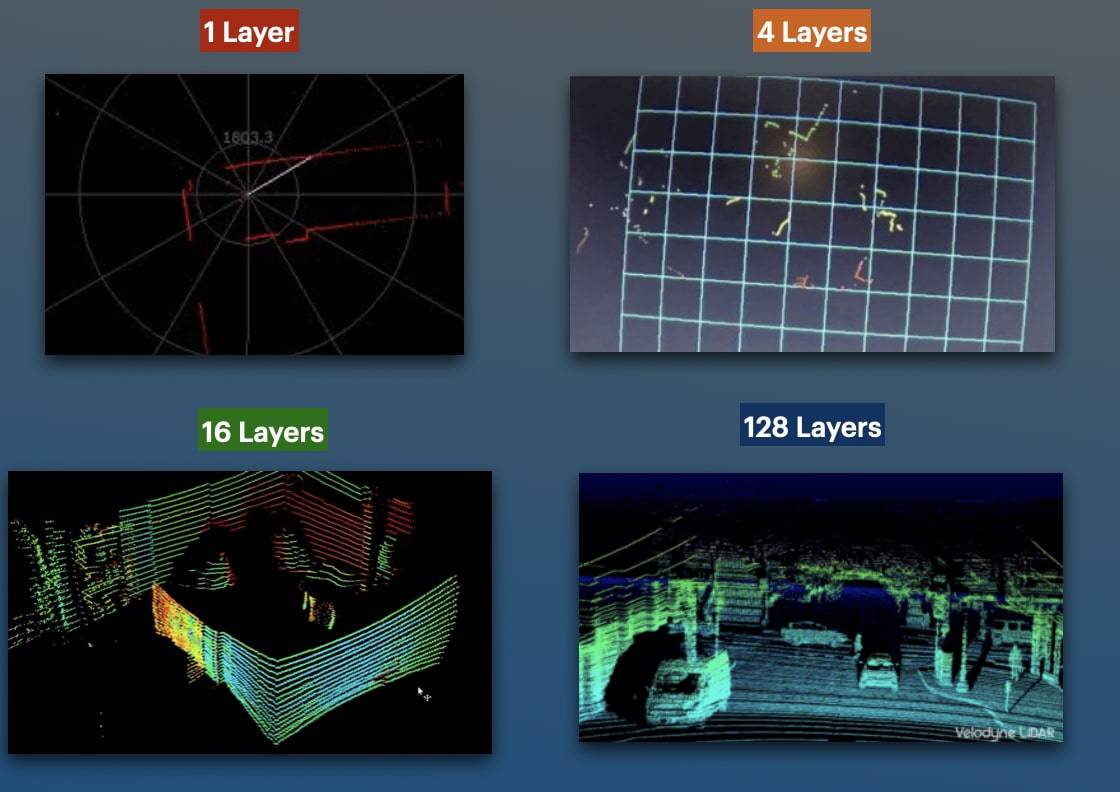

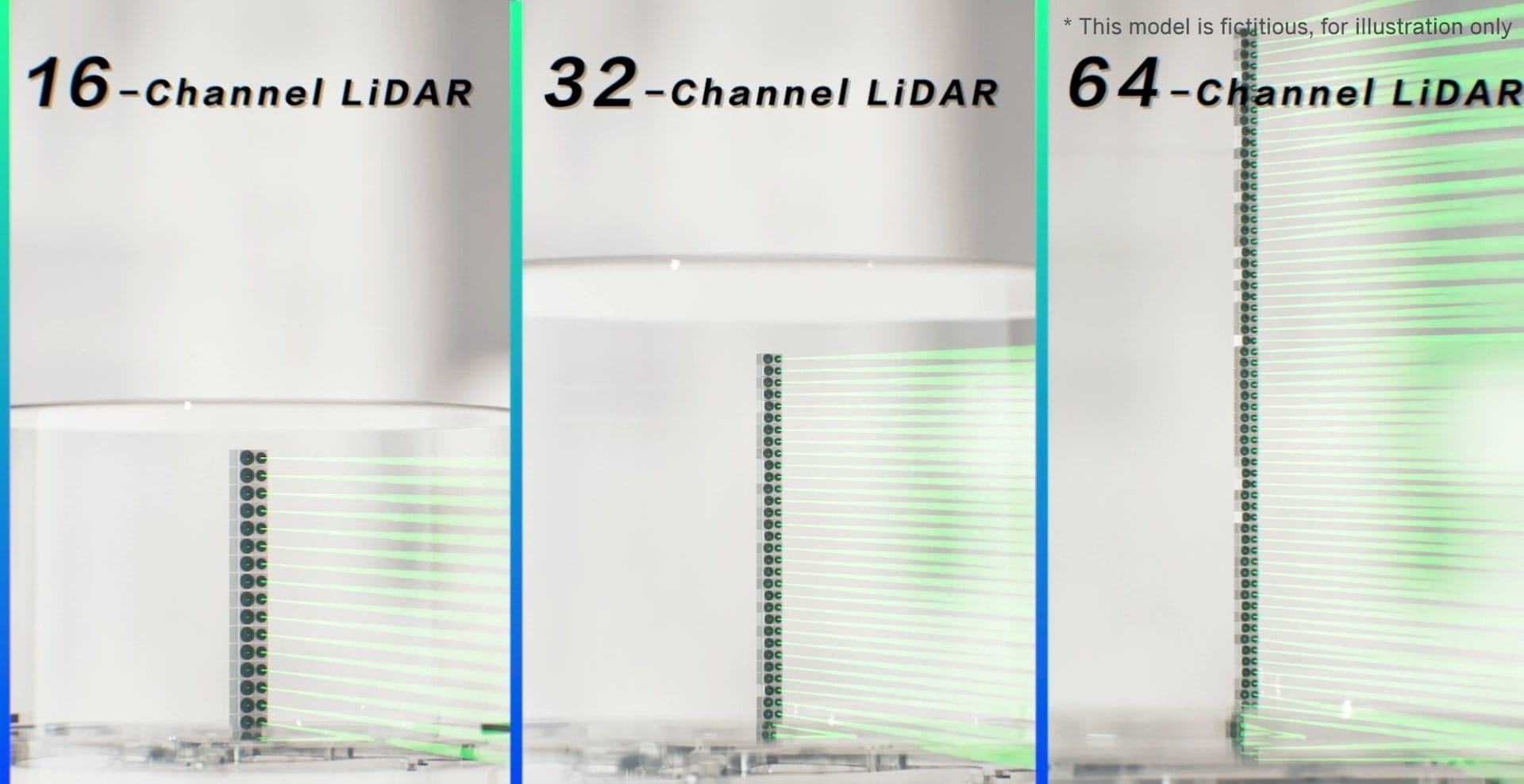

I'd like to talk about the idea of 2D. A LiDAR works by sending light waves through vertical "channels", which people sometimes call "layers". If you have 1 layer, you have a flat output; if you have 16 layers, you're starting to get a 3D view. Some LiDARs today have 128+ layers.

So how many layers do you need to be 2D or 3D?

Technically, your LiDAR is 2D when it's using just one channel, and becomes 3D when it's using 2 or more. But practically, I wouldn't say that. A 4-Layer LiDAR is still terrible quality, and you can't really benefit from elevation.

I'd say any LiDAR that has under 16 channels can be considered 2D... and this is because you can't really get a 3D shape unless you have this many channels. Why not 7 or 8? Yes, we could, but in autonomous driving, LiDARs either use 4 channels, or they immediately start at 16 channels (then 32, 64, 128).

So how do you get 16 channels or more?

I think this image, extracted from this magnificent article by Hesai, is pretty intuitive to understand:

When you have 16 lasers, you have a 16-layer LiDAR. If you have just one laser, you have a 1-Layer LiDAR. Simple. Got it? Laser = Layers = Channels.

Then all you gotta do is stack these lasers in your LiDAR, like this:

This is technically good for intuition, but in most recent LiDARs, we work with solid-state technology. This means the LiDAR itself doesn't rotate, but a mirror inside of it does. The laser is reflected off a mirror that rotates horizontally, and sometimes vertically.

Don't get it? In this video from Hesai (well, they happen to do really great work at explaining LiDARs, you should definitely check them out!), you can see how a single laser sweeps the scene both horizontally and vertically.

This is a 3D LiDAR, using solid-state technology!

And how do you achieve this? You need a really high frequency, an Ethernet interface, and super low response times. Your LiDAR type must be very specific, like a MEMS (micro-electromechanical systems) LiDAR for example. I invite you to check my LiDAR Types article to see what it means.

So let's come back to 2D:

What's the difference between this and a 2D LiDAR? As you can guess, it won't rotate vertically, so in practice, the field of view will be lower, and you will only get a flat 2D view:

So the real question we want to ask isn't "Is a 2D LiDAR too weak?" but "Which field of view is good enough for self-driving cars?".

I specify self-driving cars, because after all, there are millions of cases where a 2D LiDAR is more than a good enough solution. For example, industrial applications (industrial automation, industrial vehicles, inside factories, ...), home robots, or simply when we want to measure distances. In these cases, a 2D LiDAR is not only a cost-effective solution, but it's also the only sane one. Why use a bazooka to kill a fly?

I mean, do you really want to use an FMCW LiDAR on a Turtlebot 4?

Okay, so now that this is out of the way — let's think purely in terms of self-driving cars, and in terms of algorithms...

What are the algorithms I can run on 2D LiDARs?

Back when I worked on autonomous shuttles, I had exactly the same problem as our panicked engineer. "How do I work with a 2D LiDAR??? It's chaos!! I want the 4K MAX PRO +++ LiDAR!! NOWWWW!!!!!". At the time, our shuttles were working with LiDARs from a company named SICK. SICK offers many 2D LiDAR sensors in the range of 4,000-5,000€, which was perfect for our startup, since we wanted to keep our solution low-cost.

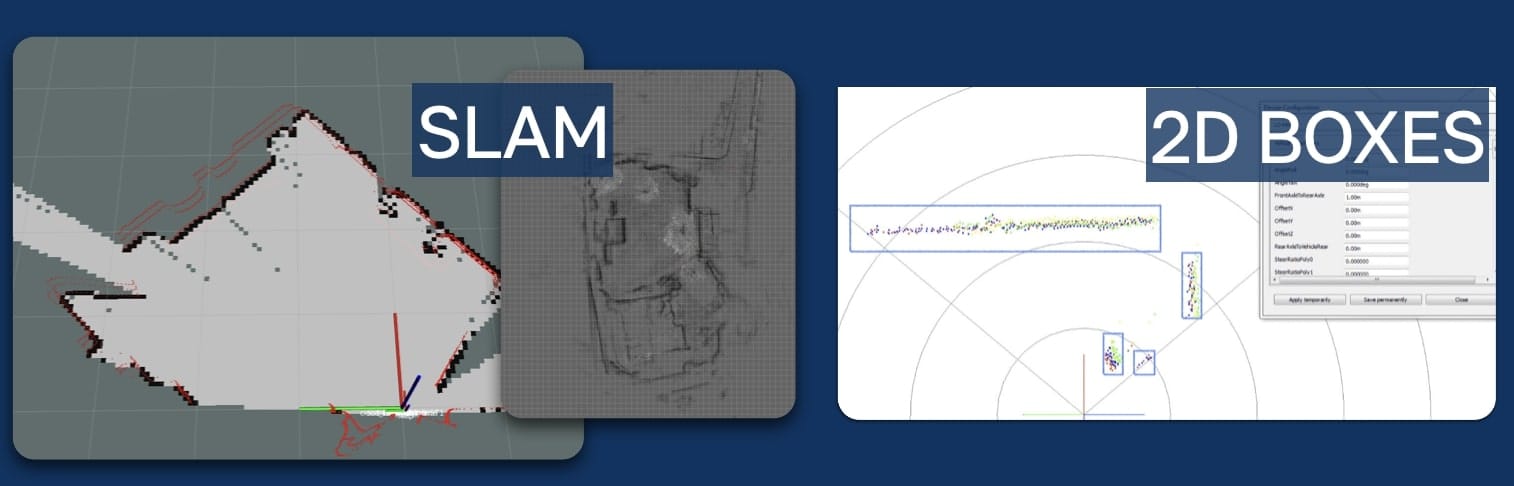

With a 2D LiDAR, you can do 2D object detection, because the sensor automatically gives you the 2D Bounding Boxes. You can also do SLAM (Simultaneous Localization And Mapping) and therefore have mapping and odometry. You can try segmentation, and even point filtering based on other values like intensity or reflectivity.

These algorithms can be implemented by you manually (clustering, segmentation, ...) or you could use ROS Packages; some of them are specifically developed for 2D. If you have a SICK LiDAR for example, I'd recommend checking out this package.
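If you go the manual route, a first version of 2D object detection can be surprisingly small. The sketch below is one possible approach, not what any specific sensor or ROS package does internally: it walks through the scan points in angular order, starts a new cluster whenever two consecutive points are too far apart, and then wraps each cluster in an axis-aligned 2D Bounding Box. The `max_gap_m` and `min_points` thresholds are assumptions you would tune for your own sensor.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]             # (x, y) in meters, sensor frame
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def cluster_scan(points: List[Point],
                 max_gap_m: float = 0.5,
                 min_points: int = 3) -> List[List[Point]]:
    """Group consecutive scan points that are closer than max_gap_m.

    Assumes `points` is ordered by scan angle, as a 2D LiDAR delivers it.
    Clusters with fewer than min_points points are discarded as noise.
    """
    clusters: List[List[Point]] = []
    current: List[Point] = []
    for p in points:
        # A large jump between neighbours means we moved to another object.
        if current and math.dist(current[-1], p) > max_gap_m:
            if len(current) >= min_points:
                clusters.append(current)
            current = []
        current.append(p)
    if len(current) >= min_points:
        clusters.append(current)
    return clusters


def bounding_box(cluster: List[Point]) -> Box:
    """Axis-aligned 2D Bounding Box around one cluster."""
    xs = [x for x, _ in cluster]
    ys = [y for _, y in cluster]
    return (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    scan = [(5.0, 0.0), (5.0, 0.1), (5.0, 0.2),   # first object
            (2.0, 3.0), (2.1, 3.0), (2.2, 3.0),   # second object
            (9.0, 9.0)]                           # isolated point (noise)
    for c in cluster_scan(scan):
        print(bounding_box(c))
```

This simple version does not merge a cluster that wraps around the start and end of a 360° scan, and a fixed gap threshold gets less reliable at long range, where neighbouring beams are naturally further apart.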

The good point of this is that, unlike with cameras, this 2D incorporates distances. It's not a camera-space 2D, but a Bird's Eye View 2D, which is infinitely better.

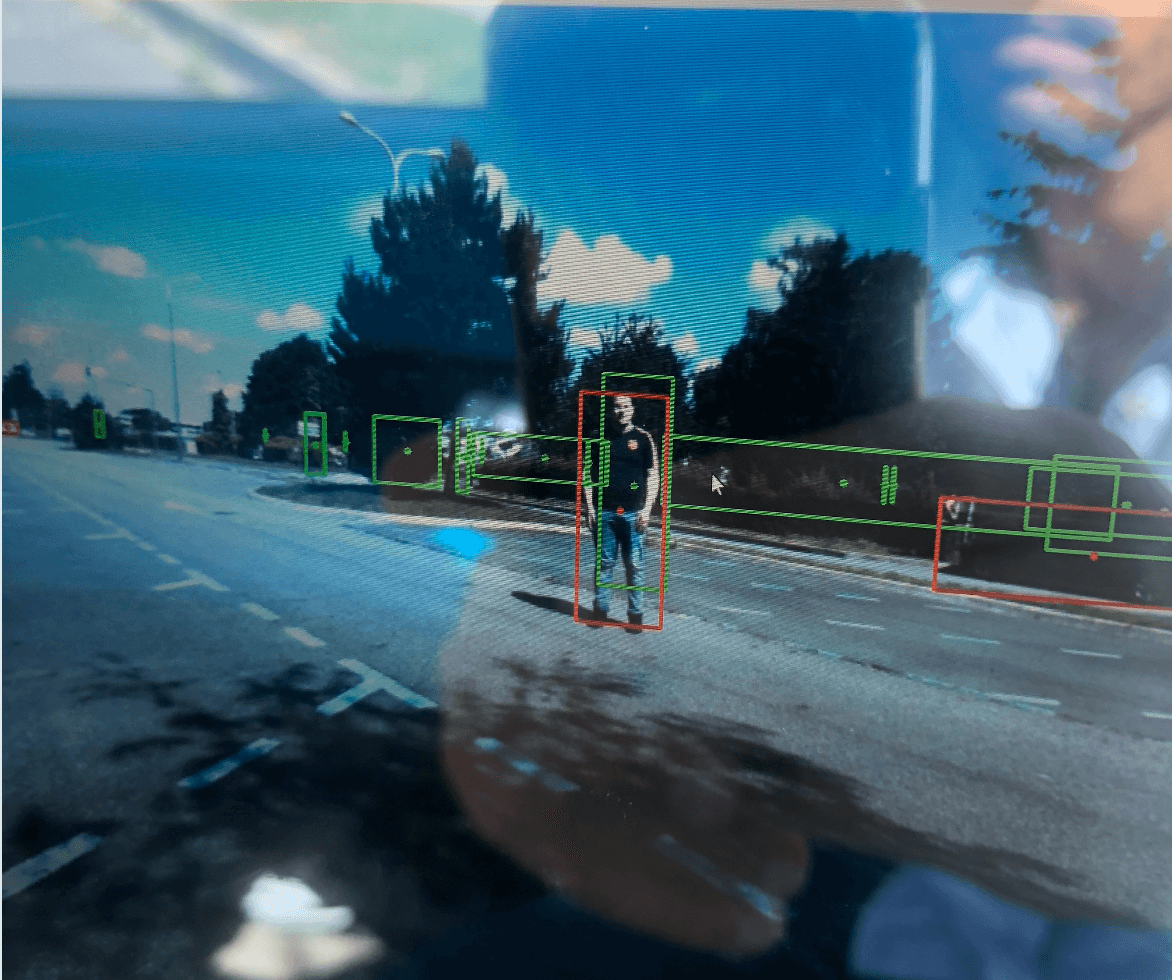
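To see why this 2D is a bird's-eye view, it helps to look at what the sensor actually outputs: one distance per angle. Converting that to top-down (x, y) points is plain trigonometry. Here is a minimal sketch; the parameter names loosely mirror the fields of the ROS `sensor_msgs/LaserScan` message, but the function itself is my own illustration, not part of any package.

```python
import math
from typing import List, Tuple


def scan_to_points(ranges: List[float],
                   angle_min_rad: float,
                   angle_increment_rad: float,
                   range_max_m: float) -> List[Tuple[float, float]]:
    """Convert a 2D scan (one range per beam) into top-down (x, y) points.

    Convention assumed here: x points forward, y points left, angles are
    measured counter-clockwise from the x axis.
    """
    points = []
    for i, r in enumerate(ranges):
        # Drop beams with no valid return (inf/NaN, zero, or beyond max range).
        if not math.isfinite(r) or r <= 0.0 or r > range_max_m:
            continue
        theta = angle_min_rad + i * angle_increment_rad
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Three beams: right (-90°), straight ahead (0°), left (+90°).
    pts = scan_to_points([1.0, float("inf"), 2.0],
                         angle_min_rad=-math.pi / 2,
                         angle_increment_rad=math.pi / 2,
                         range_max_m=10.0)
    print(pts)
```

Every point comes out in meters, directly in the plane the vehicle drives in, which is exactly what a planner needs and what a camera image alone cannot give you.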

But if you like cameras, using 2D Bounding Boxes, you can also do "Visual Fusion" (sensor fusion between LiDAR and camera), as well as all the safety applications, like collision avoidance by braking at any wall or object, or obstacle avoidance (by braking or overtaking). Here is an example fusing the LiDAR and camera images on the SICK LiDAR:

As you can see, we can do many things with 2D LiDARs. It works!
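For the LiDAR and camera fusion part, the core idea is to project each LiDAR point into the image, then read off the distance of the points that land inside a camera Bounding Box. Here is a minimal sketch under strong assumptions that I'm making up for illustration: a pinhole camera, a camera looking in the same direction as the LiDAR, and only a height offset between the two. The intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values; a real setup needs a proper calibration and a full extrinsic transform.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[float, float, float]  # (u, v, depth in meters)


def project_to_image(points: List[Tuple[float, float]],
                     fx: float, fy: float, cx: float, cy: float,
                     width: int, height: int,
                     camera_height_above_lidar_m: float = 0.0,
                     min_depth_m: float = 0.1) -> List[Pixel]:
    """Project top-down LiDAR points (x forward, y left) into the image.

    Assumed camera frame: X right, Y down, Z forward, same heading as the
    LiDAR, mounted camera_height_above_lidar_m above the scan plane.
    """
    pixels = []
    for x, y in points:
        if x < min_depth_m:      # behind the camera or too close
            continue
        u = fx * (-y) / x + cx   # LiDAR "left" is camera "negative X"
        v = fy * camera_height_above_lidar_m / x + cy
        if 0 <= u < width and 0 <= v < height:
            pixels.append((u, v, x))
    return pixels


def distance_for_box(pixels: List[Pixel],
                     box: Tuple[float, float, float, float]) -> Optional[float]:
    """Closest LiDAR depth among the points falling inside a camera box.

    box is (u_min, v_min, u_max, v_max) in pixels; returns None if no
    LiDAR point lands in it.
    """
    u_min, v_min, u_max, v_max = box
    depths = [d for u, v, d in pixels
              if u_min <= u <= u_max and v_min <= v <= v_max]
    return min(depths) if depths else None


if __name__ == "__main__":
    px = project_to_image([(10.0, 0.0), (-5.0, 0.0)],
                          fx=500, fy=500, cx=320, cy=240,
                          width=640, height=480)
    print(distance_for_box(px, (300, 200, 340, 280)))
```

Taking the closest depth in the box is a conservative choice for safety applications; a median would be more robust to stray points from the background.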

Now let me tell you a few things you CAN'T do.

Who should and shouldn't use a 2D LiDAR?

Okay, let's begin with the limitations...

First, it's safe to say you can forget about 3D Deep Learning. By this, I mean that most of the 3D Deep Learning algorithms that you see online come from 3D LiDAR datasets. All these fancy algorithms have been designed to output 3D Boxes, and on top of that, they've been trained on 3D data. Trying them on a 4-Layer LiDAR would be sick (muahaha).

This means that to find objects, you either rely on the two or three packages you can find online, or you're going to struggle with manual point cloud processing (a visit to my Point Clouds Conqueror course would be a great help if this is your case).

Now, there's something more important:

These 2D sensors often have a sensing range of under 100 meters, which makes them suitable for any low-speed vehicle, whether it's a shuttle, an autonomous golf cart, mobile applications, or mobile robots. Why low-speed? Because the "range" isn't incredibly high with these "low-cost" LiDARs: the vehicle must be able to stop within the distance it can actually see, and therefore, you can't afford to go fast.
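You can put rough numbers on this link between range and speed. A vehicle needs to stop within the distance at which it reliably detects an obstacle, and the stopping distance is the distance covered during the reaction time plus the braking distance: d = v·t + v²/(2a). The sketch below solves that for the maximum speed. All the values used (usable range, reaction time, deceleration) are illustrative assumptions of mine, not figures from a datasheet or from any real vehicle.

```python
import math


def stopping_distance_m(speed_m_s: float,
                        reaction_time_s: float,
                        deceleration_m_s2: float) -> float:
    """Distance travelled during the reaction time plus braking distance."""
    return (speed_m_s * reaction_time_s
            + speed_m_s ** 2 / (2.0 * deceleration_m_s2))


def max_safe_speed_m_s(usable_range_m: float,
                       reaction_time_s: float,
                       deceleration_m_s2: float) -> float:
    """Highest speed at which the vehicle still stops within usable_range_m.

    Positive root of v*t + v^2 / (2a) = d.
    """
    a, t = deceleration_m_s2, reaction_time_s
    return -a * t + math.sqrt((a * t) ** 2 + 2.0 * a * usable_range_m)


if __name__ == "__main__":
    # Hypothetical numbers: obstacles reliably detected at 30 m,
    # 0.5 s system latency, comfortable braking at 3 m/s^2.
    v = max_safe_speed_m_s(30.0, reaction_time_s=0.5, deceleration_m_s2=3.0)
    print(f"{v:.1f} m/s = {v * 3.6:.1f} km/h")
```

Note that the usable range is usually well below the advertised maximum range, since small or dark objects are detected later. That pushes the safe speed down further, which is why these sensors fit shuttles and golf carts much better than highway driving.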

To illustrate this concept, let me bring in Avinoam Barak, CEO of Carteav, an autonomous golf cart company that works exclusively on LOW-SPEED self-driving cars:

Now what's interesting is that when using cheap sensors and going at low speed, you still benefit from the collision protection, but you also benefit from lower memory usage and lighter processing requirements, while keeping high performance and high accuracy. This video comes from an interview available in my Edgeneer's Land Community Membership, in which Avinoam tells us everything about his autonomous golf cart. You can learn more here.

Okay, we've seen quite a lot, let's try to summarize it...

Summary

- A 2D LiDAR uses very few channels to generate its point cloud. Therefore, you only get a flat 2D view. These sensors are more affordable than the 3D LiDARs, while remaining powerful.

- The main difference between a 2D and a 3D LiDAR is the number of channels. Technically, a LiDAR is 3D as soon as it has 2 or more channels; practically, I'd only call it 3D from 16 channels up.

- Using a 2D LiDAR, you can still do many "autonomous" algorithms, such as 2D Object Detection, Segmentation, Filtering, or even SLAM.

- On the other hand, you cannot use the fancy 3D Deep Learning, because these algorithms are optimized for 3D point clouds, and trained from 3D LiDAR datasets.

- For many industries, a 2D LiDAR is the default choice. In industrial applications or mobile robots, you don't even think of 3D, because you don't need to.

- In autonomous vehicles, 2D LiDARs are a great solution for robots and cars that run at low speed. Not only can you still use the algorithms, but you can also buy several of them and fuse them with RADARs or cameras.

Next Steps

Interested in LiDARs? Scroll below for a few articles you may like.